Credo’s Q2 FY26 Earnings: Reliability, Not Cables

How a tiny link glitch created a billion-dollar toll road—and why hyperscalers are standardizing on Credo’s invisible infrastructure.

TL;DR:

Margins, customer ramp patterns, and operating leverage all point to a business becoming embedded in hyperscaler workflows.

Credo’s real product is reliability intelligence, not AECs—Pilot could be the start of a long-duration data moat.

At today’s valuation, investors are betting on the toll-road story—and the next 12–18 months will reveal whether it’s real or priced in.

When Cables Start Thinking

Somewhere in a hyperscaler data center, thousands of GPUs are six days into an AI training run. The compute bill is approaching $2 million. And then, for a fraction of a second, a single connection flickers.

In traditional data centers, this momentary disruption (what engineers call a “link flap”) would be invisible. Traffic routes around. Users notice nothing. But AI training clusters don’t work that way. When thousands of processors must coordinate every calculation in lockstep, a single flicker doesn’t route around anything. It halts everything. Without a recent checkpoint, six days of progress are gone. The job restarts from the last saved state, burning up to another week of GPU time at tens of thousands of dollars per hour.
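To make the scale concrete, here is a rough back-of-the-envelope sketch of the scenario above. It uses only the figures from the text (a bill of about $2 million after six days). The function names are hypothetical, and the one-hour checkpoint case is an assumption added purely for contrast, since real checkpoint intervals vary by operator.

```python
# Illustrative arithmetic only; the $2M and six-day figures come from the scenario in the text.
HOURS_PER_DAY = 24


def implied_hourly_rate(total_bill_usd: float, days_elapsed: float) -> float:
    """Average cluster cost per hour implied by a bill accrued over some days."""
    return total_bill_usd / (days_elapsed * HOURS_PER_DAY)


def rerun_cost(hourly_rate_usd: float, hours_lost: float) -> float:
    """Cost of recomputing the work lost since the last usable checkpoint."""
    return hourly_rate_usd * hours_lost


rate = implied_hourly_rate(2_000_000, 6)

# Worst case from the text: all six days must be recomputed.
worst_case = rerun_cost(rate, 6 * HOURS_PER_DAY)

# Assumed contrast case (not from the text): a checkpoint was saved one hour before the flap.
one_hour = rerun_cost(rate, 1)

print(f"implied rate:       ${rate:,.0f} per hour")
print(f"six days lost:      ${worst_case:,.0f}")
print(f"one hour lost:      ${one_hour:,.0f}")
```

The implied rate works out to roughly $13,900 per hour, so the damage from a single flap scales directly with how much work sits between the failure and the last checkpoint.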

This is the problem that explains Credo Technology’s Q2 FY26 earnings report. Not faster cables or cheaper components. The prevention of catastrophically expensive glitches in the most valuable computing infrastructure ever built.

The numbers from the quarter were impressive in the conventional sense: revenue of $268 million, up 272% YoY; earnings that beat estimates by 37%; guidance that exceeded Wall Street expectations by an even wider margin. But impressive AI semiconductor numbers aren’t surprising anymore. They’re expected. The interesting question is what these particular numbers reveal about what kind of business Credo is actually building.

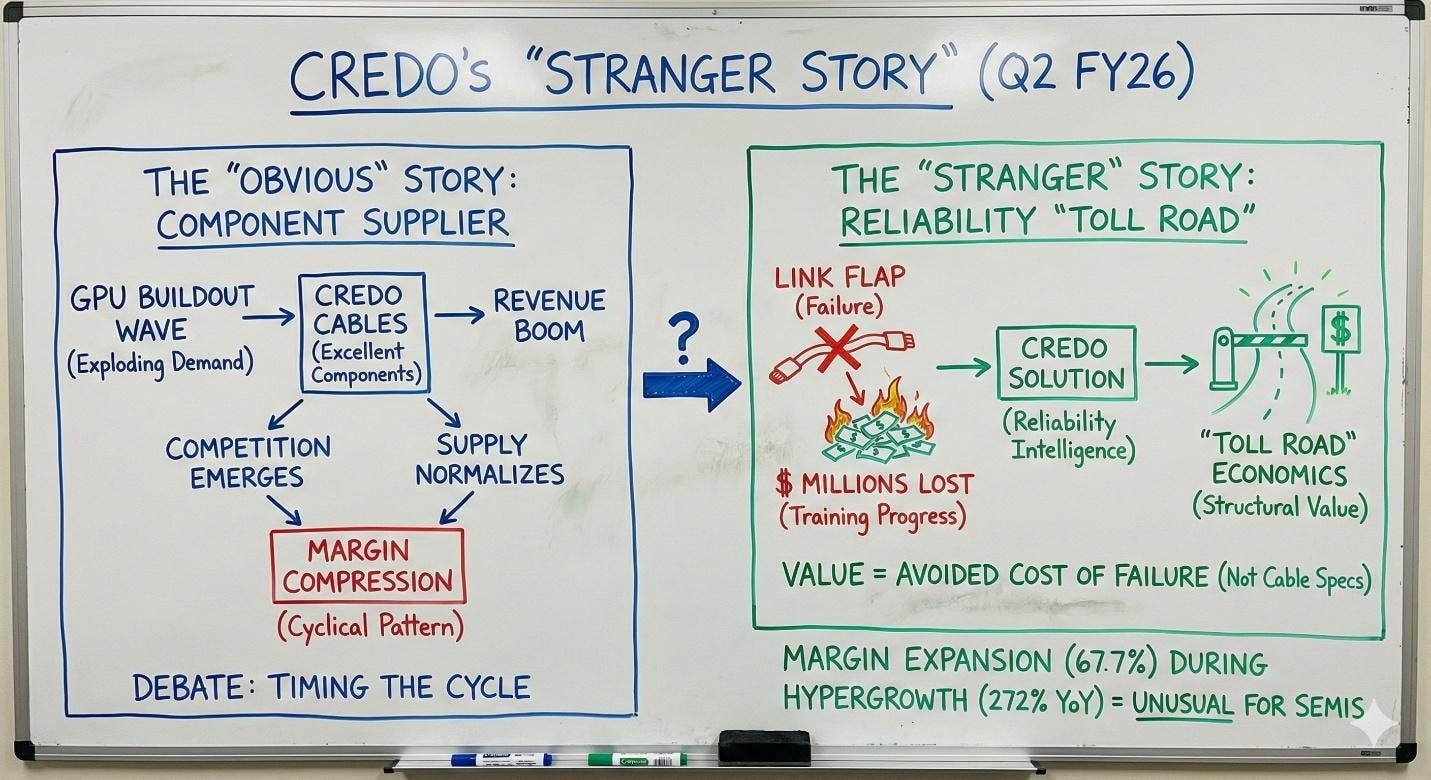

A Stranger Story

The obvious interpretation is that Credo is a well-positioned component supplier riding the GPU buildout wave. They make excellent cables for AI clusters. Demand for AI clusters is exploding. Therefore Credo’s revenue is exploding. When the buildout slows, growth will normalize. When competition emerges, margins will compress. The investment debate, under this view, is about timing the cycle and estimating terminal multiples for a high-quality semiconductor company.

The numbers tell a stranger story.

Consider the gross margins. Credo posted 67.7% gross margins while growing revenue 272% YoY. This isn’t supposed to happen in semiconductors. Hypergrowth brings pricing pressure. Customers with volume leverage demand discounts. Competitors see the margins and pile in. The normal pattern is margin compression during scale-up, not expansion.

So why would Credo’s margins expand during hypergrowth? One possibility is temporary supply constraints—they can charge whatever they want because alternatives don’t exist yet. This explanation predicts margins will compress as supply normalizes and competition emerges.

Another possibility is that Credo isn’t competing on cable specifications at all. They’re competing against the cost of the link flap. When a connection failure can destroy millions of dollars in training progress, the solution that prevents failures isn’t evaluated against other cables. It’s evaluated against the cost of the failure it prevents. Under this view, the margins aren’t temporary pricing power. They’re the economics of collecting tolls on the only reliable road through AI infrastructure.

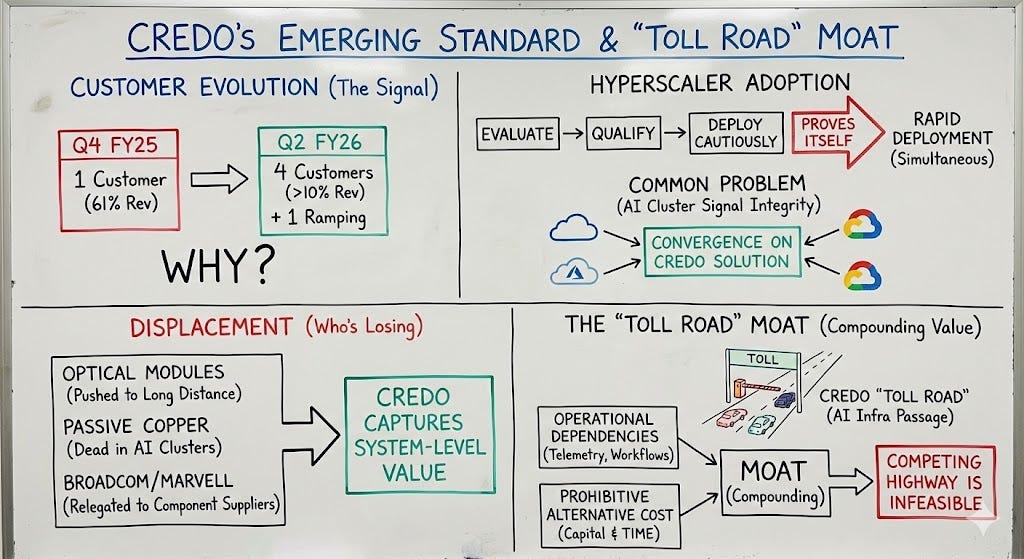

The Standard Nobody Voted For

The customer evolution points in the same direction. In Q4 of fiscal 2025, one customer represented 61% of Credo’s revenue. By Q2 of fiscal 2026—just two quarters later—four customers each exceeded 10% of revenue, with a fifth beginning to contribute.

The surface read is diversification reducing concentration risk. The deeper question is: why would four hyperscalers independently reach significant deployment scale within the same two-quarter window?

Hyperscalers don’t adopt new connectivity standards gradually. They evaluate exhaustively, qualify rigorously, and deploy cautiously—until the solution proves itself, at which point they deploy as fast as their supply chain allows. The simultaneity of adoption across four major customers suggests they all encountered the same problem and converged on the same solution. This is the signature of a standard emerging, not a vendor winning contracts.

The pattern becomes clearer when you ask who’s losing. Optical module vendors are being pushed to longer distances where their physics still dominate. Passive copper cables are effectively dead in AI clusters—the signal integrity requirements are simply too demanding. Even Broadcom and Marvell, who supply the DSPs that Credo’s solutions incorporate, are relegated to component suppliers while Credo captures the system-level value.

The displacement isn’t just about better products. It’s about the difference between selling parts and collecting tolls. Broadcom sells a chip. Credo sells passage through the AI infrastructure bottleneck.

And like all toll roads, the cost of building an alternative route is prohibitive, not just in capital but in time. Every day Credo’s road carries more traffic, the operational dependencies deepen. Every hyperscaler that builds monitoring workflows around Credo’s telemetry makes a competing highway less feasible. The moat isn’t static; it compounds.

The Economics of Embedding

The operating leverage tells the same story from a different angle. Revenue grew 20% sequentially in Q2. Operating expenses grew 5%.

In a normal hardware business, incremental revenue requires incremental cost—more manufacturing support, more sales engineering, more customer success. When revenue dramatically outpaces expenses, it usually means the company is underinvesting and storing up problems, or the business model has fundamentally different economics than it appears.

Credo is clearly not underinvesting. They’re adding headcount, ramping new products, and making acquisitions. The leverage is structural. Each additional deployment leverages existing qualifications, existing engineering knowledge, existing operational playbooks. The marginal cost of serving the next hyperscaler is dramatically lower than the marginal revenue.

These aren’t box-shipping economics. They’re the economics of a business that has embedded itself into how work gets done.
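The sequential figures quoted in this section (revenue up 20%, operating expenses up 5%) are enough to quantify the leverage without knowing either dollar amount. A minimal sketch, with a hypothetical helper name:

```python
def opex_ratio_change(revenue_growth: float, opex_growth: float) -> float:
    """Factor by which opex as a share of revenue changes over one period."""
    return (1 + opex_growth) / (1 + revenue_growth)


# Growth rates from the text: revenue +20% and operating expenses +5%, quarter over quarter.
factor = opex_ratio_change(revenue_growth=0.20, opex_growth=0.05)

print(f"opex/revenue ratio multiplies by {factor:.3f}")
print(f"relative decline in the ratio:   {1 - factor:.1%}")
```

Whatever share of revenue operating expenses consumed in the prior quarter, that share fell by about one eighth in a single quarter. This is a statement about the ratio only; it says nothing about absolute margins, which the text does not break out here.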

Why Now

The “why now” connects to NVIDIA’s architecture decisions.

The transition from 100G to 200G per lane isn’t just a specification upgrade. It’s the moment when NVIDIA’s GB200 and GH200 cluster designs make network reliability non-negotiable. In these architectures, thousands of GPUs share memory and coordinate computation across the network fabric. The network isn’t connecting computers—the network is the computer.

When the network is the computer, a network glitch is a computer crash. And when each hour of computer time costs tens of thousands of dollars, the tolerance for glitches approaches zero. This is why hyperscalers are standardizing on Credo’s approach despite having built their empires on optical networking. The physics of AI training changed the economics of connectivity.

Management’s language has shifted accordingly. They no longer describe AECs as a product competing for design wins. They describe them as “the de facto standard” for AI cluster connectivity up to seven meters. This isn’t marketing aspiration—it’s operational reality across four major hyperscalers.

The Product They Said Least About

The most interesting product Credo mentioned is the one they said least about.

Pilot is Credo’s telemetry and optimization layer: software that monitors every connection in real time, predicts failures before they happen, and feeds operational data back into system improvements. Management mentions it briefly in every earnings call without disclosing attach rates, pricing, or customer penetration.

This matters because Pilot transforms the business model from selling hardware to accumulating operational intelligence. Every AEC deployment becomes a sensor node. Every successful deployment generates data that improves all future deployments. Every hyperscaler that standardizes on Credo’s approach adds to a dataset no competitor can replicate.

If that sounds like the beginnings of a data moat, you’re paying attention.

(And if you’re wondering whether hyperscalers would share failure data with a vendor, consider that they already share it with server and GPU suppliers. When the alternative is more multimillion-dollar glitches, transparency looks cheap.)

The clearest sign of where Pilot is heading is ZeroFlap Optics—Credo’s new optical product line that integrates telemetry directly into network management software. The product creates what management calls a “check-engine light” for every link in an AI cluster. This isn’t a better optical module. It’s the extension of operational intelligence into a new physical domain.

The pattern extends further. Active LED Cables apply the same approach to 30-meter distances. OmniConnect gearboxes extend it to memory-to-compute connections. Each new product line is another segment of road where Credo can collect tolls—and another domain where the operational integration deepens.

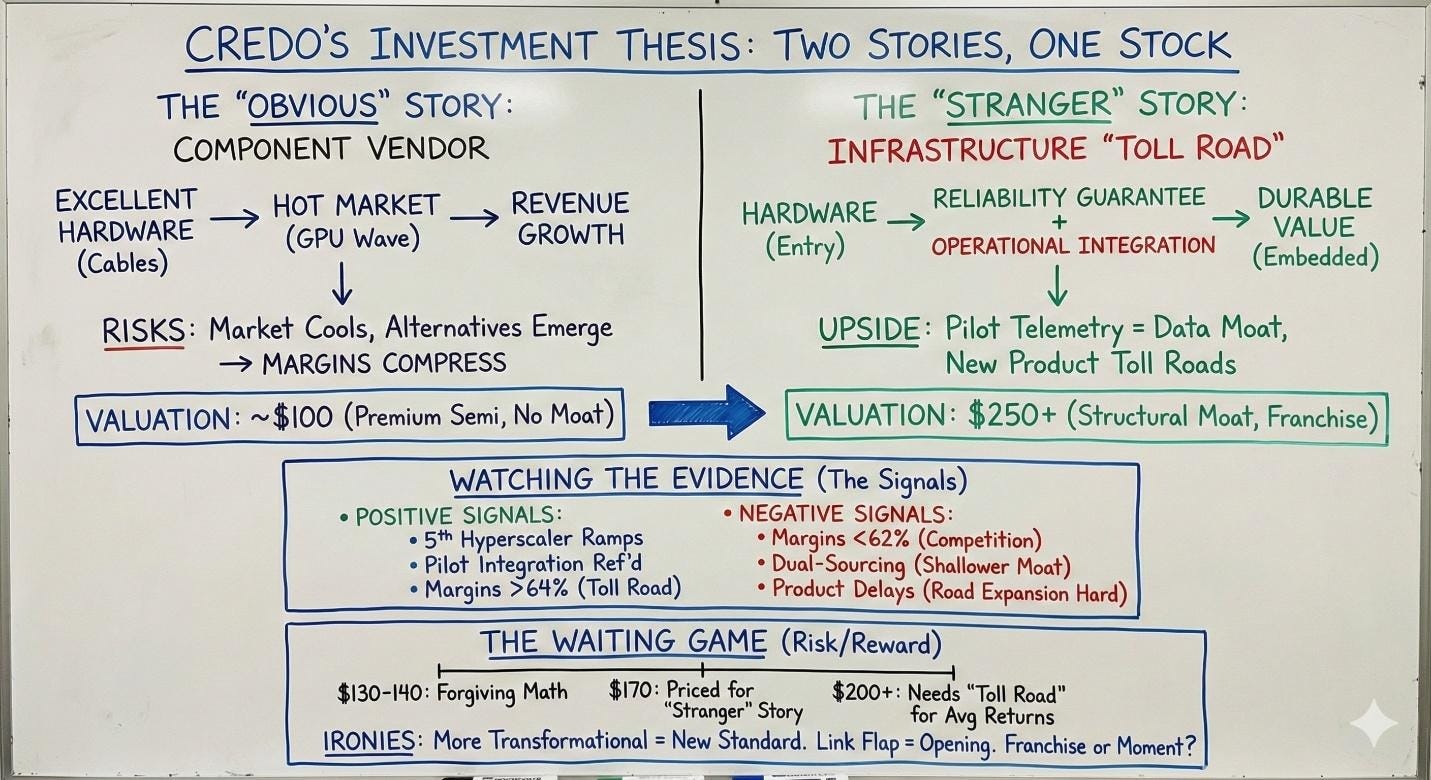

Two Stories, One Stock

The question these developments force is whether Credo is selling shovels or charging rent on the mine.

The obvious story says Credo builds excellent hardware for a hot market. When the market cools, growth normalizes. When alternatives emerge, margins compress. If it’s the obvious story, $100 seems about right—a premium semiconductor company with excellent timing but no structural moat.

The stranger story suggests Credo is building operational infrastructure that AI clusters will depend on regardless of which GPUs or networking protocols win. The hardware is the entry point; the reliability guarantee and operational integration are what create durable value. If it’s the stranger story, $250 might be conservative—and potentially much higher if the new product lines extend the toll road into adjacent domains.

At $170, the market has already voted that Credo is something special. The question is whether it’s special enough to justify the price, or whether the specialness is already fully reflected.
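One way to read "the market has already voted" is to ask what weight a $170 price puts on each story. The sketch below is a deliberately crude two-outcome model. It assumes the $100 and $250 figures are point values, ignores time and any outcome in between, and understates the upside if $250 really is conservative. The function names are hypothetical.

```python
def scenario_return(price: float, target: float) -> float:
    """Simple return from buying at `price` if the stock goes to `target`."""
    return target / price - 1


def implied_probability(price: float, low: float, high: float) -> float:
    """Weight on `high` at which the probability-weighted value equals `price`."""
    if not low < high:
        raise ValueError("low must be below high")
    return (price - low) / (high - low)


# Figures from the text: obvious story ~$100, stranger story ~$250, current price ~$170.
price, obvious, stranger = 170.0, 100.0, 250.0

downside = scenario_return(price, obvious)
upside = scenario_return(price, stranger)
p_stranger = implied_probability(price, obvious, stranger)

print(f"obvious story:   {downside:+.1%}")
print(f"stranger story:  {upside:+.1%}")
print(f"implied weight on the stranger story: {p_stranger:.1%}")
```

Under these assumptions, $170 corresponds to a little under even odds on the stranger story, with about 41% downside if the obvious story wins and about 47% upside if it does not.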

Watching the Evidence

So how do we know which story is right? Not by listening to analysts, but by watching what customers actually do.

If the fifth hyperscaler ramps to meaningful scale in fiscal 2027, the standard-setting dynamic is real. If customers begin referencing Pilot integration in their own technical communications, the operational embedding is happening. If gross margins hold above 64% through the 200G transition despite competition, the toll collection is structural.

What would undermine the thesis? If margins compress below 62% with “competitive pricing” language, the obvious story is correct. If major customers begin dual-sourcing with Broadcom or Marvell alternatives, the moat is shallower than it appears. If new product timelines slip repeatedly, the road expansion is harder than management suggests.
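The two gross-margin thresholds above can be written down as a simple rule of thumb. This is only a sketch of the margin signal, with hypothetical names. It reads a single reported margin, whereas the text asks for margins to hold through the whole 200G transition, and it treats the 62% to 64% band as inconclusive because the text does not assign it to either story.

```python
def read_margin_signal(gross_margin_pct: float,
                       competitive_pricing_language: bool = False) -> str:
    """Classify one reported gross margin against the thresholds in the text."""
    if gross_margin_pct > 64.0:
        return "toll-road"          # margins holding: supports the structural story
    if gross_margin_pct < 62.0 and competitive_pricing_language:
        return "obvious-story"      # compression plus pricing language: supports the cycle story
    return "inconclusive"           # in between, or compression without the language


# The 67.7% figure is the gross margin reported in the text; the others are hypothetical.
print(read_margin_signal(67.7))
print(read_margin_signal(61.0, competitive_pricing_language=True))
print(read_margin_signal(63.0))
```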

The most important signal is the one hardest to track: whether Pilot telemetry becomes embedded in how hyperscalers operate their AI infrastructure. If switching away from Credo would require rebuilding operational workflows—not just swapping cables—then the toll road is real. If Credo remains easily substitutable once alternatives reach qualification, it’s just a component company with good timing.

The Waiting Game

The hardest part of investing isn’t the analysis. It’s waiting for the price that makes the analysis matter.

At $170, Credo is priced for the stranger story to be true. If you’re confident it is, the current price may be reasonable. If you’re uncertain—and the honest answer is that the Pilot thesis remains unproven—then waiting for a price that compensates for that uncertainty makes sense.

Somewhere around $130–140, the math becomes more forgiving. At that level, even the obvious story offers acceptable returns, and the stranger story offers exceptional ones. Above $200, the risk-reward inverts: you need the toll road to be real just to generate market-average returns.

The thesis is clear. What remains is the discipline to wait for the opportunity that matches the analysis.

The irony is that the more transformational Credo becomes, the more it resembles the thing it’s replacing: not a component vendor fighting for design wins, but the new standard that everything else must conform to. The link flap created the opening. What Credo builds in that opening will determine whether this is a moment or a franchise.


Disclaimer:

The content does not constitute any kind of investment or financial advice. Kindly reach out to your advisor for any investment-related advice. Please refer to the tab “Legal | Disclaimer” to read the complete disclaimer.