Microsoft 1QFY26: Jagged Intelligence & the Control Premium

Microsoft's Q1 results prove that in the chaotic era of AI, enterprises will pay a premium for control.

TL;DR

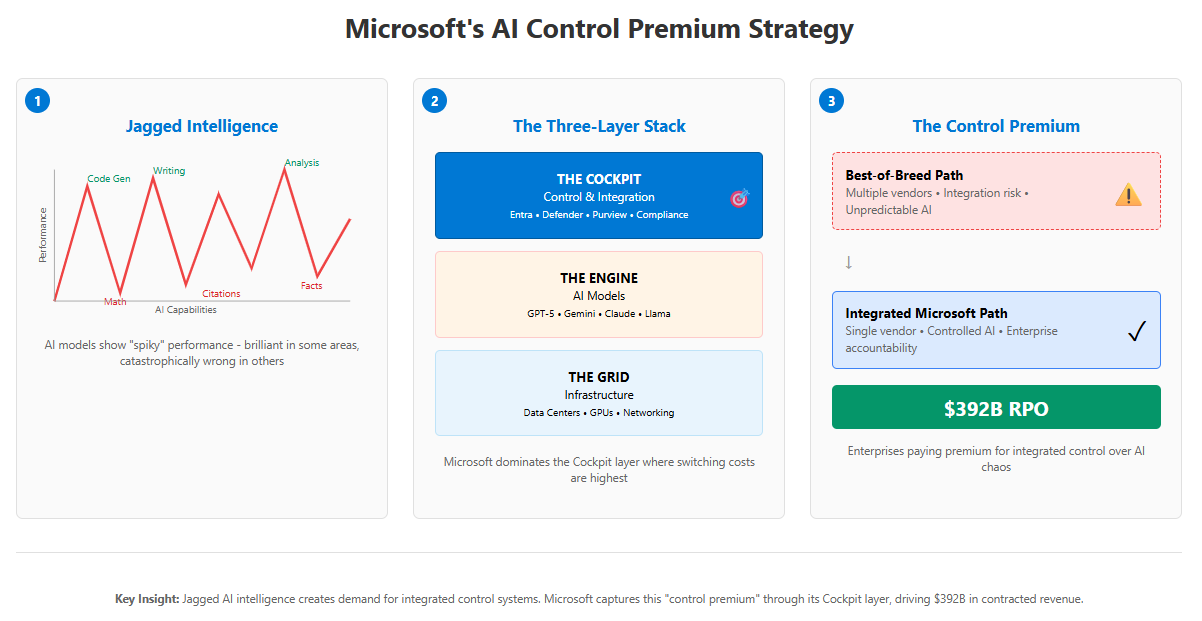

The “Great Re-Integration”: Microsoft’s blockbuster Q1 results—including 112% bookings growth and a $392B backlog—prove the AI era is forcing enterprises to abandon “best-of-breed” fragmentation. They are now paying a massive premium for a single, integrated, and accountable partner to control the risks of “jagged intelligence.”

The “Control Premium” is the Real Moat: Microsoft’s strategy is not just about winning the infrastructure (Grid) or model (Engine) race. It’s about dominating the “Cockpit”—the integrated layer of security, identity, and compliance. This is where enterprises pay a “Control Premium” to safely deploy AI, creating a deeper lock-in than raw performance or cost.

Capital as a Weapon: Microsoft’s staggering $35B quarterly CapEx, funded entirely by its massive cash flow, is not an expense; it’s a strategic weapon. It’s an industrial-scale consolidation play designed to make the price of competition economically irrational for all but 2-3 players, echoing the playbooks of historical infrastructure barons.

The Server & Tools Playbook

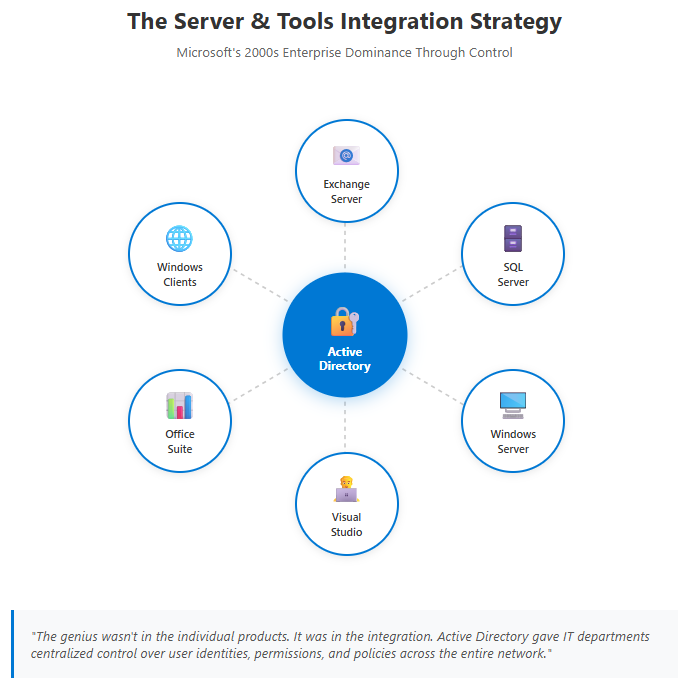

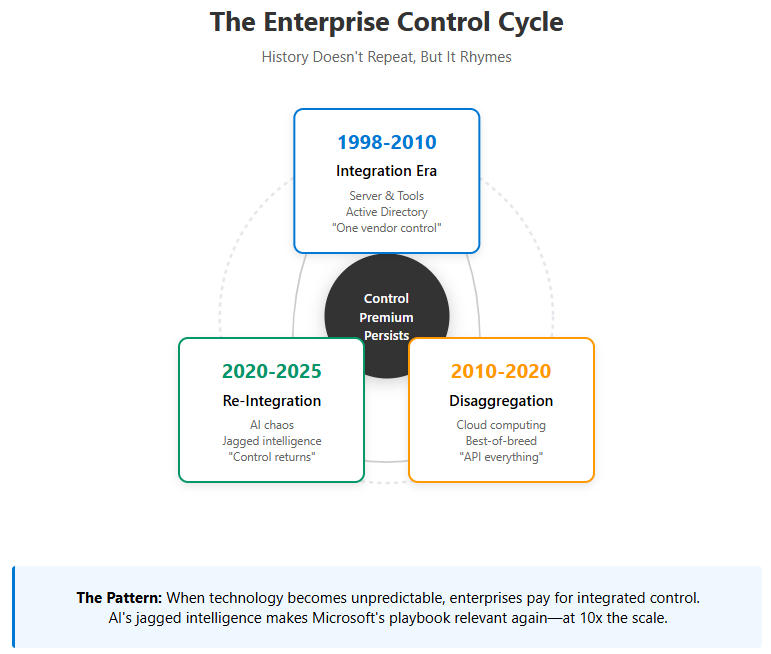

In 1998, Microsoft had a problem. Unix systems dominated enterprise computing, and competitors like Sun Microsystems and Oracle controlled the high-margin server market. Bill Gates responded with a strategy that would define Microsoft’s next two decades: Windows 2000 Server, paired with SQL Server, Exchange, and Active Directory.

The genius wasn’t in the individual products. It was in the integration. Active Directory gave IT departments centralized control over user identities, permissions, and policies across the entire network. Exchange connected to Outlook, which connected to Windows desktops, which authenticated through Active Directory. SQL Server stored the data that powered everything. Visual Studio was the development environment that kept developers building on Microsoft’s stack.

The system worked because it solved the enterprise’s most fundamental problem: control. CIOs didn’t need the fastest Unix workstation or the most elegant Oracle database. They needed a single vendor they could hold accountable when something broke at 3 AM. They needed integrated security that worked across email, file servers, and databases. They needed systems that reduced complexity rather than adding it.

Microsoft won the 2000s not by having the best individual components, but by selling control. The “Server & Tools” division became a $20+ billion annual revenue machine not because it was more technically impressive than competitors, but because it was integrated.

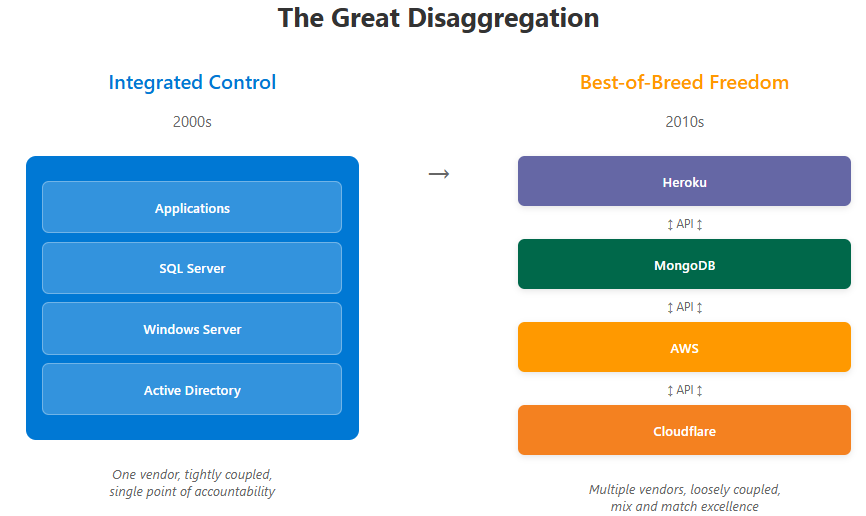

Then cloud computing arrived, and the playbook stopped working.

The Great Re-Integration

The first decade of cloud was defined by disaggregation. AWS proved you could separate compute, storage, and networking into distinct services with clean APIs between them. Developers loved it. You could use S3 for storage, Heroku for apps, MongoDB for databases, and Cloudflare for CDN. Best-of-breed won. Integration lost.

Microsoft’s Azure strategy adapted by becoming “developer-friendly”—offering comparable services to AWS while maintaining enterprise relationships. It worked well enough to build a $75 billion run-rate business. But it was fundamentally defensive. The cloud era wasn’t playing to Microsoft’s historical strength: selling integrated control.

The AI era is different.

As Satya Nadella described on Microsoft’s Q1 earnings call, AI models have “jagged intelligence.” A model might be brilliant at writing code but catastrophically wrong at basic arithmetic. It might generate perfect marketing copy but hallucinate legal citations. The intelligence is “spiky”—extraordinary capabilities mixed with unpredictable failures.

This creates a fundamental enterprise problem. You cannot deploy jagged intelligence directly into production systems without catastrophic risk. A hallucinated legal document isn’t a feature request; it’s a liability that costs the company millions. An AI that occasionally leaks customer data isn’t acceptable at any price.

The solution isn’t better models. OpenAI’s GPT-5 is more capable than GPT-4, but it’s still jagged. Google’s Gemini is impressive but unpredictable. Every frontier model shares this characteristic—extraordinary capability paired with fundamental unreliability.

The solution is integration. You need systems that smooth the jagged edges: guardrails that catch hallucinations, security layers that prevent data leaks, audit trails that track every AI decision, and compliance frameworks that satisfy regulators. You need, in other words, the same thing enterprises needed in 1998: control.
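Those control layers can be pictured as a wrapper that inspects model output before it reaches a user. The sketch below is a minimal, hypothetical illustration, not any vendor’s implementation: the verified-citation set and the SSN regex stand in for a real citation database and data-loss-prevention service, and names such as `check_output` and `AUDIT_LOG` are invented for the example.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical allowlist standing in for a lookup against a real case-law database.
VERIFIED_CITATIONS = {"Smith v. Jones, 123 F.3d 456"}

# Illustrative patterns: federal-reporter-style citations and SSN-like identifiers.
CITATION_PATTERN = re.compile(r"[A-Z][a-z]+ v\. [A-Z][a-z]+, \d+ F\.\dd \d+")
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")

# In-memory audit trail; a production system would use durable, tamper-evident storage.
AUDIT_LOG: list = []


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reasons: list = field(default_factory=list)


def check_output(user: str, text: str) -> GuardrailResult:
    reasons = []
    # Guardrail 1: flag any citation that cannot be verified (a likely hallucination).
    for cite in CITATION_PATTERN.findall(text):
        if cite not in VERIFIED_CITATIONS:
            reasons.append(f"unverified citation: {cite}")
    # Guardrail 2: redact sensitive identifiers so they never reach the user.
    redacted = SSN_PATTERN.sub("[REDACTED]", text)
    if redacted != text:
        reasons.append("sensitive identifier redacted")
    # Unverified citations block the output; redaction alone does not.
    allowed = not any(r.startswith("unverified citation") for r in reasons)
    # Audit trail: record who asked, when, what was decided, and why.
    AUDIT_LOG.append({
        "user": user,
        "time": datetime.now(timezone.utc).isoformat(),
        "allowed": allowed,
        "reasons": list(reasons),
    })
    return GuardrailResult(allowed, redacted if allowed else "", reasons)


print(check_output("alice", "Per Smith v. Jones, 123 F.3d 456, the clause holds."))
print(check_output("alice", "Per Doe v. Roe, 999 F.3d 1, the clause fails."))
print(check_output("bob", "Customer SSN is 123-45-6789."))
```

The design point is the division of labor: the model is never trusted to police itself. The wrapper makes the release decision, and every decision leaves a record.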

Microsoft’s Q1 FY26 results are the definitive proof that the Great Re-Integration is happening. The numbers aren’t just good. They’re receipts.

The Receipts of Re-Integration

From Bloomberg, October 29, 2025: “Microsoft Corp. reported revenue of $77.7 billion for its fiscal first quarter, topping Wall Street expectations. The company’s closely watched cloud-services unit, which includes its Azure business, grew by 40%, exceeding expectations.”

My first thought reading these results wasn’t about the beat—it was about what the magnitude of certain numbers reveals about customer behavior.

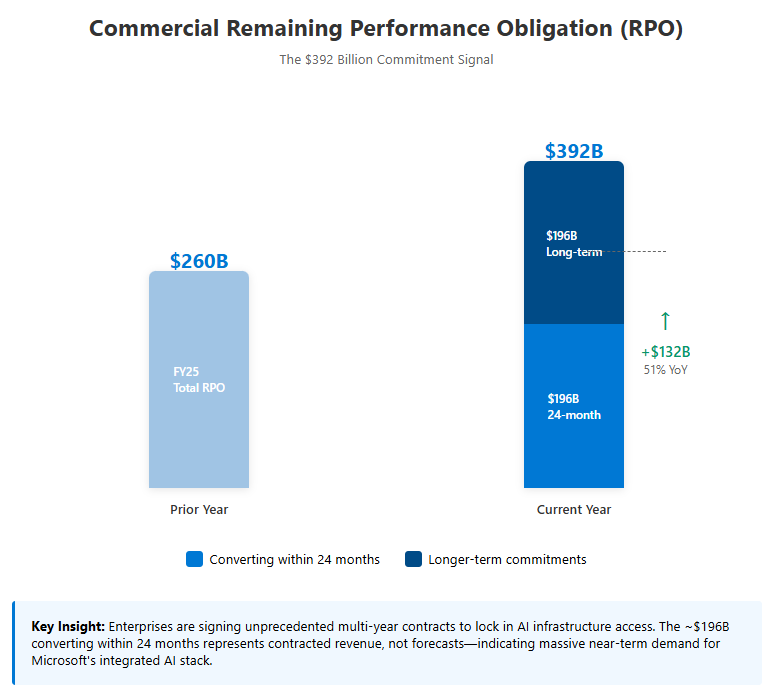

Exhibit 1: The $392 Billion Commitment

Commercial Remaining Performance Obligation reached $392 billion, growing 51% YoY. This isn’t typical enterprise software growth. This is customers signing multi-year contracts at unprecedented scale to lock in access to Microsoft’s AI infrastructure.

The weighted average duration is roughly 2 years. As a rough rule of thumb, that implies about half of the backlog, approximately $196 billion, converts to revenue over the next 24 months. The obligations themselves are contracted, not forecast, even if the exact conversion schedule is an estimate. Bloomberg consensus models show $294 billion total FY26 revenue. Either Cloud revenue accelerates sharply or the consensus model is wrong.
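Treating half of the backlog as converting within 24 months is an approximation. A short sketch, using made-up recognition schedules rather than Microsoft disclosures, shows that very different schedules can share the same 2-year weighted average duration:

```python
# Hypothetical recognition schedules: {years until recognized: relative weight}.
# None of these are Microsoft disclosures; they only share a 2-year duration.
RPO_BILLIONS = 392.0  # commercial RPO from the Q1 FY26 report


def weighted_duration(schedule: dict) -> float:
    # Dollar-weighted average time until revenue is recognized, in years.
    total = sum(schedule.values())
    return sum(t * w for t, w in schedule.items()) / total


def share_within(schedule: dict, horizon_years: float) -> float:
    # Fraction of the backlog recognized at or before the horizon.
    total = sum(schedule.values())
    return sum(w for t, w in schedule.items() if t <= horizon_years) / total


schedules = {
    "even over 3 years": {1.0: 1, 2.0: 1, 3.0: 1},
    "early, long tail": {1.0: 3, 3.5: 2},
    "back-loaded": {0.5: 1, 2.5: 3},
}

for name, sched in schedules.items():
    converted = share_within(sched, 2.0) * RPO_BILLIONS
    print(f"{name:>18}: duration {weighted_duration(sched):.1f}y, "
          f"~${converted:.0f}B within 24 months")
```

All three schedules have a 2.0-year duration, yet 24-month conversion ranges from about $98 billion to about $261 billion. Duration alone bounds the average, not the timing.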

More revealing: commercial bookings grew 112% year-over-year. Bookings typically grow roughly in line with revenue (15-20% annually). When bookings growth hits triple digits, something structural has changed in customer behavior.

What changed is enterprises capitulating to the Great Re-Integration. They’re not evaluating five cloud providers and choosing the best price. They’re signing long-term commitments with Microsoft to secure access to the integrated AI stack they believe they’ll need.

Exhibit 2: The $34.9 Billion Price Tag

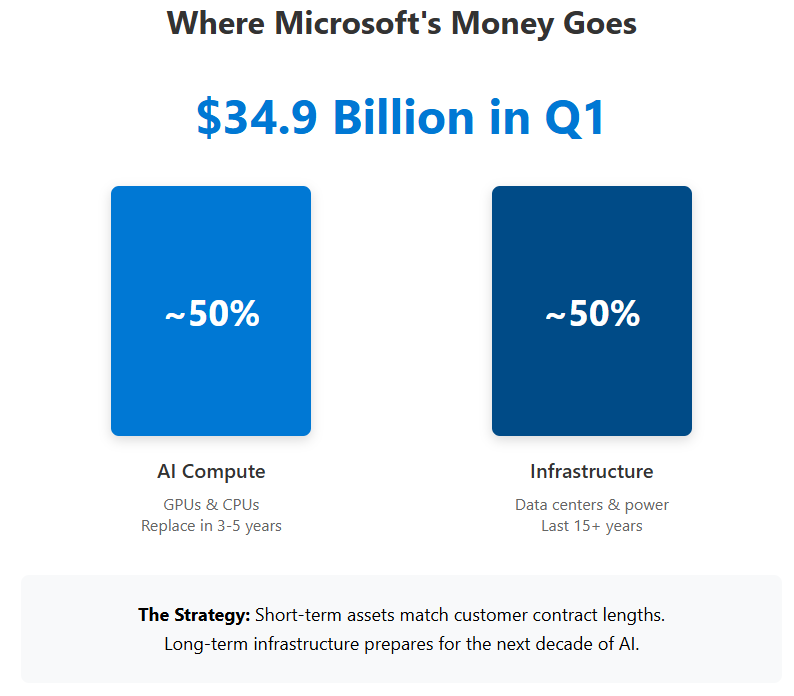

Capital expenditures reached $34.9 billion in Q1, up 74% YoY. The composition matters. Roughly 50% went to short-lived assets—primarily GPUs and CPUs—that depreciate over 3-5 years. These are the engines that process AI workloads. The remaining $17+ billion went to long-lived infrastructure: data centers, power infrastructure, networking gear that supports the fleet for 15+ years.

The $11.1 billion in finance leases is particularly telling. These are commitments for massive data center sites that Microsoft is securing now to support monetization over the next decade and beyond.

This isn’t speculative investment. Microsoft is matching asset lifetimes to contract durations. The $392 billion RPO with ~2 year average duration justifies the short-lived GPU/CPU spending. The long-term infrastructure spending anticipates renewal cycles and expansion.

CFO Amy Hood made this explicit: “Short-lived assets generally are done to match the duration of the contracts or the duration of your expectation of those contracts.”

Exhibit 3: The Margin Signal

Microsoft Cloud gross margin compressed from 71% to 68%. Skeptics worried this indicates permanent margin pressure from low-margin AI infrastructure. I read it differently.

The compression is largely mix shift: Azure growing 40% versus M365 growing 17% means the lower-margin business takes a larger share of revenue. But look at what’s not happening: operating margin rose about 2 points year-over-year, to 49%.
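A toy two-segment calculation shows how mix shift alone can lower a blended margin. The revenue and margin inputs are hypothetical round numbers chosen for clarity; only the 40% and 17% growth rates come from the quarter.

```python
def blended_margin(segments: dict) -> float:
    # segments maps name -> (revenue, gross margin); returns revenue-weighted margin.
    revenue = sum(rev for rev, _ in segments.values())
    gross_profit = sum(rev * m for rev, m in segments.values())
    return gross_profit / revenue


# Hypothetical starting point: equal revenue, different margins.
before = {"azure_like": (100.0, 0.55), "m365_like": (100.0, 0.80)}

# Grow each segment at the Q1 rates while holding each segment's margin fixed.
growth = {"azure_like": 0.40, "m365_like": 0.17}
after = {k: (rev * (1 + growth[k]), m) for k, (rev, m) in before.items()}

print(f"before: {blended_margin(before):.1%}")  # 67.5%
print(f"after:  {blended_margin(after):.1%}")   # 66.4%
```

Neither segment got less profitable, yet the blended figure fell by about a point, because the faster-growing segment carries the lower margin.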

More importantly, Microsoft guided Q2 operating margins as “relatively flat year-over-year” while absorbing 74% CapEx growth and scaling AI workloads dramatically. That implies new infrastructure is being converted into revenue fast enough to hold margins steady, even at unprecedented investment levels.

The guidance to stay “relatively flat” while spending $35 billion quarterly on infrastructure is remarkable. It suggests pricing power and efficiency gains offsetting cost increases.

The Cash Engine That Funds the Empire

There’s a financial story beneath the strategy that deserves attention: Microsoft is self-funding this entire build-out.

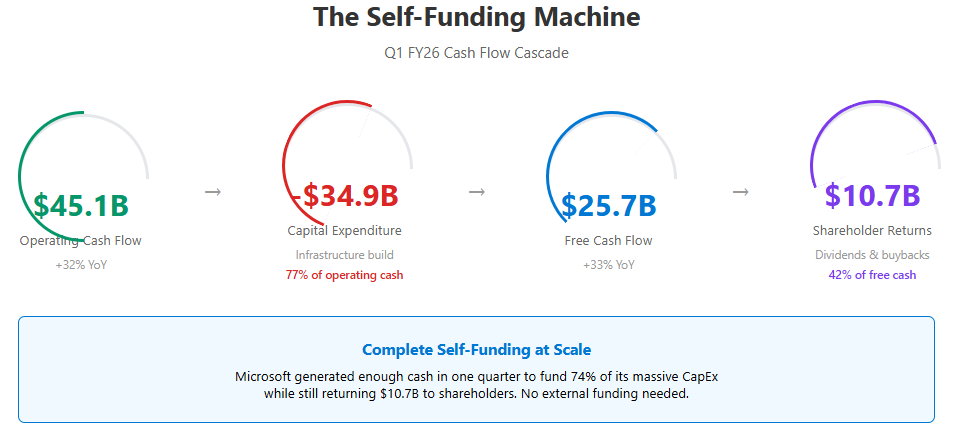

Cash flow from operations reached $45.1 billion in Q1, up 32% year-over-year. Free cash flow hit $25.7 billion, up 33%. Operating cash flow alone exceeded the quarter’s entire $34.9 billion of reported CapEx, and after paying cash for property and equipment, Microsoft still returned $10.7 billion to shareholders through dividends and buybacks.

This matters because the investment cycle is just beginning. Hood guided FY26 CapEx growth “higher than FY25,” implying a $100+ billion annual run rate. FY27 will likely be “significantly higher.” The question isn’t whether Microsoft can afford this—operating cash flow runs $150+ billion annually—but whether competitors can match it.

Google can. They’re guiding $91-93 billion for 2025 with similar self-funded cash generation. AWS likely can, since Amazon’s retail business generates massive cash flow. But the list stops there.

The gap between cash paid for property and equipment ($19.4 billion) and accounting CapEx ($34.9 billion) reflects finance lease timing and equipment not yet paid for. Over time these converge, but the core point stands: Microsoft’s business generates sufficient cash to fund this infrastructure race without capital markets dependency.
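The figures quoted in this section reconcile with simple arithmetic. The sketch below uses only numbers from the text. Attributing the residual after finance leases to unpaid equipment and other timing differences is an assumption based on the explanation above, not a breakdown Microsoft reports.

```python
# Q1 FY26 figures from the text, in $ billions.
operating_cash_flow = 45.1
cash_paid_for_ppe = 19.4     # cash actually spent on property and equipment
reported_capex = 34.9        # accounting CapEx, including finance leases
finance_leases = 11.1
shareholder_returns = 10.7   # dividends plus buybacks

free_cash_flow = operating_cash_flow - cash_paid_for_ppe
capex_gap = reported_capex - cash_paid_for_ppe
other_timing = capex_gap - finance_leases   # assumed: unpaid equipment, other timing
ocf_coverage = operating_cash_flow / reported_capex
retained_after_returns = free_cash_flow - shareholder_returns

print(f"Free cash flow:              ${free_cash_flow:.1f}B")          # $25.7B
print(f"CapEx not yet paid in cash:  ${capex_gap:.1f}B")               # $15.5B
print(f"  of which finance leases:   ${finance_leases:.1f}B")          # $11.1B
print(f"  residual timing/accruals:  ${other_timing:.1f}B")            # $4.4B
print(f"OCF / reported CapEx:        {ocf_coverage:.0%}")              # 129%
print(f"FCF left after returns:      ${retained_after_returns:.1f}B")  # $15.0B
```

Operating cash flow covers about 129% of reported CapEx, which is the self-funding claim stated as a ratio.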

This is the financial foundation that makes the strategic plays possible.

The Three-Layer Stack

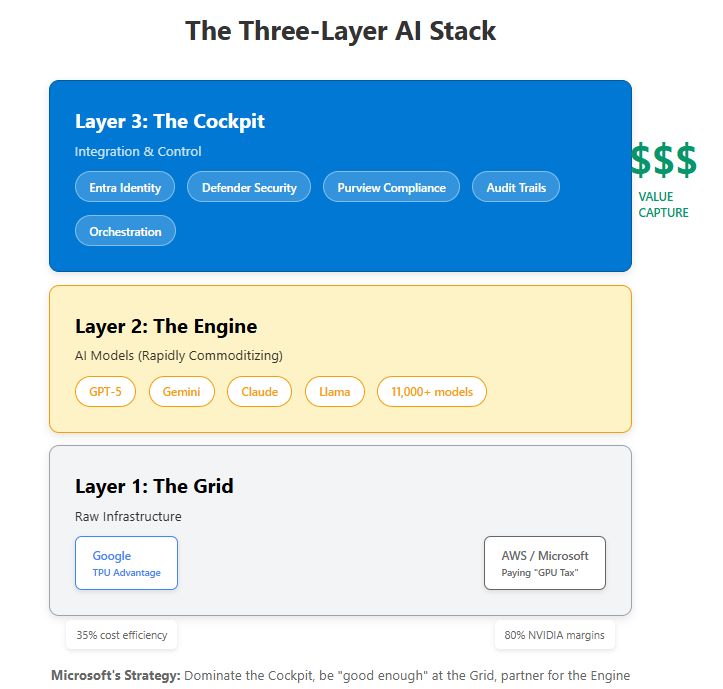

To understand Microsoft’s strategy, you need a framework for how AI infrastructure actually works. Think of it as three distinct layers:

Layer 1: The Grid (Infrastructure)

This is raw compute: data centers, power, networking, GPUs. It’s measured in tokens processed per dollar and watts consumed. Google has a structural advantage here through custom TPU chips that avoid paying NVIDIA’s 70%-plus gross margins. Microsoft and AWS both pay the “GPU tax.”

Layer 2: The Engine (Models)

This is the AI models themselves—GPT-5, Gemini, Claude, Llama. The layer is rapidly commoditizing. Microsoft’s Azure AI Foundry offers 11,000+ models. Google’s Vertex AI offers similar breadth. AWS Bedrock positions as the neutral platform for all models. No single player owns this layer because customers demand choice.

Layer 3: The Cockpit (Integration & Control)

This is where Microsoft wins. The cockpit includes identity management (Entra), security frameworks (Defender, Purview), compliance tooling, audit trails, and orchestration systems that make AI production-ready. It’s the layer that manages jagged intelligence.

Microsoft’s entire strategy is to dominate the Cockpit while being “good enough” at the Grid and partnering for the Engine. They’re willing to pay NVIDIA’s GPU tax because they’re capturing value at Layer 3 where switching costs are highest.

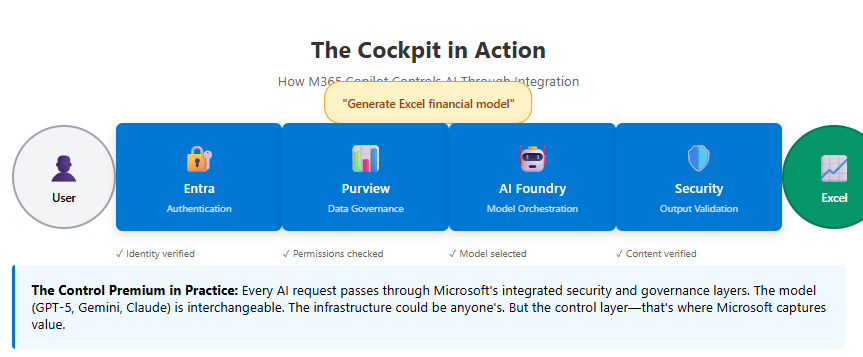

Consider a simplified view of how an M365 Copilot request flows. A user asks Copilot to generate an Excel financial model. The request passes through:

Entra for authentication

Purview for data governance

Azure AI Foundry for model orchestration

Back through security layers for output validation

Into Excel with formatting that matches company standards
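The request flow above can be sketched as a chain of checks wrapped around an interchangeable model. This is a hypothetical illustration, not how Copilot is built: it calls no Entra, Purview, or Foundry API, and every name (`authenticate`, `govern`, `validate`, `CLEARANCE`, the sample token and documents) is invented. Each function stands in for one step in the list, in order.

```python
from dataclasses import dataclass
from typing import Callable

# Any engine (model) is just a function from prompt to text in this sketch.
Model = Callable[[str], str]

# Hypothetical identity and data-classification stores.
VALID_TOKENS = {"alice": "t-123"}
CLEARANCE = {"alice": {"public", "internal"}}


@dataclass
class Request:
    user: str
    token: str
    prompt: str
    documents: list  # (sensitivity_label, text) pairs available as grounding


def authenticate(req: Request) -> None:
    # Step 1 stand-in for an identity provider check.
    if VALID_TOKENS.get(req.user) != req.token:
        raise PermissionError("authentication failed")


def govern(req: Request) -> tuple:
    # Step 2 stand-in for data governance: split documents by the user's clearance.
    cleared = CLEARANCE.get(req.user, set())
    permitted = [text for label, text in req.documents if label in cleared]
    withheld = [text for label, text in req.documents if label not in cleared]
    return permitted, withheld


def validate(output: str, withheld: list) -> str:
    # Step 4 stand-in for output validation: block any leak of withheld content.
    for text in withheld:
        if text in output:
            raise ValueError("output contains data the user may not see")
    return output


def format_rows(output: str) -> list:
    # Step 5 stand-in for house formatting: one trimmed, non-empty row per line.
    return [line.strip() for line in output.splitlines() if line.strip()]


def handle(req: Request, model: Model) -> list:
    authenticate(req)
    permitted, withheld = govern(req)
    # Step 3: orchestration; only permitted context ever reaches the model.
    output = model("\n".join([req.prompt, *permitted]))
    return format_rows(validate(output, withheld))


def echo_model(prompt: str) -> str:
    # Trivial stand-in engine; any model can be swapped in without touching the controls.
    return prompt


req = Request("alice", "t-123", "Build a revenue model",
              [("internal", "Q1 revenue: 77.7"), ("secret", "Deal target: Contoso")])
print(handle(req, echo_model))  # ['Build a revenue model', 'Q1 revenue: 77.7']
```

Because the model is only a callable, swapping engines leaves authentication, governance, and validation untouched. A model that somehow emitted the withheld text would be stopped at the validation step rather than reaching the spreadsheet.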

The model (Engine) could be GPT-5, Gemini, or Claude—doesn’t matter. The infrastructure (Grid) could be Microsoft’s or partially Google’s—doesn’t matter. What matters is the Cockpit that makes it work reliably in production.

This is where the control premium gets captured.

Reading Between the Lines

Two details from the earnings report and call reveal more than what was said explicitly.

The Efficiency Paradox

Satya mentioned that Microsoft achieved 30% throughput improvements on GPT-4.1 and GPT-5 through software optimization. These gains make each GPU 30% more productive without additional hardware cost.

Standard logic: if throughput improved 30%, infrastructure needs should decline proportionally. Instead, CapEx grew 74%.

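This implies demand is growing faster than efficiency improvements can offset. Much faster. If each GPU does 30% more work and spending still grows 74%, effective capacity grows roughly 1.74 × 1.30 ≈ 2.26 times, assuming spending translates proportionally into hardware. Underlying workload demand would then be growing 100%+ annually.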
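The back-of-the-envelope step can be made explicit. This minimal sketch assumes CapEx growth is a fair proxy for fleet growth, which it is not exactly: CapEx is a flow of new spending, capacity is the installed stock, and part of the spend goes to long-lived sites rather than chips. The second line shows how sensitive the conclusion is to that assumption.

```python
def implied_capacity_growth(capex_growth: float, efficiency_gain: float) -> float:
    # Assumes spending growth buys proportionally more hardware and the
    # per-GPU software gain applies across the whole fleet.
    return (1 + capex_growth) * (1 + efficiency_gain) - 1


# Figures from the text: CapEx +74% YoY, per-GPU throughput +30%.
print(f"{implied_capacity_growth(0.74, 0.30):.0%}")  # 126%

# Sensitivity: if only half of the CapEx growth became new accelerators.
print(f"{implied_capacity_growth(0.37, 0.30):.0%}")  # 78%
```

Even the conservative case implies workload growth well beyond the 30% efficiency gain, which is the core of the argument; the exact multiple depends on how much spending turns into compute.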

Microsoft cannot disclose demand growth rates this explicitly without revealing pricing power to customers. But the math is unavoidable.

The Constraint Extension

In Q4 FY25 earnings, Microsoft guided that capacity constraints would last through the first half of the fiscal year. In Q1, Hood revised this to “constrained through at least the end of our fiscal year,” extending the timeline by two quarters.

This happened despite deploying $35 billion in infrastructure. Either supply chains deteriorated significantly (TSMC, HBM, power infrastructure), or demand accelerated beyond internal models.

The short ~2-year RPO duration signals customers believe capacity will be available within contract terms. They’re not signing desperate 5-year lockups because they think scarcity is permanent. They’re signing 2-year commitments confident in Microsoft’s ability to deliver but wanting to secure their place in line.

The combination—extended constraints despite massive spending, paired with short-duration high-growth RPO—suggests extraordinary demand growth that Microsoft is racing to meet.

The Control Premium

Here’s what Microsoft is actually selling: control over jagged intelligence.

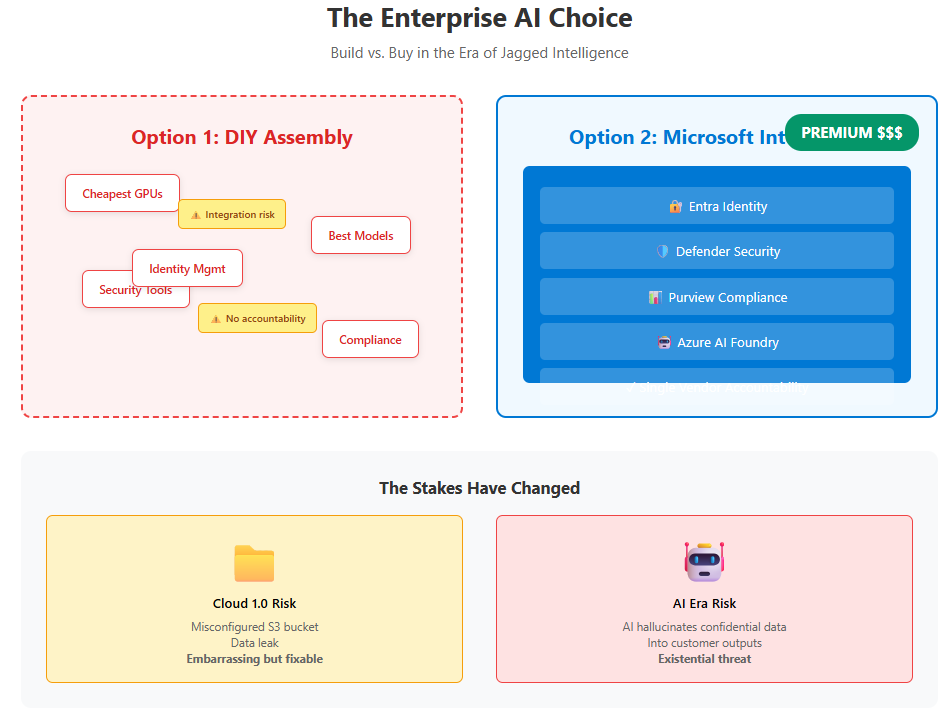

The Control Premium is what enterprises pay for integrated systems that manage AI’s unpredictability. It’s the price difference between assembling best-of-breed components yourself and accepting a fully integrated solution from a single vendor.

Enterprises face a choice. They can:

Assemble best-of-breed components (cheapest GPUs, best models, separate security tools)

Accept an integrated system from a single vendor at a premium price

In cloud 1.0, assembling best-of-breed components won. In the AI era, the integrated option is winning. The reason is risk.

A misconfigured S3 bucket that leaks data is embarrassing and fixable. An AI that occasionally hallucinates confidential information into customer-facing outputs is an existential threat. The jaggedness of AI intelligence makes integration a necessity, not a luxury.
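One simple way to frame that choice is risk-adjusted cost: sticker price plus the expected loss from AI failures. The sketch below uses invented numbers purely to show the mechanics; none come from Microsoft or the earnings report.

```python
def risk_adjusted_cost(price: float, incident_prob: float, incident_loss: float) -> float:
    # Annual sticker price plus the expected cost of AI failures.
    return price + incident_prob * incident_loss


def break_even_premium(p_assembled: float, p_integrated: float, incident_loss: float) -> float:
    # Largest premium worth paying: the expected loss the integrated stack avoids.
    return (p_assembled - p_integrated) * incident_loss


# Hypothetical inputs in $ millions per year; illustrative only.
INCIDENT_LOSS = 50.0
ASSEMBLED_PRICE, ASSEMBLED_RISK = 10.0, 0.20
INTEGRATED_PRICE, INTEGRATED_RISK = 13.0, 0.05

assembled = risk_adjusted_cost(ASSEMBLED_PRICE, ASSEMBLED_RISK, INCIDENT_LOSS)
integrated = risk_adjusted_cost(INTEGRATED_PRICE, INTEGRATED_RISK, INCIDENT_LOSS)
ceiling = break_even_premium(ASSEMBLED_RISK, INTEGRATED_RISK, INCIDENT_LOSS)

print(f"premium paid:        ${INTEGRATED_PRICE - ASSEMBLED_PRICE:.1f}M")  # $3.0M
print(f"assembled, all-in:   ${assembled:.1f}M")                           # $20.0M
print(f"integrated, all-in:  ${integrated:.1f}M")                          # $15.5M
print(f"break-even premium:  ${ceiling:.1f}M")                             # $7.5M
```

Under these assumptions the buyer pays a $3 million premium to avoid $7.5 million of expected loss. The larger the potential loss from jagged output, the higher the premium a rational buyer will accept.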

The control premium shows up as:

Higher Azure pricing versus comparable AWS/Google compute

M365 Copilot ARPU expansion from $20/user baseline to $50+ with AI features

Azure AI Foundry charging orchestration fees beyond raw model costs

Microsoft is building the capability to capture small percentages across massive transaction volumes. Every AI-generated email, every code commit through GitHub Copilot, every security decision enhanced by Defender AI—these become micro-transactions where Microsoft extracts value through its Cockpit layer.

The Q1 results validate that this works. The 112% bookings growth and $392 billion RPO prove enterprises are willing to pay the premium.

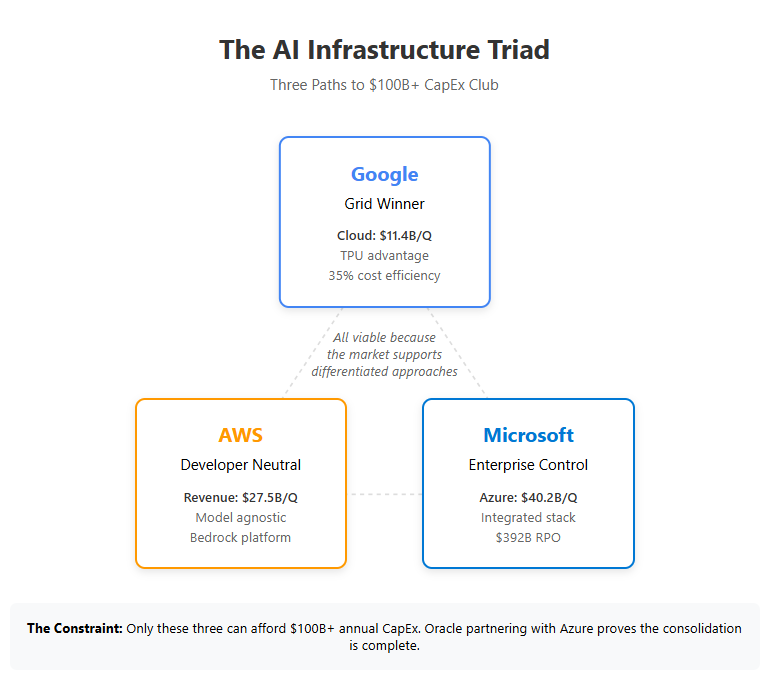

The Competitive Context

This positions Microsoft clearly within the emerging structure. Google wins the Grid through TPU economics—processing tokens 35% cheaper than NVIDIA-dependent competitors. AWS wins among developers wanting neutrality and avoiding vendor lock-in. Microsoft wins the Boardroom by selling control.

All three are viable because the market is large enough to support differentiated approaches. The constraint is capital—$100 billion annual CapEx eliminates everyone else. Oracle partnering with Azure rather than competing proves consolidation is complete.

The strategic tension is whether the control premium persists long-term or whether cost advantages eventually dominate. That plays out over 5-10 years. For now, customer behavior indicates integration is winning.

What to Watch

Four metrics determine if the thesis holds:

RPO Growth & Duration: The $392 billion growing 51% with ~2 year duration is the lead indicator. If growth sustains above 35-40% with stable duration, the Great Re-Integration is real. Deceleration below 25% growth signals demand saturation or competitive pressure.

Microsoft Cloud Gross Margin Direction: Current 68% reflects mix shift to Azure. Recovery toward 70-72% over the next 6-8 quarters indicates software efficiency gains (like the 30% GPU improvement) flowing through as margin expansion. Further compression below 66% suggests structural margin pressure.

Azure Growth Rate vs RPO Conversion: Azure growing 40% while RPO grows 51% means bookings outpace revenue recognition—positive for future quarters. If both decelerate together, demand is slowing. If RPO conversion accelerates but bookings slow, it signals near-term strength masking weakening demand.

Free Cash Flow vs CapEx Trajectory: Current free cash flow of $25.7 billion on $34.9 billion CapEx demonstrates financial sustainability. Watch the gap. If CapEx grows to $40+ billion quarterly while FCF stays flat, it signals either deteriorating ROIC or capital markets dependency emerging. Widening FCF while maintaining CapEx pace validates the self-funding model.
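The four checks above can be encoded as a simple quarterly scorecard. This is a hypothetical sketch: the thresholds follow the text, but treating in-between values as “neutral” is an assumption, and it scores a single quarter, leaving out the multi-quarter trends and RPO duration that the text also calls for.

```python
from dataclasses import dataclass


@dataclass
class QuarterMetrics:
    rpo_growth: float          # YoY growth in commercial RPO
    azure_growth: float        # YoY Azure revenue growth
    cloud_gross_margin: float  # Microsoft Cloud gross margin
    quarterly_capex: float     # reported CapEx, $ billions
    fcf_growth: float          # YoY free cash flow growth (0.0 means flat)


def thesis_signals(q: QuarterMetrics) -> dict:
    # Thresholds follow the text; "neutral" bands in between are an assumption.
    signals = {}
    if q.rpo_growth >= 0.35:
        signals["rpo"] = "supports"
    elif q.rpo_growth < 0.25:
        signals["rpo"] = "warning"
    else:
        signals["rpo"] = "neutral"

    if q.cloud_gross_margin >= 0.70:
        signals["margin"] = "supports"
    elif q.cloud_gross_margin < 0.66:
        signals["margin"] = "warning"
    else:
        signals["margin"] = "neutral"

    # RPO growing faster than Azure means bookings outpace recognition: read as positive.
    signals["conversion"] = "supports" if q.rpo_growth > q.azure_growth else "neutral"

    # Growing FCF supports; $40B+ quarterly CapEx with flat or falling FCF warns.
    if q.fcf_growth > 0:
        signals["cash"] = "supports"
    elif q.quarterly_capex >= 40:
        signals["cash"] = "warning"
    else:
        signals["cash"] = "neutral"
    return signals


q1_fy26 = QuarterMetrics(rpo_growth=0.51, azure_growth=0.40,
                         cloud_gross_margin=0.68, quarterly_capex=34.9, fcf_growth=0.33)
print(thesis_signals(q1_fy26))
# {'rpo': 'supports', 'margin': 'neutral', 'conversion': 'supports', 'cash': 'supports'}
```

Fed Q1 FY26 figures from this report, the scorecard reads three supports and one neutral, with gross margin sitting inside the band the text describes as mix shift.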

Control is Everything

Twenty-seven years ago, Microsoft won enterprise computing by selling control. Active Directory, Exchange, and Windows Server weren’t the fastest or cheapest solutions. They were integrated, accountable, and reduced complexity for IT departments managing thousands of users across disparate systems.

Cloud computing changed the game by commoditizing infrastructure. Best-of-breed disaggregation won because components were reliable enough to mix and match. Integration’s value proposition weakened.

AI is changing it back. Jagged intelligence reintroduces fundamental unreliability that makes integration essential. You cannot safely deploy systems that are brilliant yet unpredictable without control layers that catch failures before they reach production.

Microsoft’s Q1 FY26 wasn’t just a strong earnings beat. It was quantitative proof that customers are voting for re-integration with their wallets. The $392 billion RPO represents enterprises choosing a single, accountable partner to manage AI’s chaos. The $34.9 billion CapEx represents Microsoft building the integrated infrastructure to deliver on those commitments. The 112% bookings growth represents the market capitulating to this reality.

The technology has changed. The models are transformer-based, not rule-based. The infrastructure is cloud-native, not on-premises. But the fundamental enterprise need, control over complex, business-critical systems, hasn’t changed at all.

Microsoft is executing the same playbook that built Server & Tools into a $20+ billion business. They’re just doing it at $75 billion scale with AI instead of directories and databases. The control premium is alive. The Great Re-Integration is happening. And Q1 proved it’s not theory—it’s revenue.

Disclaimer:

The content does not constitute any kind of investment or financial advice. Kindly reach out to your advisor for any investment-related advice. Please refer to the tab “Legal | Disclaimer” to read the complete disclaimer.
