MongoDB and the Memory of Machines

From developer rebellion to AI retrieval: a new fight for data, control, and margins

TL;DR

MongoDB won the last data revolution by freeing developers from rigid schemas—but the next battle is about how machines remember, not just how data is stored.

AI applications demand hybrid retrieval—combining semantic vector search, structured filters, and real-time operational data under tight governance. MongoDB bets that integration will beat specialist speed.

The outcome will hinge on margin resilience and AI attach rates: If MongoDB proves its unified stack drives real-world adoption and sustains software-like economics, it could re-bundle the AI data layer. Otherwise, it risks being commoditized by cloud vendors and “good enough” defaults.

The First Data Revolt

In the spring of 2012, a small team of engineers at a New York startup faced a familiar problem. Their user-generated content application was drowning in database complexity. Every time a product manager asked for a new feature—adding user preferences, nested comments, flexible metadata—the developers faced the same ritual: sketch out new SQL tables, write migration scripts, update object-relational mappers, coordinate deployment windows, pray nothing broke.

MongoDB offered an escape. Instead of forcing messy, hierarchical data into rigid tables, its document model let developers store information the way their applications naturally thought about it: as JSON objects. No migrations, no ORM hell, no database administrator gatekeepers. The productivity gain was immediate and visceral.

This was MongoDB’s original value proposition: remove the friction between how developers think and how databases work. It succeeded spectacularly. By 2015, MongoDB had become the default choice for a generation of web applications, powering everything from mobile backends to content management systems. The company rode the wave of “NoSQL” adoption, went public in 2017, and built a multi-billion dollar business on a simple insight: developers will choose tools that make them faster.

But that was solving yesterday’s constraint—how we store data. Today, as artificial intelligence reshapes software, a new constraint has emerged: how machines recall information accurately, securely, and instantly to make decisions. This shift—from storage to retrieval, from recording to remembering—defines MongoDB’s current challenge and opportunity.

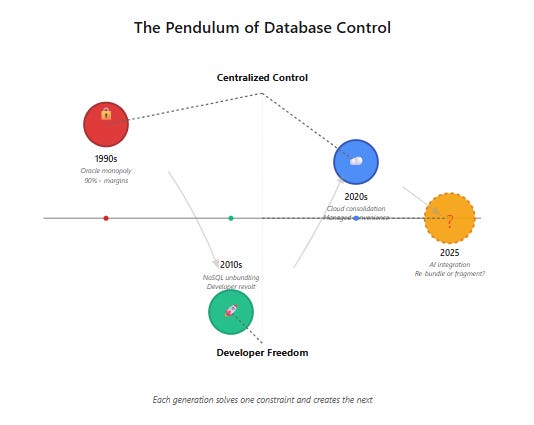

The Cycle of Control and Freedom

To understand where MongoDB sits today requires understanding the pendulum swing of database history.

The Oracle bargain.

In the 1980s and 1990s, enterprises faced chaos: data trapped in incompatible mainframe systems, no way to answer cross-departmental questions, no single source of truth. Oracle Database offered salvation through the relational model—a unified, standardized way to organize information with SQL as its universal language. Companies eagerly signed up.

The cost of this order was steep. Oracle charged premium prices (gross margins above 90%, operating margins near 45%) and enterprises had no alternative once their data accumulated. More subtly, the rigid schema requirements meant every business change required database changes, creating bottlenecks. IT departments became gatekeepers. Agility suffered. But for 20 years, the trade-off seemed worth it: reliability and consistency in exchange for speed and flexibility.

The unbundling.

The internet era shattered this equilibrium. Applications needed to evolve daily, not quarterly. Data structures were messy and nested (user profiles, social graphs, clickstreams), not the clean rows and columns that relational databases expected. A new generation of developers, armed with open-source tools and running on cheaper commodity hardware, started building specialized databases optimized for specific jobs.

MongoDB emerged as the champion of this developer revolt. Its document model was both technically superior for web workloads and philosophically aligned with developer autonomy. No longer would the database dictate application design; applications would choose databases that fit their needs.

By the mid-2010s, the enterprise database landscape had fragmented: MongoDB for documents, Elasticsearch for search, Redis for caching, Neo4j for graphs, Cassandra for wide-column workloads such as time-series. Developers had freedom. But that freedom came with new complexity: managing a dozen specialized systems, keeping them synchronized, securing them individually, stitching together queries across boundaries.

The cloud’s gravity.

Enter AWS, Azure, and Google Cloud. The hyperscalers didn’t reverse the unbundling—they commoditized it. They offered managed versions of every tool on a single bill with infinite scalability. The value proposition shifted from which database to who operates it.

This created strategic pressure on independent database companies. Why pay MongoDB separately when AWS offers DocumentDB (a MongoDB-compatible service) with one-click deployment and integrated billing? The convenience of consolidation began pulling developers back toward the clouds’ gravitational center. MongoDB’s response was Atlas, its own managed cloud service running on top of AWS, Azure, and GCP infrastructure—effectively reselling the hyperscalers’ compute while adding a management layer.

This set up an uneasy dynamic. MongoDB depends on cloud providers for infrastructure but competes with their database offerings. The question that defines MongoDB’s current position: Can it maintain software-like economics (70%+ gross margins) while essentially arbitraging cloud infrastructure costs?

The New Battlefield: How Machines Remember

The emergence of AI and large language models has created yet another inflection point.

AI applications require something fundamentally different from traditional software: they must answer questions by retrieving relevant information from vast datasets, not just reading or writing records. A customer service chatbot needs to find similar past tickets (semantic search), filter by customer tier and date range (structured queries), and do it all within 200 milliseconds while respecting access controls (governance).

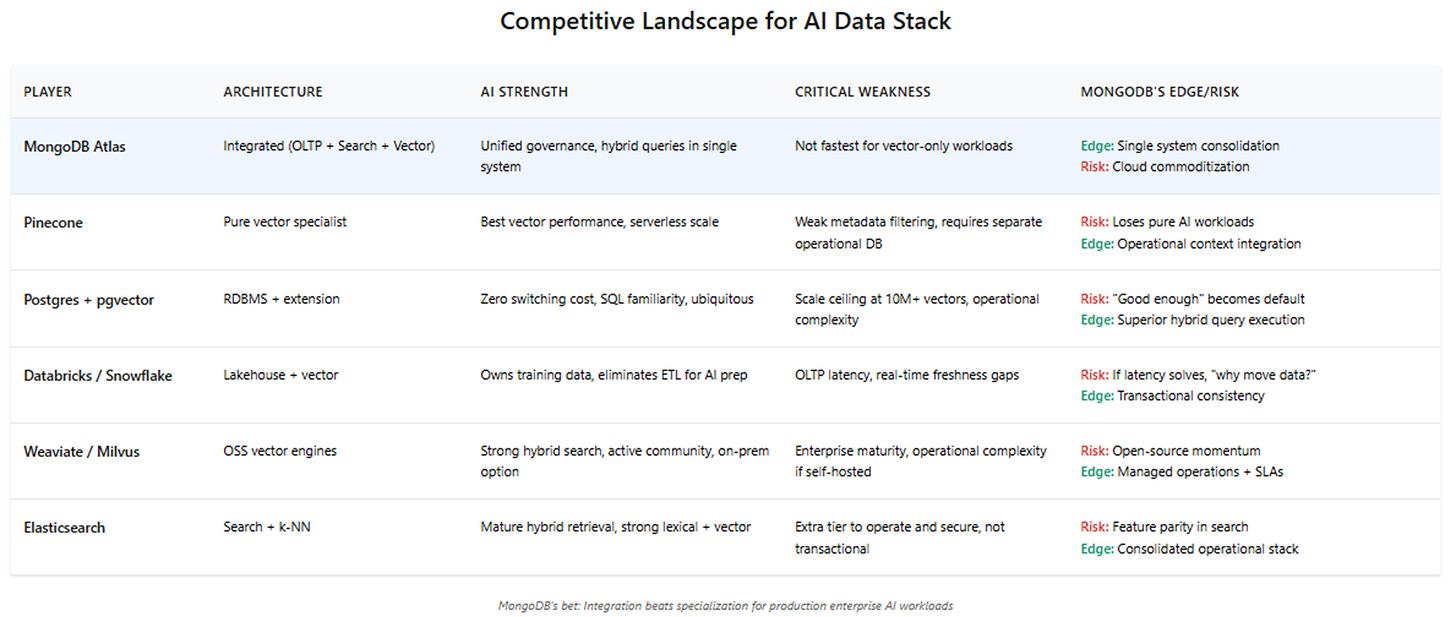

This hybrid requirement—combining vector similarity search with traditional database filtering and policy enforcement—has exposed a gap in the current tool landscape. Three competing approaches have emerged:

The Integrated System — MongoDB’s bet is that production AI applications need operational data, search, and vector retrieval unified in a single governed system. One query, one security model, one consistency guarantee. Atlas combines its document store with Atlas Search (full-text) and Atlas Vector Search, all queryable together. The advantage: simplicity and governance. The trade-off: not the absolute fastest at any single dimension.

The Specialist Stack — Pure-play vector databases like Pinecone, Weaviate, and Milvus offer superior performance for similarity search. They’re architected from the ground up for high-dimensional embeddings, optimized for billion-vector scale. The advantage: best-in-class vector performance. The trade-off: enterprises must run a separate system alongside their operational database, duplicating security policies, managing data synchronization, and accepting additional latency for metadata filtering.

The Default Incumbent — PostgreSQL with the pgvector extension offers “good enough” vector capabilities inside the database that most developers already know. Combined with JSONB for semi-structured data, it covers 70-80% of use cases. The advantage: zero switching cost, SQL familiarity, one less system to manage. The trade-off: scaling vector search to millions of documents requires careful tuning, and performance lags purpose-built solutions.

The strategic question underlying all of this: Does competitive advantage accrue to the system with the best individual component (fastest vector index) or the best integration (unified operational and retrieval)?

MongoDB is betting on integration. Its thesis: AI applications in production enterprises care more about governance, freshness, and operational simplicity than benchmark victories. A financial services firm building an AI-powered trading system needs to ensure that retrieved context respects real-time permissions, complies with audit requirements, and reflects the absolute latest transaction state—all while maintaining sub-second latency. Stitching together multiple databases makes those guarantees harder to provide.

MongoDB’s Strategic Bet

Understanding MongoDB’s position requires looking at both its technical architecture and business economics.

The architectural play. MongoDB is building what it calls “operational and retrieval” capability in a single query layer. A developer can write:

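    db.transactions.aggregate([
      // Index name, vector field path, and numCandidates are placeholder values
      {$vectorSearch: {index: "vector_index", path: "embedding", queryVector: embedding, numCandidates: 100, limit: 10}},
      // Last 24 hours; assumes timestamp is stored as a Date
      {$match: {timestamp: {$gt: new Date(Date.now() - 86400000)}}},
      {$match: {customerTier: {$in: ["premium", "enterprise"]}}},
      {$match: {status: "active"}}
    ])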

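This executes semantic similarity search (finding transactions conceptually related to the query), time-based filtering (last 24 hours), attribute filtering (customer tier), and operational state (active status), all in one request, one security context, one consistency model. One caveat: $match stages placed after $vectorSearch filter the already-limited result set, so fewer than 10 documents may come back. The filter option of $vectorSearch applies such conditions before the similarity cut.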

Contrast this with a specialist stack: query Pinecone for similar vectors, extract IDs, query MongoDB for metadata, filter in application code, potentially missing recent updates or security changes. The latency overhead of multiple round-trips and the governance complexity of keeping policies synchronized are real production concerns.
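The two-step flow described above can be sketched in plain JavaScript with an in-memory stand-in for both stores. Everything here is hypothetical: the docs array, the cosine helper, and the topK function are illustrative names, not part of any MongoDB or Pinecone API. The sketch shows one concrete cost of filtering after the similarity step: the top-k cut happens before the filters run, so fewer than k rows may survive.

```javascript
// In-memory stand-in for a vector index plus a metadata store (illustrative data only).
const docs = [
  { id: 1, vec: [1.0, 0.0], tier: "premium",    status: "active" },
  { id: 2, vec: [0.9, 0.1], tier: "free",       status: "active" },
  { id: 3, vec: [0.8, 0.2], tier: "free",       status: "active" },
  { id: 4, vec: [0.6, 0.4], tier: "enterprise", status: "active" },
  { id: 5, vec: [0.1, 0.9], tier: "premium",    status: "closed" },
];

// Cosine similarity between two equal-length vectors.
function cosine(a, b) {
  let dot = 0, na = 0, nb = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    na += a[i] * a[i];
    nb += b[i] * b[i];
  }
  return dot / (Math.sqrt(na) * Math.sqrt(nb));
}

// Rank candidates by similarity to the query and keep the k best.
function topK(candidates, query, k) {
  return [...candidates]
    .sort((x, y) => cosine(y.vec, query) - cosine(x.vec, query))
    .slice(0, k);
}

// Business rules: paying tiers only, active records only.
const allowed = (d) =>
  ["premium", "enterprise"].includes(d.tier) && d.status === "active";
const query = [1.0, 0.0];

// Stitched flow: similarity first, metadata filter afterwards in application code.
const postFiltered = topK(docs, query, 3).filter(allowed).map((d) => d.id);

// Filter-aware flow: restrict to eligible documents, then rank.
const preFiltered = topK(docs.filter(allowed), query, 3).map((d) => d.id);

console.log(postFiltered); // [ 1 ]
console.log(preFiltered);  // [ 1, 4 ]
```

In this toy data set the three nearest neighbours include two free-tier records, so the stitched flow returns a single document while the filter-aware flow also surfaces document 4. The same effect applies to any pipeline that filters after the similarity cut, whichever system runs it.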

MongoDB has reinforced this bet with recent moves:

· Atlas Search and Vector Search are now available for self-managed deployments, addressing regulated industries that can’t use cloud services.

· The acquisition of Voyage AI brings state-of-the-art embedding models directly into the database, improving retrieval quality.

· Cloud prepayments and IPv4 address purchases aim to structurally reduce infrastructure costs, defending gross margins as the business shifts further toward consumption-based Atlas revenue.

The economic reality. Here’s where the story gets complicated. MongoDB’s current financials reveal tension between its growth narrative and margin structure.

Atlas, the managed cloud service, now represents 74% of revenue and growing. It carries gross margins around 73-75%—respectable for a cloud service but structurally lower than pure software. MongoDB is essentially reselling AWS, Azure, and GCP compute with a management layer and proprietary features. Every dollar of Atlas revenue includes roughly $0.25 in cloud infrastructure costs.

Enterprise Advanced (EA)—the high-margin (85-90%) self-managed license business—is shrinking. Management guides to “high single-digit decline” but the actual rate may be faster. Non-Atlas revenue grew only 7% YoY in the most recent quarter, and that number includes lower-margin services revenue. If EA is declining 12-15% annually, MongoDB is losing $40-50 million of its highest-margin revenue each year.

Meanwhile, AI features like vector search require more computational resources than traditional CRUD operations. Vector similarity calculations are compute-intensive, often requiring 2-3x the resources of document lookups. Yet MongoDB can’t charge a steep premium for “AI-enabled” Atlas—the market won’t bear it when Postgres+pgvector is free and specialists compete aggressively.

The question: Can gross margins hold at 73-75% as Atlas grows to 85% of revenue and AI workloads scale, or will they compress toward 68-70% as the mix shift and vector costs accumulate?

Management has identified levers: cloud vendor prepayment agreements (paying upfront for discounts), IPv4 address purchases (reducing network costs), and architectural optimizations. These could add 100-200 basis points to gross margin over the next several quarters. But they’re fighting against the structural headwind of losing high-margin EA revenue.
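The tug-of-war between mix shift and cost levers can be put into rough numbers. The sketch below is a back-of-envelope model, not management's math: it assumes segment margins stay constant, uses the midpoints of the ranges quoted above (Atlas at 74%, EA at 87.5%), and treats all non-Atlas revenue as EA-like, which overstates its margin because that segment also includes services. The function name mixShiftDragBps is made up for illustration.

```javascript
// Mix-shift drag on blended gross margin, in basis points.
// Blended margin = share * atlasGm + (1 - share) * otherGm, so moving share
// from shareNow to shareLater lowers it by (shareLater - shareNow) * (otherGm - atlasGm).
function mixShiftDragBps(shareNow, shareLater, atlasGm, otherGm) {
  return (shareLater - shareNow) * (otherGm - atlasGm) * 10000;
}

// Atlas share rising from 74% to 85% of revenue, margins at quoted midpoints.
const dragBps = mixShiftDragBps(0.74, 0.85, 0.74, 0.875);

// Cost levers per the text: 100 to 200 basis points of improvement.
const netLowBps = 100 - dragBps;
const netHighBps = 200 - dragBps;

console.log(dragBps.toFixed(1), netLowBps.toFixed(1), netHighBps.toFixed(1));
```

Under these assumptions the mix shift alone costs roughly 150 basis points, so the levers land somewhere between slightly short of and slightly ahead of the drag, before any extra compute cost from vector workloads is counted.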

The Variant View

Wall Street’s consensus view on MongoDB looks something like this: It’s a high-quality database company growing in the high-teens percentage range, well-positioned for AI workloads through vector search capabilities, with stable margins and a path to 20%+ operating margins by 2028. At $322 per share and 9.4x forward revenue, the market prices in sustained 17-18% growth with margin expansion from operating leverage.

The variant perspective starts from a different premise: The real battleground isn’t vector performance—it’s governed hybrid retrieval over live data.

Enterprise AI applications aren’t choosing databases based on vector benchmark leaderboards. They’re choosing based on whether the system can deliver trustworthy answers that respect complex business rules in production. Consider a healthcare AI assistant: it must retrieve relevant patient records (semantic search), filter by the doctor’s specialty and hospital system (relational constraints), exclude records the doctor isn’t authorized to see (policy enforcement), and reflect updates made in the last few minutes (operational consistency)—all while maintaining HIPAA compliance audit trails.

This problem is harder to solve with stitched-together systems. Pinecone provides the vector search, but the operational database (MongoDB, Postgres, etc.) holds the live state and permissions. Synchronizing them introduces consistency problems: What if permissions change between the vector query and the operational query? What if new data arrives? How do you maintain a unified audit log?
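The permission race can be made concrete with a toy model. The sketch below is hypothetical throughout: operationalAcl, sidecarAclSnapshot, and syncSidecar are illustrative names standing in for an operational database and a separately synchronized vector store, not any vendor's API.

```javascript
// Operational store: the source of truth for who may read which record.
const operationalAcl = new Map([["rec-1", new Set(["dr_a", "dr_b"])]]);

// The sidecar vector store keeps its own copy of the ACL, refreshed by a sync job.
let sidecarAclSnapshot = new Map();
function syncSidecar() {
  // Deep-copy each user set so later changes do not leak into the snapshot.
  sidecarAclSnapshot = new Map(
    [...operationalAcl].map(([id, users]) => [id, new Set(users)])
  );
}

function canRead(acl, user, id) {
  const users = acl.get(id);
  return users !== undefined && users.has(user);
}

syncSidecar();                              // sidecar is up to date
operationalAcl.get("rec-1").delete("dr_b"); // access revoked in the operational store

// Until the next sync, the two systems disagree about dr_b.
const staleAnswer = canRead(sidecarAclSnapshot, "dr_b", "rec-1"); // true (stale)
const liveAnswer = canRead(operationalAcl, "dr_b", "rec-1");      // false

syncSidecar();
const afterSync = canRead(sidecarAclSnapshot, "dr_b", "rec-1");   // false

console.log({ staleAnswer, liveAnswer, afterSync });
```

The window between the revocation and the next sync is where a retrieval layer can return context the user is no longer entitled to see. A single system that evaluates permissions at query time has no such window to manage.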

MongoDB’s architectural advantage—executing hybrid queries within a single ACID-compliant system with unified governance—matters more for production enterprise deployments than raw vector throughput. The company that publishes credible service-level objectives around hybrid retrieval (precision, latency, freshness) wins the enterprise budget.

The second piece of the variant view concerns margins. The consensus assumes MongoDB’s 73-75% gross margins remain stable. The variant sees compression to 68-70% by 2028 as EA dies faster than disclosed and vector workloads scale without pricing power.

But—and this is critical—the variant also sees upside if MongoDB executes on its infrastructure cost levers. Cloud prepayments, IPv4 purchases, and improved instance placement could deliver 100-200 basis points of structural improvement. If that happens while AI features drive higher revenue per customer, the earnings trajectory changes dramatically. The market isn’t pricing this possibility.

The third element: AI attach rates. If vector search, Atlas Search, and stream processing features show up in 30%+ of new deals within 18 months, and if that attach comes at a revenue premium, then MongoDB isn’t just holding margins steady, it’s expanding them while accelerating growth. Management has been conservative in discussing AI monetization (“early days,” “not yet material”), but that conservatism creates opportunity if the numbers surprise.

The edge comes from this setup: The market prices a steady-state outcome (stable margins, moderate growth). The variant sees a binary: either margins compress 300-500 basis points (bear case, -30% downside) or AI attach validates the integration thesis and margins expand (bull case, +30% upside). The catalyst is simple: two consecutive quarters of gross margin expansion despite rising Atlas mix, combined with disclosure of AI feature adoption rates.

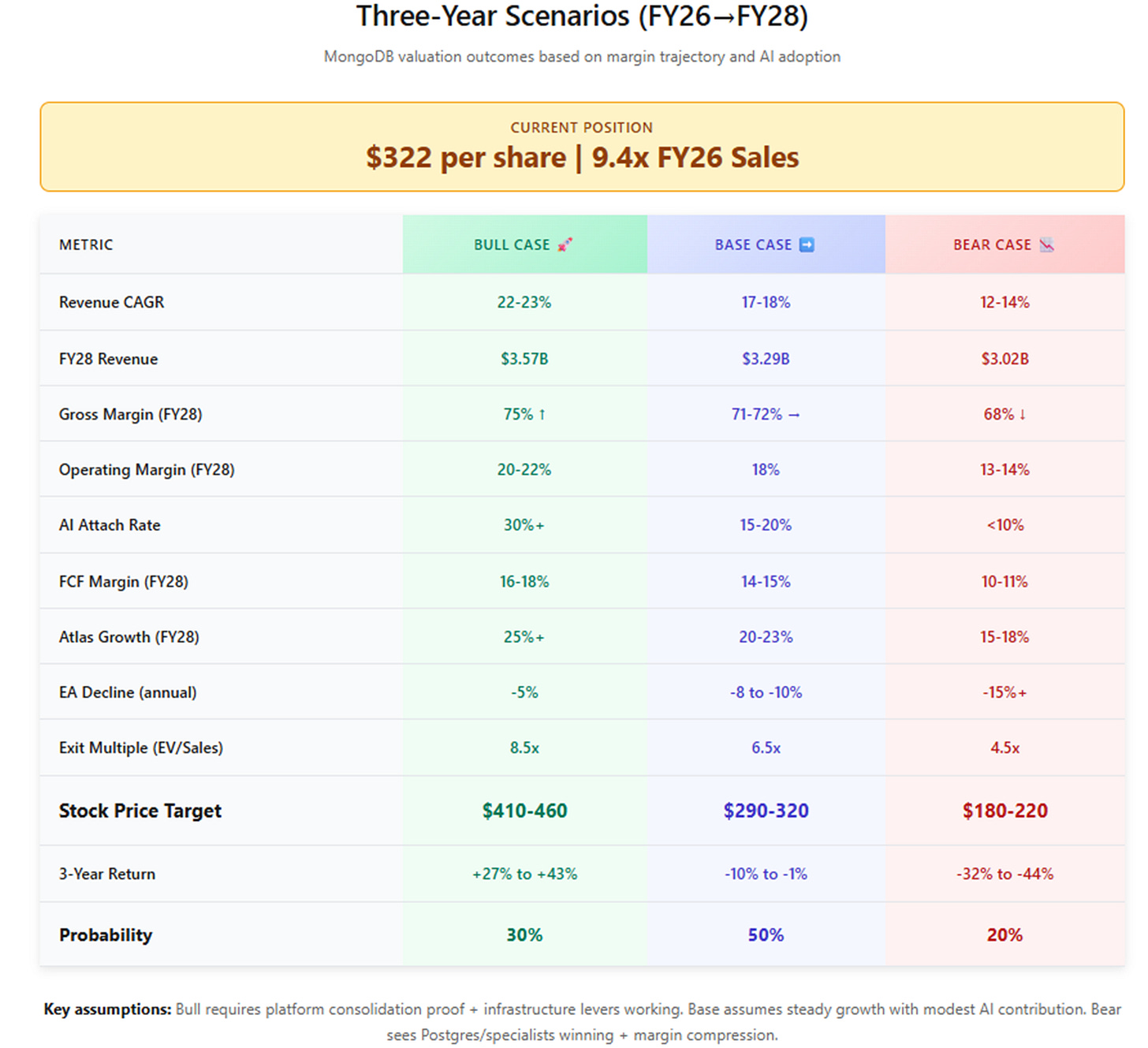

The Three Futures

Three scenarios capture MongoDB’s potential paths over the next three years to fiscal 2028.

Bull Case (30% probability): The Re-Bundling — $410-460

In this future, enterprise AI adoption validates MongoDB’s integration thesis. Companies standardize on unified operational-and-retrieval systems to reduce complexity and improve governance. AI features (vector search, Atlas Search, stream processing) attach to 30%+ of deals, driving revenue per customer higher. Simultaneously, cloud infrastructure levers work: prepayments and IPv4 purchases add 150-200 basis points to gross margins over two years.

The financial model: Revenue grows at a 22-23% compound annual growth rate from $2.36B (FY26) to $3.57B (FY28). Operating margins expand from 15% to 20%+ as the business demonstrates true operating leverage. Free cash flow reaches 16-18% of revenue. With net cash accumulating to $4.0B and 83 million shares outstanding, MongoDB earns an 8.5x EV/Sales multiple, a premium justified by the combination of growth and profitability. Stock price: $410-460, representing roughly 8-13% annual returns over three years from current levels.

Key assumptions: EA decline slows to 5% annually (regulated AI drives on-prem deployments). Atlas maintains 25%+ growth. Gross margins rise to 75%. Platform consolidation becomes real—customers actively remove Elasticsearch and Pinecone sidecars, proven by case studies.

Base Case (50% probability): The Steady Workhorse — $290-320

The most likely scenario is evolutionary, not revolutionary. MongoDB continues capturing operational database workloads and AI features gain traction, but the impact is moderate rather than transformative. AI attach reaches 15-20% of deals: visible but not game-changing. Gross margins hold near 71-72%, a touch below today’s 73-75% range, as infrastructure levers largely offset EA decline and vector costs but fall short of expanding margins.

Revenue grows at a 17-18% CAGR to $3.29B. Operating margins reach 18% through disciplined expense management. Free cash flow stabilizes at 14-15% of revenue. The business is healthy and profitable but not breaking out. With $3.4B net cash and 85M shares, the market assigns a 6.5x multiple, fair for a high-teens grower. Stock price: $290-320, roughly flat to slightly down from today.

Key assumptions: EA declines 8-10% annually (expected pace). Atlas grows 20-23% (durable but decelerating). Postgres+pgvector captures 25-30% of new AI projects. Lakehouses haven’t solved real-time serving latency. MongoDB remains a solid choice but not dominant.

Bear Case (20% probability): The Fragmented Future — $180-220

In the bearish outcome, specialization wins over integration. Pure-play vector databases prove too performant to ignore, while PostgreSQL’s “good enough” vector capabilities combined with SQL familiarity capture the mid-market. MongoDB gets squeezed: it loses high-value AI-native startups to specialists and loses “AI-enablement” projects to Postgres inertia.

More critically, gross margins compress. EA dies faster than expected (15%+ annual decline), vector workloads scale up without pricing power, and cloud vendors maintain pricing leverage. Gross margins fall to 68% despite mitigation efforts. Revenue growth slows to 12-14% CAGR, reaching only $3.02B. Operating margins stall at 13-14% as the company must keep investing in features to maintain relevance. Free cash flow reaches 10-11% of revenue—solid but unremarkable.

With $2.5B net cash, 88M shares, and a 4.5x multiple reflecting mature grower status, the stock falls to $180-220, an annual return of roughly -12% to -18% over three years. MongoDB remains a viable business serving operational workloads but cedes the AI platform opportunity.

Key assumptions: SQL/Python defaults dominate developer mindshare. Databricks and Snowflake achieve sub-100ms serving latency, allowing enterprises to avoid moving data. Open-source DocumentDB reaches feature parity. The database layer becomes interchangeable middleware rather than strategic high ground.
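The three price ranges above follow from the same arithmetic: apply the EV/Sales multiple to FY28 revenue, add net cash, and divide by the share count. The sketch below reproduces that calculation from the figures quoted in each scenario. The function names, the three-year horizon used for annualizing, and the use of range midpoints for the probability-weighted value are assumptions made for illustration.

```javascript
// Scenario inputs as quoted in the text (revenue and net cash in $B, shares in millions).
const scenarios = [
  { name: "bull", prob: 0.30, revenue: 3.57, multiple: 8.5, netCash: 4.0, shares: 83, low: 410, high: 460 },
  { name: "base", prob: 0.50, revenue: 3.29, multiple: 6.5, netCash: 3.4, shares: 85, low: 290, high: 320 },
  { name: "bear", prob: 0.20, revenue: 3.02, multiple: 4.5, netCash: 2.5, shares: 88, low: 180, high: 220 },
];

// Implied price = (EV/Sales multiple x revenue + net cash) / shares outstanding.
function impliedPrice(s) {
  const equityValueBn = s.multiple * s.revenue + s.netCash;
  return (equityValueBn * 1000) / s.shares; // $B to $M, divided by millions of shares
}

// Annualized return from a starting price to a target price over a number of years.
function annualizedReturn(start, target, years) {
  return Math.pow(target / start, 1 / years) - 1;
}

const currentPrice = 322;
for (const s of scenarios) {
  const price = impliedPrice(s);
  const r = annualizedReturn(currentPrice, price, 3);
  console.log(s.name, price.toFixed(0), (r * 100).toFixed(1) + "%");
}

// Probability-weighted value using the midpoint of each quoted price range.
const expectedValue = scenarios.reduce(
  (sum, s) => sum + (s.prob * (s.low + s.high)) / 2,
  0
);
console.log(expectedValue.toFixed(0));
```

With these inputs the implied prices come out near the bottom of each quoted range (about $414, $292, and $183), and the probability-weighted midpoint lands at about $323, essentially the current share price. That is consistent with the claim that the market is pricing a blend of the three outcomes rather than any one of them.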

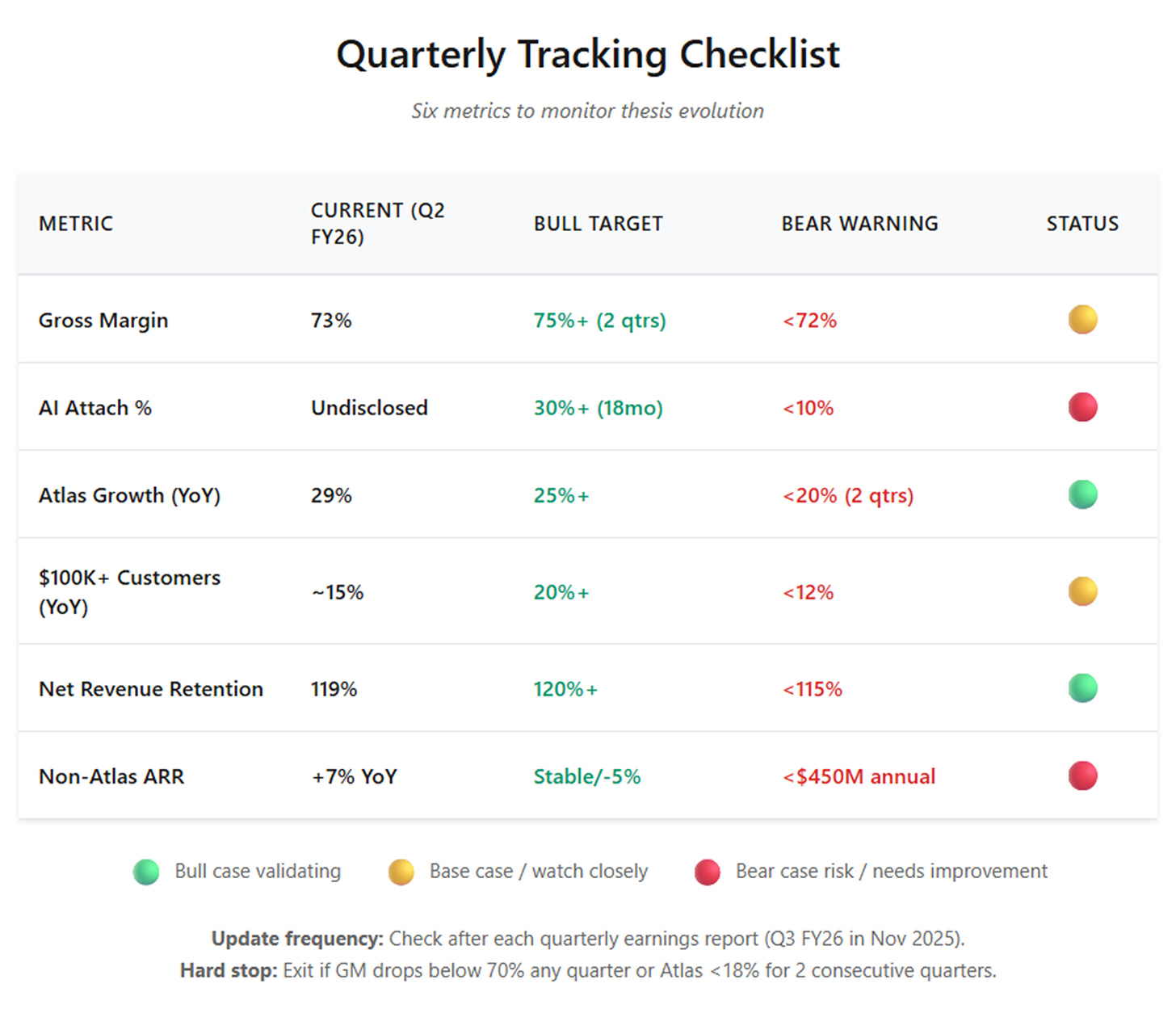

Markers to Watch

The virtue of this framework is its falsifiability. Within 18-24 months, specific metrics will reveal which scenario is playing out.

· AI Attach Rate. Management must disclose what percentage of new and expansion deals include Atlas Vector Search, Atlas Search, or stream processing. Target: 30%+ within 18 months validates the bull case. Roughly 15-20% suggests the base case. If it remains “immaterial” or undisclosed after three consecutive quarters, the bear case gains credibility.

· Gross Margin Trajectory. Watch for two consecutive quarters of 50-75 basis point sequential improvement despite rising Atlas mix. This would confirm that infrastructure levers (prepayments, IPv4, placement) are structural, not one-time. Conversely, if gross margins plateau or decline below 72% by mid-2026, margin compression is real.

· Retrieval Quality Metrics. MongoDB should publish service-level objectives for hybrid queries: P95 latency under 150 milliseconds for vector similarity combined with metadata filters, data freshness within 1 second, precision metrics for semantic search. These technical benchmarks establish competitive differentiation beyond marketing claims.

· Enterprise Displacement Evidence. Look for customer case studies showing companies removing Elasticsearch and Pinecone from their stacks in favor of MongoDB’s integrated approach. Absence of these stories after two years of product availability suggests the consolidation thesis isn’t resonating.

· Cohort Expansion Metrics. Post-FY25 customer cohorts should expand 5-8 percentage points faster at the 6-month and 12-month marks compared to FY24 cohorts, validating that the “workload quality” improvements management implemented are real.

· Developer Sentiment Tracking. Monitor Stack Overflow annual surveys and GitHub activity. If PostgreSQL’s ranking continues rising while MongoDB’s plateaus or falls, it signals a durable shift in developer defaults—especially among Python/data-science-oriented AI engineers.

· Lakehouse Latency Evolution. Databricks Mosaic AI and Snowflake Cortex are improving serving latency. If they achieve production-grade sub-100ms query times with policy enforcement by late 2026, the “why move your data” threat becomes real. MongoDB’s defense—transactional consistency and operational freshness—must remain demonstrably superior.
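The hybrid-query objectives named under Retrieval Quality Metrics are easy to check mechanically once latency and freshness samples exist. The sketch below is one way to do it, using the nearest-rank percentile definition and the thresholds from that marker (P95 under 150 ms, freshness within 1 second). The function names and the sample measurements are invented for illustration.

```javascript
// Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
function percentile(samples, p) {
  if (samples.length === 0) throw new Error("no samples");
  const sorted = [...samples].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(rank, 1) - 1];
}

// Thresholds taken from the text: P95 latency under 150 ms, freshness within 1 second.
const SLO = { p95LatencyMs: 150, maxStalenessMs: 1000 };

function meetsSlo(latencies, staleness) {
  return (
    percentile(latencies, 95) < SLO.p95LatencyMs &&
    Math.max(...staleness) <= SLO.maxStalenessMs
  );
}

// Made-up measurements for illustration only.
const latenciesMs = [40, 55, 60, 62, 70, 75, 80, 90, 95, 100,
                     105, 110, 115, 120, 125, 130, 135, 140, 145, 400];
const stalenessMs = [120, 300, 450, 800];

console.log(percentile(latenciesMs, 95));       // 145
console.log(meetsSlo(latenciesMs, stalenessMs)); // true
```

Note that the single 400 ms outlier does not breach the objective, because a P95 target tolerates one slow request in twenty. A published SLO therefore says little about tail behavior unless it also states a P99 or maximum.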

The Strategic Unknowns

Even with clear metrics, several questions remain genuinely open.

Economic: Can a business that resells cloud infrastructure maintain software-like gross margins? MongoDB’s 73-75% is impressive for a consumption model, but structurally lower than pure software companies’ 85-90%. The infrastructure prepayment strategy is clever, but does it deliver durable margin improvement or just defer costs? The answer determines whether MongoDB earns a software multiple or infrastructure multiple long-term.

Behavioral: Will AI developers embrace MongoDB’s document model and aggregation pipelines, or default to SQL they already know? The Python data science community has strong SQL muscle memory. MongoDB has excellent Python drivers, but SQL is what Jupyter notebooks expect. Can MongoDB close this developer experience gap with tooling and education, or is the cultural inertia too strong?

Competitive: Do hyperscalers ultimately co-opt MongoDB’s value by deeply integrating vector capabilities and governance into their native services? AWS could enhance DocumentDB with better vector support and unified IAM policies. If “good enough, deeply integrated, and included in our contract” becomes compelling, MongoDB’s multi-cloud neutrality advantage diminishes.

Organizational: Can MongoDB sell both legacy modernization and AI enablement simultaneously without confusing buyers? These are different conversations with different stakeholders. Legacy replacement is a multi-year, CIO-driven initiative. AI features are developer-driven and project-specific. Threading this needle—being simultaneously the future of AI and the escape route from Oracle—requires organizational dexterity.

The Crux — “The Memory of Machines”

The through-line connecting MongoDB’s origin story to its current moment is this: Every generation of database technology solves one constraint and reveals the next.

Relational databases solved the 1980s problem of data chaos, but their rigidity became a constraint in the internet era. MongoDB solved the 2010s problem of schema inflexibility, but the proliferation of specialized databases created operational complexity. The cloud solved the management burden, but at the cost of vendor dependence.

AI’s emergence reveals the new constraint: trustworthy recall. Machines must remember not just what happened, but what’s relevant, recent, and permitted—then deliver that context instantly to make decisions. This isn’t a storage problem or a search problem; it’s both simultaneously, with policy and governance woven throughout.

MongoDB’s opportunity is to become the system of record for machine memory—the layer where operational truth and semantic understanding converge under consistent rules. Its risk is that this vision proves too ambitious, and simpler solutions (SQL plus vector extensions, or stitched-together specialists) win through familiarity or performance.

Whether that vision earns software economics (70%+ gross margins, 20%+ operating margins, premium multiples) or infrastructure economics (mid-60s gross margins, mid-teens operating margins, infrastructure multiples) depends on execution against the markers above. The numbers will tell the story within two years.

For now, MongoDB stands at an inflection point remarkably similar to its breakout moment in the early 2010s. A decade ago, it freed developers from relational schema constraints and captured the wave of web application development. Today, it aims to free machines from distributed retrieval complexity and capture the wave of AI application development.

The question isn’t whether the problem exists—hybrid retrieval over governed, live data is real and urgent. The question is whether MongoDB’s integrated solution proves so compelling that enterprises pay a premium for it, or whether “good enough” alternatives and hyperscaler bundling compress its economic opportunity.

From $322 per share, the market prices uncertainty: not quite bullish enough to assume AI success, not quite bearish enough to assume commoditization. The investment case comes down to conviction that the markers described above will break positively—that when MongoDB reports earnings in 6, 12, 18 months, the data will show AI attach accelerating, margins expanding, and the integration thesis validating.

And perhaps that’s fitting. MongoDB bet on developers in 2012 when Oracle’s enterprise lock-in seemed impregnable. It’s betting on them again now, in a world where machines have begun to learn—but first, they must remember.

A decade ago, MongoDB freed developers from schemas. Now it must free machines from forgetting what matters—and keep the accountants happy while doing it.

Disclaimer:

The content does not constitute any kind of investment or financial advice. Kindly reach out to your advisor for any investment-related advice. Please refer to the tab “Legal | Disclaimer” to read the complete disclaimer.