NVIDIA 2QFY26 Earnings: The $7 Billion Bet That Made NVIDIA’s Future

From a “puzzling” Mellanox deal to $7.3B in quarterly networking revenue and 40–50% growth visibility into 2026

TL;DR:

Forward Growth Visibility: While investors fixated on 6% sequential growth, Jensen Huang's comments about "very significant forecasts" from large customers suggest continued strong growth ahead, though translating suggestive language into specific projections requires caution.

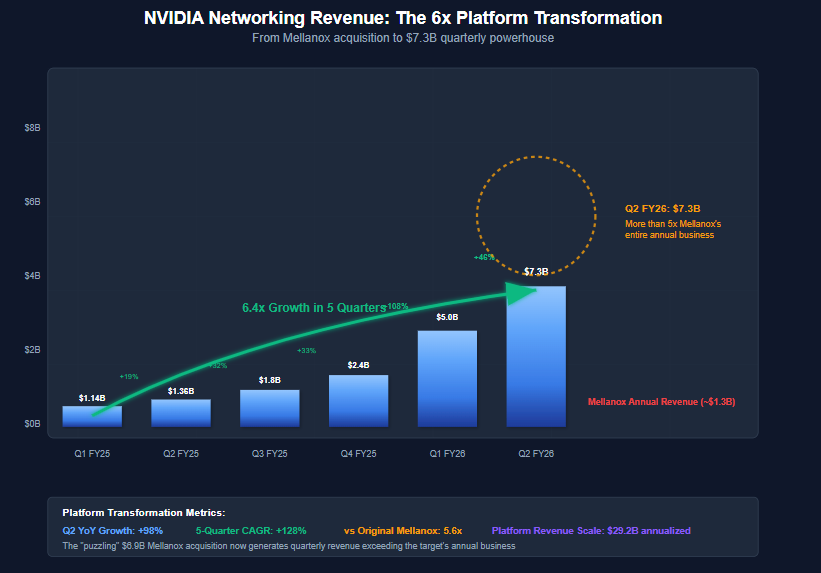

Networking as the Moat: Q2 networking revenue surged 98% YoY to $7.3B, validating Mellanox as the platform-defining acquisition that enables NVIDIA to capture structural efficiency gains and lock in customers.

Margins Like Software, Scale Like Hardware: With gross margins already at 72.7% and guided toward mid-70s, NVIDIA is delivering software-like economics alongside hyperscale-level growth, a rare combination that redefines how it should be valued.

From Bloomberg:

Nvidia Corp. gave a tepid revenue forecast for the current period, signaling that growth is decelerating after a two-year boom in AI spending. Sales will be roughly $54 billion in the fiscal third quarter... Though that was in line with the average Wall Street estimate, some analysts had projected more than $60 billion.

My first reaction when reading these results: Wall Street completely missed the point. Everyone obsessed over the 6% sequential growth "deceleration" and a guidance number that supposedly missed whisper expectations, while ignoring the $7.3 billion figure that proves NVIDIA isn't a chip company anymore.

This wasn't a semiconductor earnings report. It was proof that NVIDIA has successfully transformed into something entirely different—and most investors haven't figured out what they actually own.

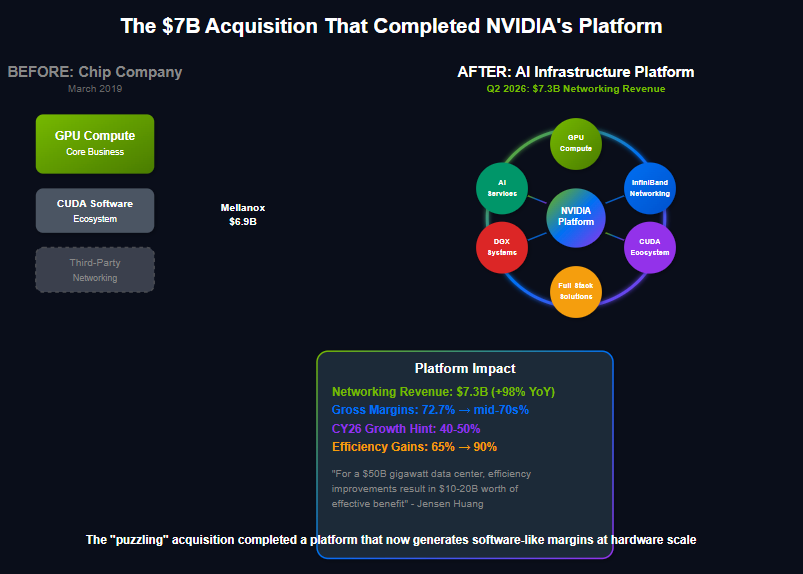

The $7 Billion Acquisition That Changed Everything

In March 2019, NVIDIA announced it would acquire Mellanox Technologies for $6.9 billion, the largest acquisition in the company's history. The reaction from analysts was predictably skeptical. Why would a graphics processing company buy a networking firm? The transaction seemed like expensive diversification—a GPU vendor wandering far from its core competency into an adjacent market with different customers, different sales cycles, and different technical challenges.

"We don't see much overlap between the two businesses," one Wall Street analyst wrote at the time. Another questioned whether NVIDIA was "paying too much for a mature networking business in a crowded market." The conventional wisdom was clear: NVIDIA should stick to what it knew best.

Jensen Huang saw something different. During the acquisition announcement, he said something that seemed like typical CEO hyperbole:

"The combination of Mellanox's smart interconnect technology and NVIDIA's accelerated computing platform will enable next-generation data centers to achieve higher performance, greater utilization and lower operating costs."

Roughly five years after the deal closed, NVIDIA just reported quarterly networking revenue of $7.3 billion, more than Mellanox's entire annual revenue when it was acquired. That single division now brings in more revenue than most S&P 500 companies. More importantly, it represents the completion of a strategic transformation that most investors still don't understand.

What Wednesday's Earnings Actually Revealed

NVIDIA reported second-quarter revenue of $46.7 billion and guided for $54 billion in the third quarter. The stock initially fell 2% in after-hours trading as investors focused on what they perceived as decelerating growth—sequential revenue growth of just 6% compared to the torrid pace of recent quarters.

This reaction missed the fundamental story hiding in plain sight. While data center compute revenue declined 1% sequentially due to China export restrictions, networking revenue exploded 46% QoQ to reach $7.3 billion, representing 98% YoY growth.
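To make those growth rates concrete, here is a quick back-of-the-envelope sketch that backs out the implied prior-period networking revenue and the sequential growth implied by the Q3 guide. It uses only the rounded figures quoted in this article, so the results are approximations rather than reported numbers, and the helper names are my own.

```python
# Rough arithmetic on the rounded figures quoted in the article ($ billions).
# Helper names are illustrative; the outputs are implied values, not reported ones.

def implied_base(current: float, growth_pct: float) -> float:
    """Prior-period value implied by `current` after `growth_pct` percent growth."""
    return current / (1 + growth_pct / 100)


def growth_pct(new: float, old: float) -> float:
    """Percent change from `old` to `new`."""
    return (new / old - 1) * 100


networking_q2 = 7.3       # Q2 networking revenue
revenue_q2 = 46.7         # Q2 total revenue
revenue_q3_guide = 54.0   # Q3 revenue guidance

prior_quarter_networking = implied_base(networking_q2, 46)   # from 46% QoQ
year_ago_networking = implied_base(networking_q2, 98)        # from 98% YoY
guided_sequential_growth = growth_pct(revenue_q3_guide, revenue_q2)

print(f"Implied prior-quarter networking: ${prior_quarter_networking:.2f}B")
print(f"Implied year-ago networking:      ${year_ago_networking:.2f}B")
print(f"Sequential growth implied by Q3 guide: {guided_sequential_growth:.1f}%")
```

On these inputs, networking added a little over $2 billion in a single quarter, and the Q3 guide implies sequential growth well above the 6% that drew the headlines.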

When networking grows faster than compute at NVIDIA, you're not watching a semiconductor company anymore. You're watching the completion of a platform transformation that began with that puzzling Mellanox acquisition.

The Growth Hint That Changes Everything

The most important thirty seconds of the entire earnings call had nothing to do with Q2 results. When asked whether 50% annual growth was "a reasonable bogey for how much datacenter revenue should grow next year," Jensen deflected the specific number, but his language suggested strong forward visibility:

"We have reasonable forecasts from our large customers for next year, a very, very significant forecast."

"AI native start-ups are really scrambling to get capacity so that they could train their reasoning models. And so the demand is really, really high."

What this likely signals: NVIDIA has customer forecasts, and possibly firm commitments, extending into CY26 that management views as substantial. However, translating Jensen's suggestive comments into specific growth percentages involves considerable interpretive leaps.

The math is demanding. NVIDIA's current data center run rate is approximately $164B annually. Maintaining 40-50% growth rates gets harder in absolute dollar terms as the base expands: adding $80B in new annual revenue would exceed most companies' total revenue. Moreover, 40-50% growth would actually represent deceleration from the current 56% YoY pace.

A more analytically grounded interpretation suggests continued strong growth driven by reasoning AI's computational multiplier effects and customer forward commitments, but likely in the 25-35% range, compared with the 29% YoY consensus expectation. Even at the lower end, this would represent exceptional performance for a company approaching $200B in annual revenue.
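The scenarios above are easier to compare in dollar terms. This is a minimal sketch that applies each growth rate mentioned in this section to the roughly $164B run rate; treating the run rate as the base year is my simplifying assumption, and the function name is illustrative.

```python
# Dollar impact of each growth scenario discussed above, in $ billions.
# Assumption: the ~$164B annualized data center run rate is used as the base.

RUN_RATE_B = 164.0


def scenario(base: float, growth_pct: float) -> tuple[float, float]:
    """Return (added revenue, resulting revenue) for a given percent growth."""
    added = base * growth_pct / 100
    return added, base + added


# 25-35% is the more conservative range, 29% the cited consensus,
# and 40-50% the range implied by the analyst's question on the call.
for g in (25, 29, 35, 40, 50):
    added, total = scenario(RUN_RATE_B, g)
    print(f"{g:>2}% growth: +${added:5.1f}B -> ${total:5.1f}B")
```

Even the 25% case adds about $41B of annual revenue, while the $80B figure cited above sits near the top of the 40-50% range.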

These forward comments transform the investment thesis not because of specific growth rates, but because they demonstrate Jensen's confidence in sustained demand visibility extending well beyond typical semiconductor cycles. This suggests NVIDIA's platform transformation is creating the kind of revenue predictability typically associated with infrastructure plays rather than cyclical hardware businesses.

The Architecture of Dependency

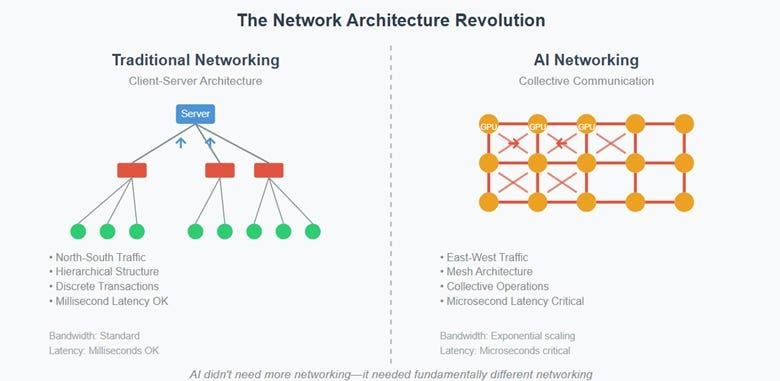

To understand what's really happening, consider what AI training actually requires. Traditional data centers move information between servers that work largely independently. AI training requires thousands of GPUs to coordinate every calculation in perfect synchronization, sharing intermediate results continuously across the entire cluster.

The difference is like comparing a chess match to conducting a symphony. Both require communication, but the performance requirements are entirely different. Try conducting the New York Philharmonic with walkie-talkies and you'll understand why AI needed fundamentally different networking.

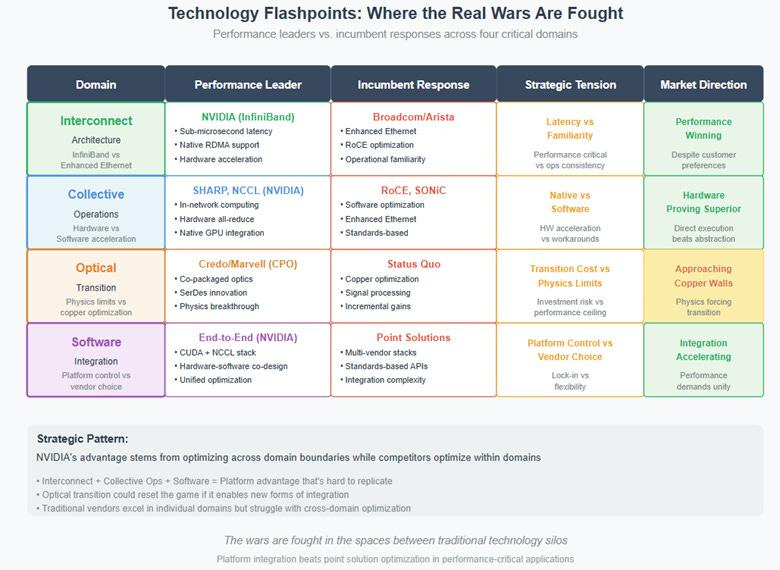

I wrote about this dynamic in detail back in June in "The AI Networking Wars: How Performance Rewrote the Competitive Hierarchy", where I argued that NVIDIA's genius was recognizing the fundamental mismatch between traditional networking architectures and AI requirements before anyone else understood the implications.

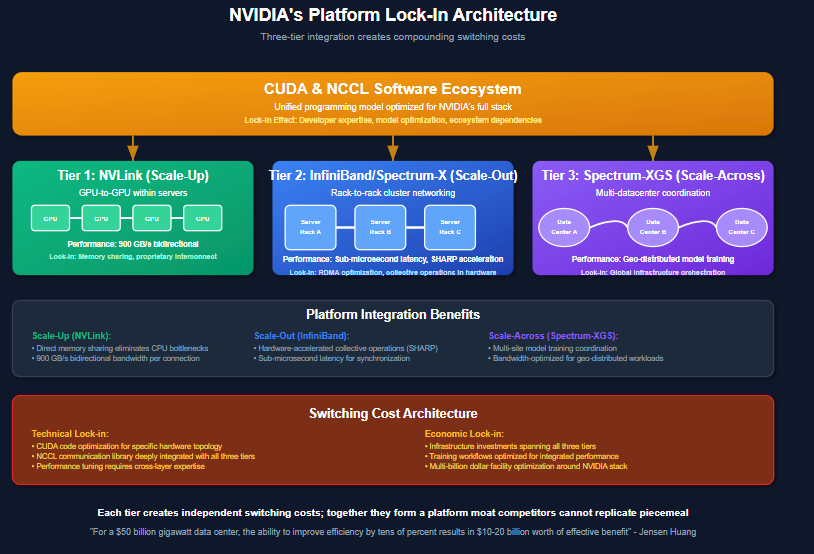

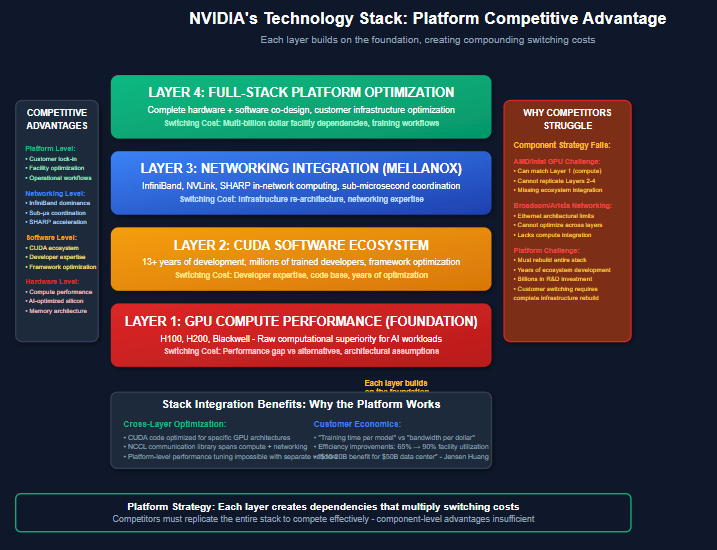

The Mellanox acquisition wasn't diversification—it was completing a platform. While competitors continued thinking about selling individual components, NVIDIA was building integrated systems where the network fabric became as critical as the processors themselves.

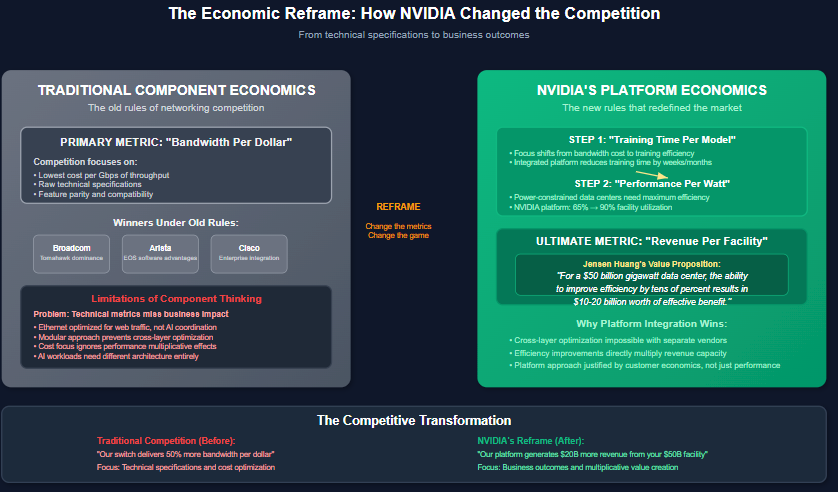

Wednesday's results validated this thesis in spectacular fashion. CFO Colette Kress explained that NVIDIA's networking solutions can improve AI cluster efficiency "from approximately 65% to 85-90%." In power-constrained data centers, that efficiency improvement translates directly to revenue generation capability for customers.

Jensen Huang quantified this value proposition: "For a $50 billion gigawatt data center, the ability to improve efficiency by tens of percent results in $10-20 billion worth of effective benefit." This is why customers pay premium prices for NVIDIA's integrated solutions—the networking improvements more than pay for themselves through increased facility utilization.
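One way to sanity-check that range is to combine the two figures quoted above. The sketch below is my own reading, not management's stated method: it assumes the benefit equals the facility cost multiplied by the relative gain in useful output when utilization moves from 65% to 85-90%.

```python
# Sanity check on the "$10-20 billion" benefit, in $ billions.
# Assumption (mine, not management's): benefit = facility cost x relative
# increase in useful output when cluster efficiency rises from the baseline.

FACILITY_COST_B = 50.0        # "$50 billion gigawatt data center"
BASELINE_UTILIZATION = 0.65   # "approximately 65%"


def effective_benefit(cost_b: float, baseline: float, improved: float) -> float:
    """Value of the extra useful output, priced at the facility's cost."""
    relative_gain = improved / baseline - 1
    return cost_b * relative_gain


low_b = effective_benefit(FACILITY_COST_B, BASELINE_UTILIZATION, 0.85)
high_b = effective_benefit(FACILITY_COST_B, BASELINE_UTILIZATION, 0.90)

print(f"Benefit at 85% utilization: ${low_b:.1f}B")
print(f"Benefit at 90% utilization: ${high_b:.1f}B")
```

Under that assumption, both ends land inside the quoted $10-20 billion range. Counting the gain in percentage points instead (20 to 25 points on $50 billion) gives $10-12.5 billion, which also fits.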

The Economic Reframe That Competitors Can't Match

What makes this platform strategy particularly powerful is how it reframes the entire economic calculation for customers. As I detailed in the networking analysis, traditional networking competed on "bandwidth per dollar"—how much data you could move at the lowest cost. NVIDIA changed the game by making customers care about "training time per model," and now "performance per watt" and ultimately "revenue per facility."

This shift is evident in management's language. Rather than discussing chip specifications or bandwidth metrics, Huang consistently frames NVIDIA's value in terms of customer economics: "NVIDIA's performance per unit of energy used drives the revenue growth of that factory."

The competitive implications are profound. Companies like Broadcom and Arista, which I identified as facing "architectural mismatch" problems in June, are indeed struggling to respond. Broadcom's Jericho3-AI and Arista's EOS optimizations represent smart engineering, but they're still fighting the fundamental physics limitations that make Ethernet suboptimal for AI workloads.

Meanwhile, NVIDIA's three-tier networking strategy—NVLink for scale-up, InfiniBand and Spectrum-X for scale-out, and now Spectrum-XGS for scale-across multiple data centers—creates comprehensive platform lock-in exactly as predicted. Spectrum-X Ethernet alone is now "annualizing over $10 billion" according to management, validating the thesis that NVIDIA could succeed in Ethernet environments despite technical compromises.

The platform approach also creates switching costs that extend far beyond hardware procurement. Customers optimizing entire AI infrastructures around NVIDIA's architecture must retrain engineering teams, rebuild software stacks, and re-architect their approach to distributed computing. These organizational investments become moats protecting NVIDIA's market position.

Platform Mix and Margin Expansion

The networking success is driving a fundamental change in NVIDIA's business model that most investors haven't recognized. Look at the gross margin trajectory:

Q4 FY25: 60.5% (depressed by China inventory charges)

Q1 FY26: 61.0% (initial recovery)

Q2 FY26: 72.7% (platform mix improvement)

Q3 FY26 guide: 73.5% (path to "mid-70s")

This isn't cyclical pricing power from semiconductor shortages. This is structural margin improvement driven by selling complete solutions rather than individual components. NVIDIA is increasingly capturing value across the entire AI infrastructure stack, from processors to networking to software optimization.

The company's guidance for "mid-70s" gross margins by year-end reflects management's confidence that this platform approach is sustainable, even if growth runs at the 40-50% annual rates discussed earlier. These are the kinds of margins typically associated with software companies, not hardware manufacturers, combined with growth rates that most software companies can only dream of achieving.

China as Optionality, Not Dependency

One of the most strategically important aspects of Wednesday's results was how NVIDIA handled China. Rather than building China revenue into their baseline guidance, management explicitly excluded it while noting that $2-5 billion in additional revenue could materialize if geopolitical issues resolve.

This positioning accomplishes several goals simultaneously. It demonstrates that NVIDIA's core business no longer depends on China access for continued growth—revenue increased 56% YoY with China effectively at zero. It creates massive upside optionality should restrictions ease. And it positions NVIDIA as compliance-focused rather than confrontational toward export controls.

The strategic implications extend beyond quarterly revenue. NVIDIA's platform approach makes China market access valuable but not essential, giving the company negotiating flexibility that pure component suppliers lack. When Jensen references China as a potential $50 billion annual market growing 50% yearly, he's describing a massive upside catalyst that doesn't compromise the baseline growth trajectory.

The Competitive Moat

Wednesday's call also revealed why NVIDIA's integrated approach is difficult for competitors to replicate. When asked about ASIC competition, Huang provided an extensive explanation of full-stack complexity that demonstrated confidence rather than concern.

"NVIDIA builds very different things than ASICs," he explained. "Accelerated computing is a full stack co-design problem. AI factories have become so much more complex because of the scale... It is really the ultimate, most extreme computer science problem the world's ever seen."

This response highlighted the fundamental challenge facing potential competitors—exactly the dynamic I outlined in the networking analysis.

AMD and hyperscaler custom chips can match NVIDIA's computational performance in specific applications, but they cannot replicate the software ecosystem, networking integration, and systems optimization that make NVIDIA's platform economically compelling for customers.

The networking revenue surge provides concrete evidence of this competitive advantage. Competitors can build alternative processors, but building alternative ecosystems requires the kind of integrated platform development that takes years to achieve and billions to fund. As industry expert Dylan Patel recently noted, custom silicon from hyperscalers represents "margin compression rather than technological disruption"—they can match NVIDIA's capabilities for their own use cases but cannot replicate the platform effects that drive external demand.

The Infrastructure Reality

The 40-50% growth trajectory Jensen hinted at is supported by infrastructure realities that favor NVIDIA's integrated approach. The bottlenecks aren't chip supply anymore—they're power grid connections, skilled electricians, substations, and cooling systems. These constraints actually strengthen NVIDIA's position because their performance-per-watt advantages become economically mandatory rather than merely technically superior.

When data centers are power-constrained and construction-limited, the platform that maximizes output per scarce resource wins permanently. NVIDIA's networking integration isn't just a nice-to-have feature—it's the difference between 65% and 90% facility utilization, which translates to billions in customer value creation.

What This Means

NVIDIA has achieved something rare in technology: maintaining hypergrowth characteristics while developing utility-like demand durability. Traditional semiconductor companies face cyclical demand based on upgrade cycles and inventory dynamics. NVIDIA is demonstrating the revenue predictability of a platform while maintaining the growth rates of a technology leader—with forward visibility extending into CY26.

The $7.3 billion networking number represents more than impressive quarterly performance—it's proof that the Mellanox acquisition completed a transformation most investors still don't recognize. NVIDIA isn't a chip company that happens to be benefiting from the AI boom. They're the infrastructure platform that makes the AI boom economically viable, with customer commitments extending well into next year at growth rates that defy the deceleration narrative.

This distinction matters for how NVIDIA should be valued going forward. Platform companies with network effects and switching costs typically command premium multiples because their competitive advantages compound over time rather than erode. The networking success suggests NVIDIA has built exactly these characteristics while maintaining growth rates that would be impressive for any technology company, let alone one approaching $200 billion in annual revenue.

The growth rates will eventually normalize—no company can grow 40-50% annually forever. But the platform advantages that drove Wednesday's networking results should strengthen with scale, creating the kind of durable competitive position that sustains long-term value creation. And based on Jensen's forward guidance, that normalization isn't happening in CY26.

That $6.9 billion Mellanox acquisition that puzzled analysts five years ago may prove to be one of the most strategically important technology acquisitions of the decade. NVIDIA didn't just buy a networking company—they bought the final piece of a platform that now generates more quarterly revenue than most companies achieve annually, with forward demand visibility that suggests continued strong growth even as specific growth rates moderate from current levels.

The networking wars I analyzed in June have a clear winner, and Wednesday's earnings proved it decisively—while revealing that the real battle is just beginning.


Disclaimer:

The content does not constitute any kind of investment or financial advice. Kindly reach out to your advisor for any investment-related advice. Please refer to the tab “Legal | Disclaimer” to read the complete disclaimer.