Silicon, Software, Scarcity: Nvidia's Playbook Meets the Hyperscaler Imperative

How Nvidia’s platform playbook collided with the hyperscaler imperative—and rewired the AI infrastructure economy

TLDR

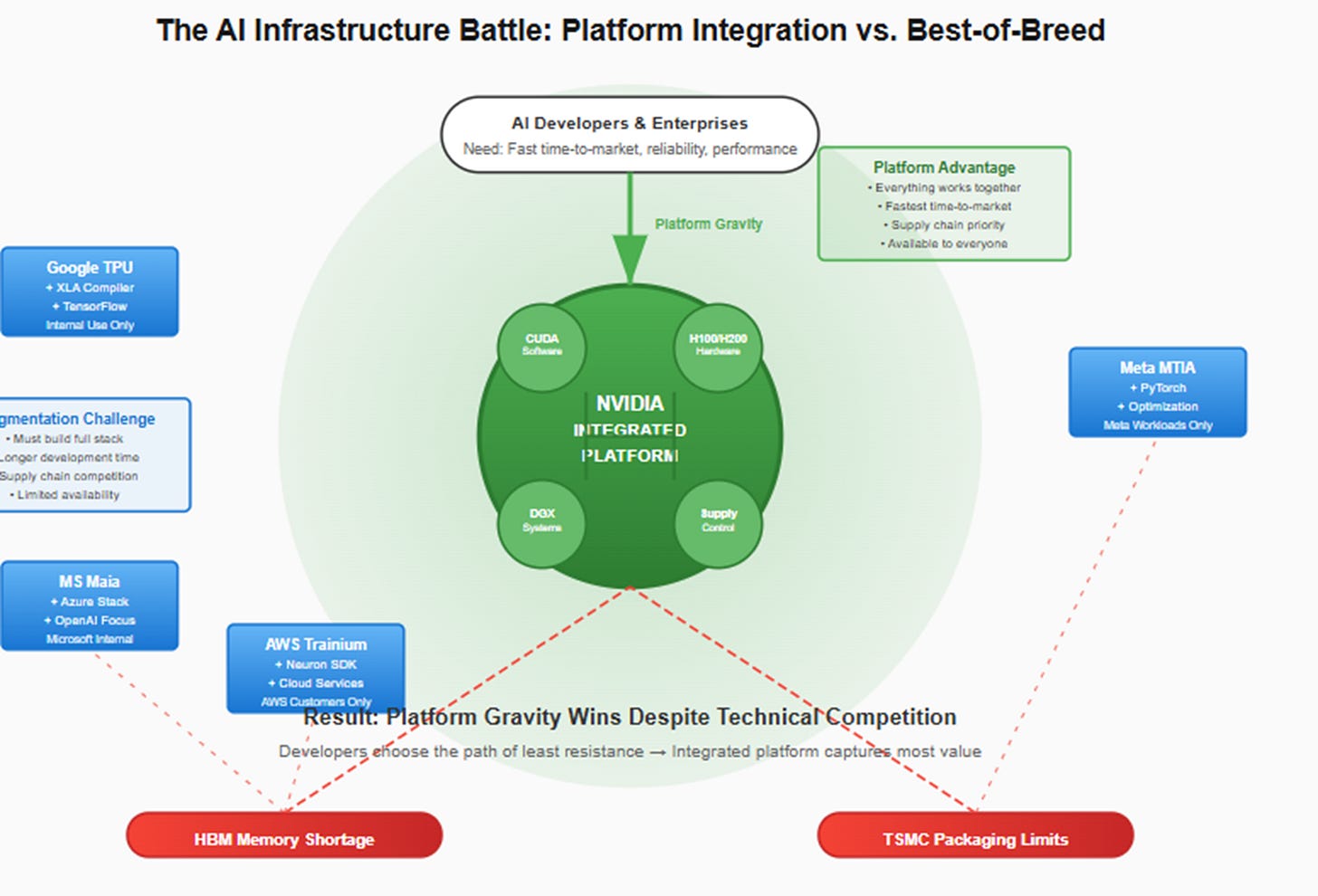

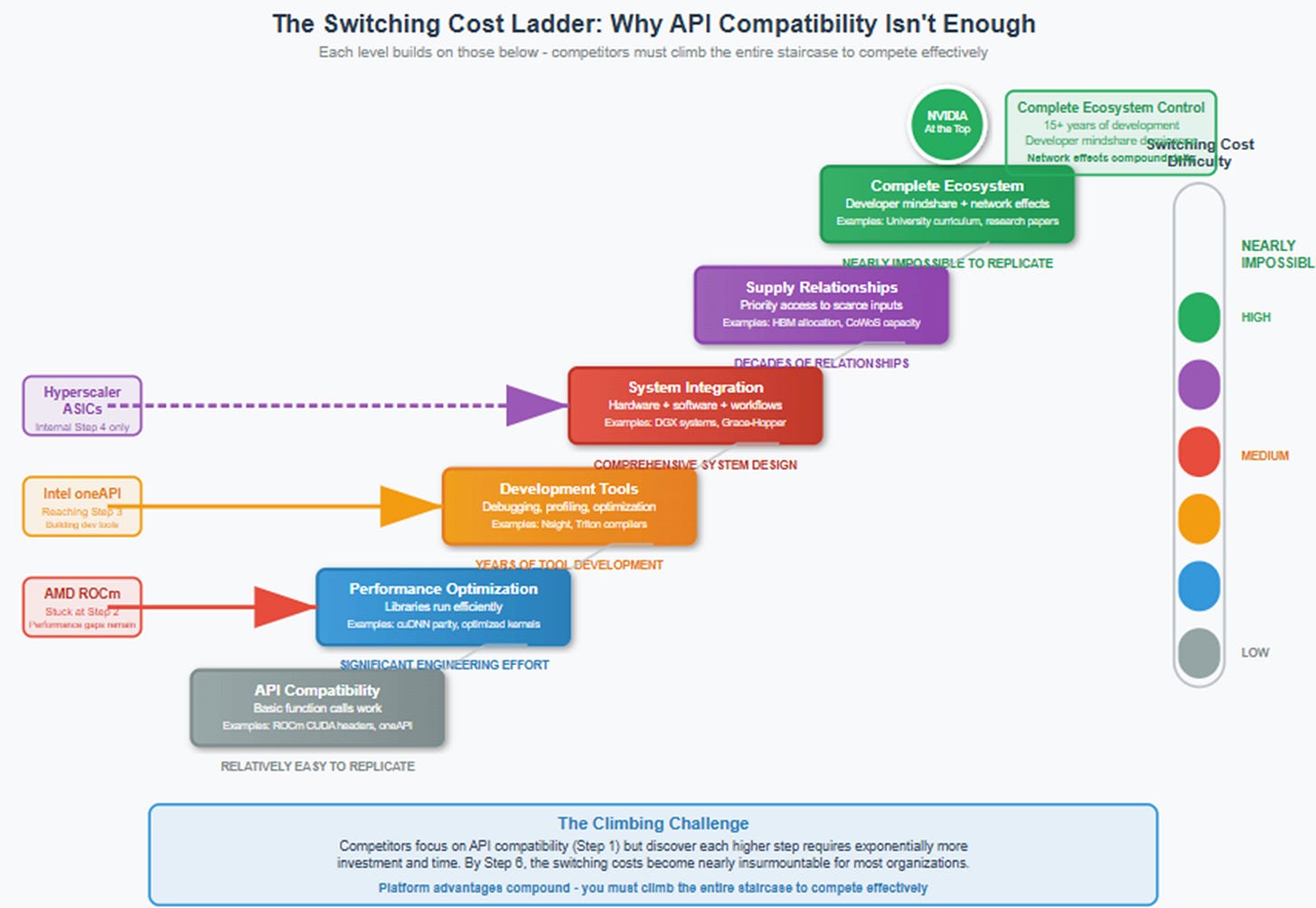

Platforms beat products. Nvidia’s moat is a bundle—CUDA + system integration (NVLink/DGX/Grace-Hopper) + control of scarce inputs (HBM/CoWoS). That combo creates experience lock-in and preserves premium pricing on memory-rich, time-to-SOTA gear.

Hyperscalers counter with silicon and abstraction. TPUs/Trainium/MTIA plus XLA/Neuron/torch.compile/Triton aim to reclaim margin and portability; inference commoditizes first, while fast-changing training sticks to GPUs until true 90%+ backend parity shows up on real workloads.

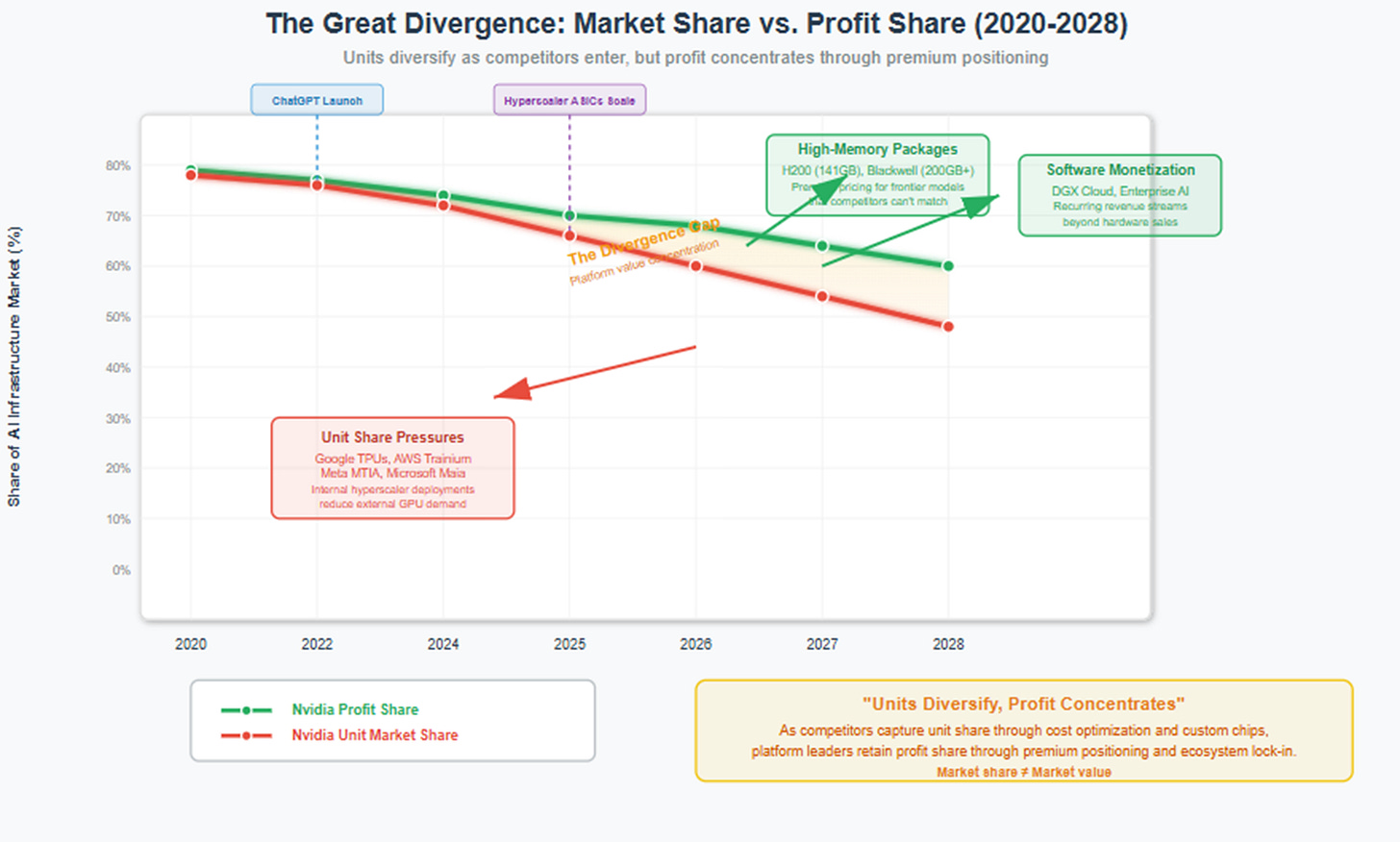

Share fragments, profit concentrates. Base case: Nvidia’s unit share moderates but profit pool stays skewed via high-memory packages and rising software attach. Watch three tells: (1) training parity, (2) ASICs powering new model development at scale, (3) HBM/CoWoS allocation or regulatory shifts.

Predicting AI infrastructure winners by staring at benchmarks is like picking Federer vs. Nadal—or Nadal vs. Djokovic—by tallying aces and unforced errors. You miss why Rafa pins Roger to the backhand, or why Novak neutralizes Rafa’s topspin with depth and return position.

The same is true here: CUDA, HBM/CoWoS allocation, DGX systems, PyTorch compilers, and hyperscaler ASICs are styles, patterns, and match plans—not just numbers. Nvidia’s game is platform gravity plus supply orchestration; hyperscalers counter with backend abstraction and workload partitioning. If you ignore the strategic matchups and only compare FLOPs/$, you’ll forecast the wrong champion on the wrong surface.

“Benchmarks are the box score; platforms are the game plan. To call Nvidia vs. the hyperscalers on stats alone is like picking Nadal–Djokovic by winners and errors without watching where the ball actually goes.”

This article is lengthy because it connects three decades of platform lessons to today’s AI buildout: defining compute, turning features into a stack, and locking down scarce inputs—then tests them against TPU/Trainium/MTIA. Read it to understand where training vs. inference will land and who keeps the profit pool.

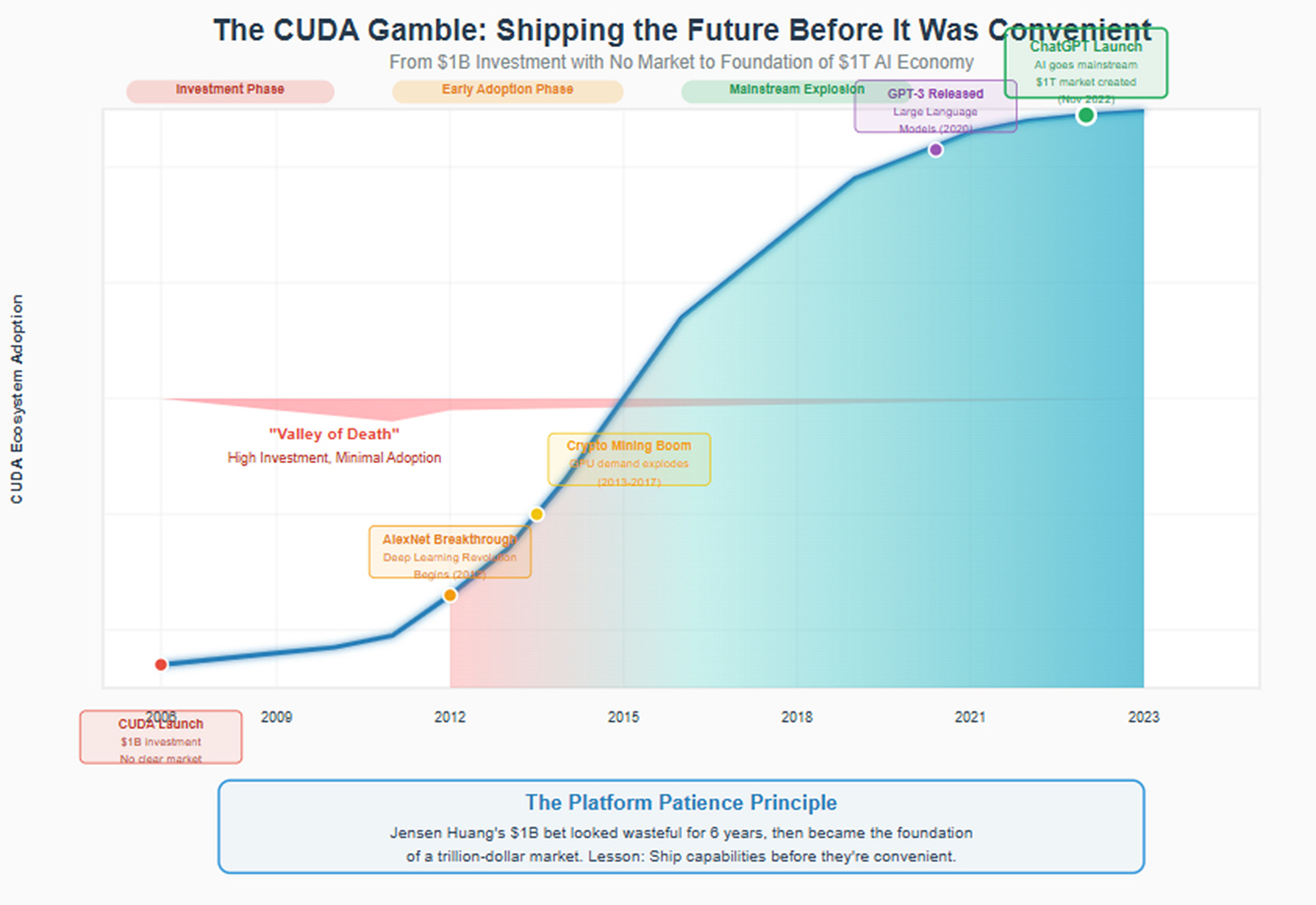

The CUDA Bet

In late 2005, Jensen Huang faced a room full of skeptical Nvidia engineers. The company had just committed nearly a billion dollars to developing CUDA—a parallel computing platform that would let any programmer write code for graphics processors. The problem was fundamental: there was no market for it.

"Why are we doing this?" one engineer asked. Gaming, Nvidia's core business, didn't need general-purpose GPU computing. The scientific computing community was deeply skeptical that graphics chips could handle serious computational work. Wall Street analysts were already calling it a costly distraction from the profitable business of making faster gaming GPUs.

But Huang had been thinking about this moment since Nvidia's founding in 1993. The company had survived the brutal graphics wars of the 1990s not by building the fastest chips, but by understanding something deeper: platforms beat products, and whoever controls the software ecosystem controls the market.

The decision to fund CUDA was a bet on infrastructure before demand—shipping the future before it was convenient. CUDA launched in 2006 alongside the GeForce 8800 GTX, the first GPU built on Nvidia's unified shader architecture. Most buyers ignored the compute capabilities entirely, focused only on frame rates in Crysis and Call of Duty.

For three years, CUDA seemed to validate every criticism. Usage was minimal, adoption was slow, and the investment looked like an expensive science experiment. Then machine learning researchers discovered that neural networks could run dramatically faster on parallel processors. Cryptocurrency miners realized that GPUs could calculate hashes more efficiently than CPUs. Scientific computing applications found that weather modeling, protein folding, and seismic analysis could be accelerated by orders of magnitude.

By 2016, CUDA powered the deep learning revolution. By 2023, it underpinned ChatGPT and nearly every major AI breakthrough outside Google's TPU-based work. What looked like a wasteful bet in 2006 became the cornerstone of a trillion-dollar market position.

The CUDA story reveals a principle that extends far beyond semiconductors: the fastest way to win hardware is to win the software and the system around it—then control the scarce inputs that everyone needs.

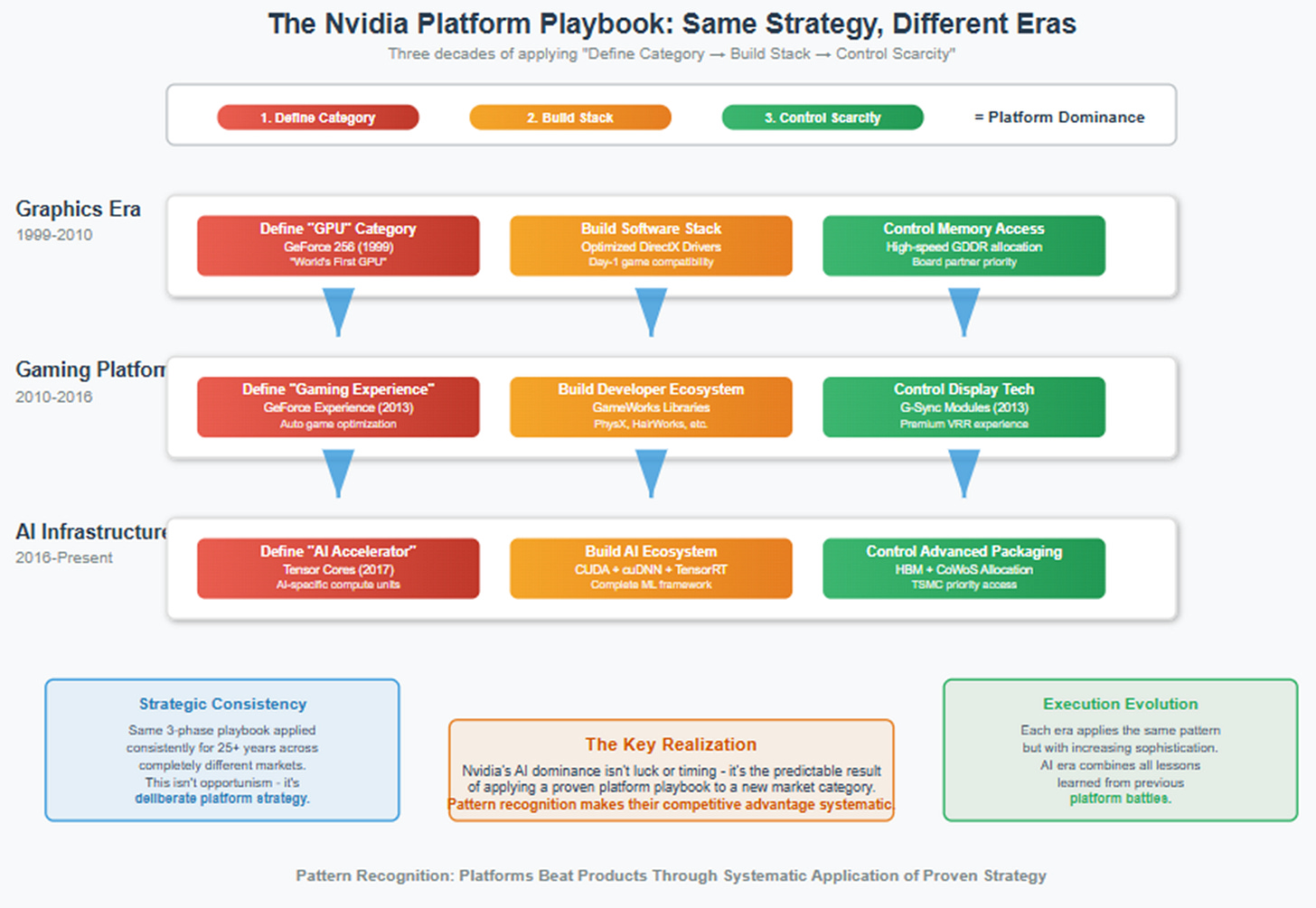

The Pattern: Lessons From Three Decades of Platform Building

Nvidia's path to AI dominance wasn't accidental. It followed a playbook the company had been perfecting since 1993, learning through successive hardware generations how platforms triumph over products. Three critical lessons emerged from the graphics wars that would define the company's approach to every subsequent market.

Lesson 1: Define the Compute, Define the Market

When Nvidia introduced the GeForce 256 in October 1999, the company didn't just ship a faster graphics card—it invented an entire category. Before GeForce 256, 3D graphics cards were "accelerators" or "display adapters," specialized hardware that helped CPUs render polygons faster. Graphics processing was understood as a narrow, dedicated function.

GeForce 256 changed that by introducing hardware transform and lighting (T&L) engines directly on the chip. For the first time, geometric calculations that had always run on the CPU were offloaded to dedicated silicon. More importantly, Nvidia branded this the "world's first GPU"—Graphics Processing Unit—coining a term that reframed the entire conversation.

The naming wasn't marketing fluff. By defining the GPU as a processor in its own right, one that took over geometry work the CPU used to do, rather than as an add-on accelerator, Nvidia established a new category with different rules. Competitors could build faster rasterization engines, but they were fighting yesterday's battle. Nvidia had defined what next-generation graphics computation meant: moving ever more of the pipeline onto dedicated parallel silicon.

The same pattern repeated in 2001 with the GeForce3's introduction of programmable pixel and vertex shaders. While ATI focused on improving fixed-function performance, Nvidia pushed the industry toward programmable pipelines that let developers write custom graphics effects. This wasn't just a technical choice—it was a strategic bet that flexibility would matter more than raw speed.

The pattern culminated in 2018 with RTX ray tracing. Ray tracing itself wasn't new: film studios had rendered with it offline for decades. Doing it in real time on consumer hardware, however, required massive computational resources. Rather than incremental improvements to traditional rasterization, Nvidia built dedicated RT cores and created an entire ecosystem around real-time ray tracing: APIs, tools, game engine integrations, and developer support.

Early RTX adoption was challenging. Games like Battlefield V and Metro Exodus showcased impressive lighting and reflections, but performance was often halved with ray tracing enabled. Critics called it a gimmick, an expensive feature that few games would support.

But Nvidia understood that creating new categories requires shipping capabilities before they're convenient. By 2024, over 400 games supported RTX features, and ray-traced graphics were becoming expected in major releases. AMD scrambled to add ray tracing to RDNA2, but arrived two years later, in 2020, with a less sophisticated implementation.

Categories aren't created by benchmark scores or transistor counts. They're created by software ecosystems that target new capabilities and make them feel inevitable.

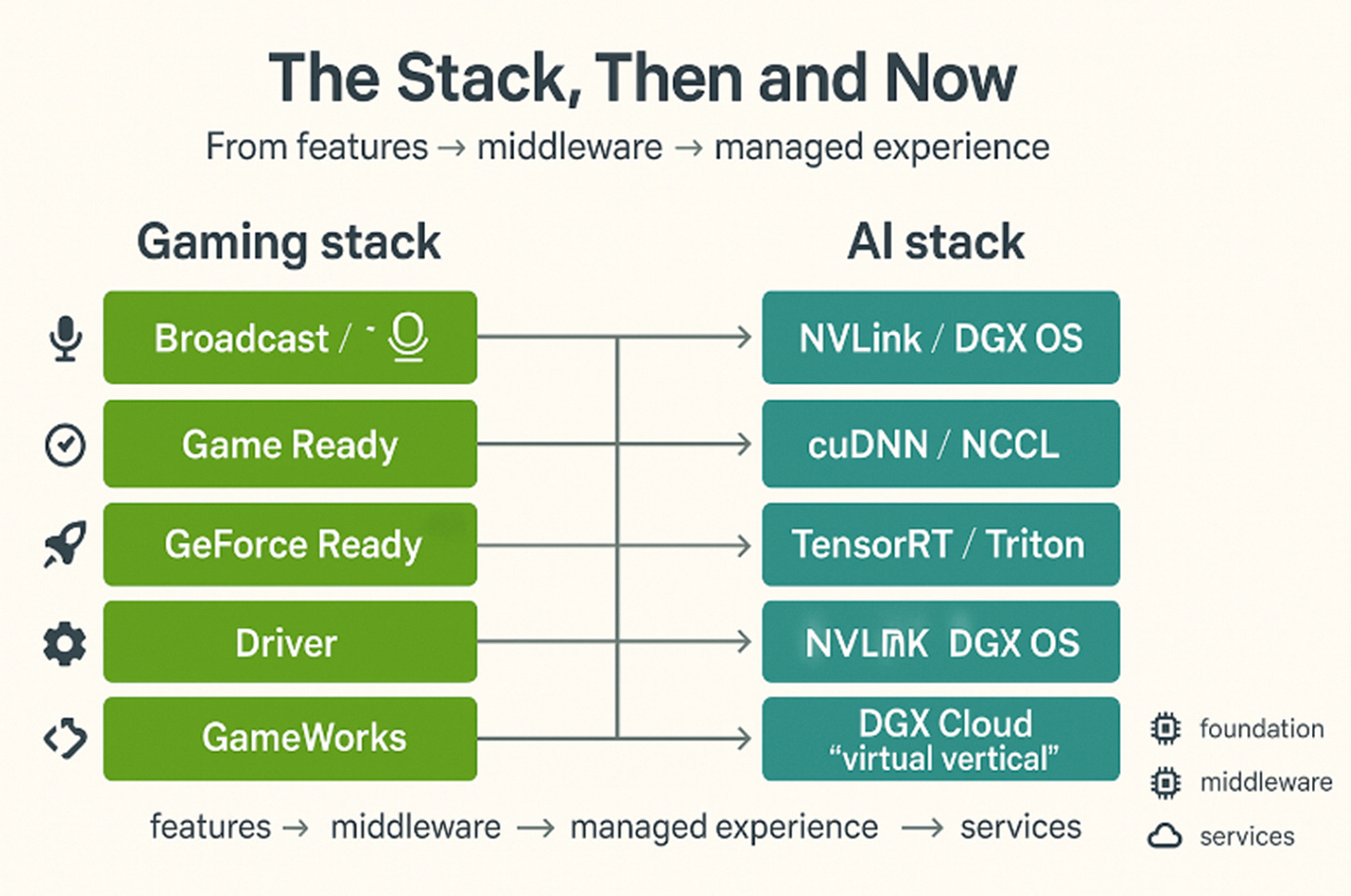

Lesson 2: Turn Features into a Stack

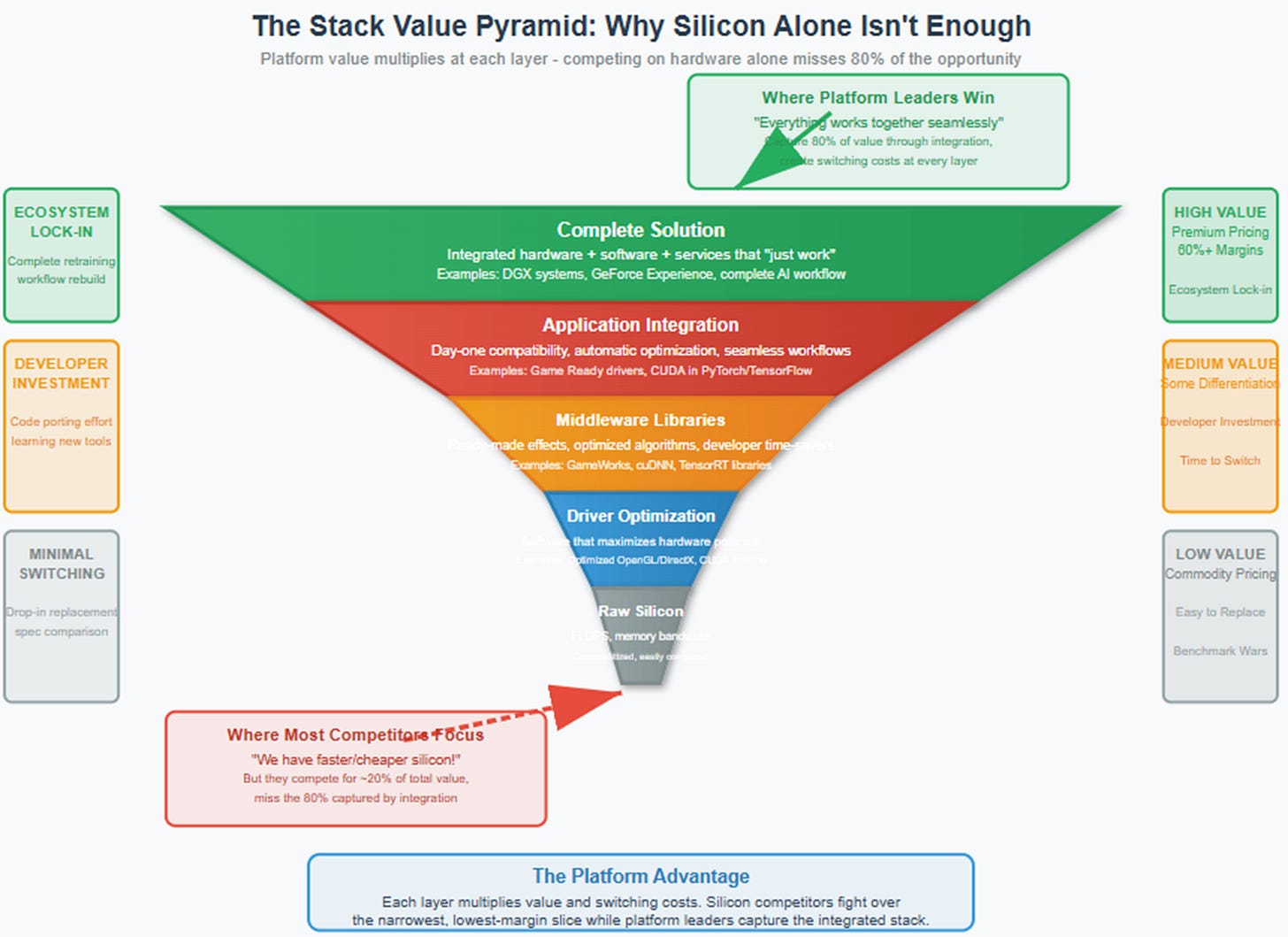

Raw silicon performance was never Nvidia's real product. The company's breakthrough came from understanding that developers needed more than fast hardware—they needed an entire ecosystem that made that hardware productive.

This realization emerged from Nvidia's early struggles. The NV1 chip, launched in 1995, was technically innovative but incompatible with Microsoft's emerging DirectX standard. Despite impressive technology, NV1 failed commercially because it required developers to target Nvidia's proprietary APIs rather than industry standards.

The lesson was clear: hardware capabilities without software support are worthless. But the solution wasn't just compatibility—it was building value at every layer of the stack.

This meant moving upward systematically. Basic DirectX compatibility became optimized driver implementations that squeezed extra performance from Nvidia GPUs. Driver optimizations became GameWorks libraries—middleware that provided ready-made effects for smoke, fire, realistic hair, advanced physics, and ambient occlusion.

GameWorks was controversial. AMD accused Nvidia of creating "black box" libraries that hindered performance on competing hardware. Game developers appreciated the time savings but worried about vendor lock-in. Critics argued that GameWorks effects often ran poorly on AMD cards because the code was optimized specifically for Nvidia's architecture.

But controversy missed the strategic point. GameWorks solved real developer problems while making Nvidia GPUs more attractive. If a major game like The Witcher 3 used HairWorks for realistic character hair, and that effect ran dramatically better on GeForce cards, enthusiasts had a clear reason to choose Nvidia.

The stack continued upward. Game-specific optimizations became day-zero "Game Ready" drivers that ensured new titles ran well at launch. Hardware capabilities became end-user applications like GeForce Experience that automatically configured optimal settings for each game and hardware combination.

Each layer multiplied the leverage of layers below. A faster GPU was nice; a faster GPU with day-one driver support, automatic optimization, and exclusive visual effects was essential. Competitors found themselves perpetually reacting to Nvidia's hardware while simultaneously trying to build their own software ecosystems from scratch.

By the early 2020s, Nvidia's software offerings extended beyond gaming into streaming (NVIDIA Broadcast for noise removal), content creation (RTX Remix for remastering classic games), and cloud services (GeForce Now for streaming PC games to any device). What started as graphics drivers had become a comprehensive platform for interactive entertainment.

Lesson 3: Control the Hard Parts

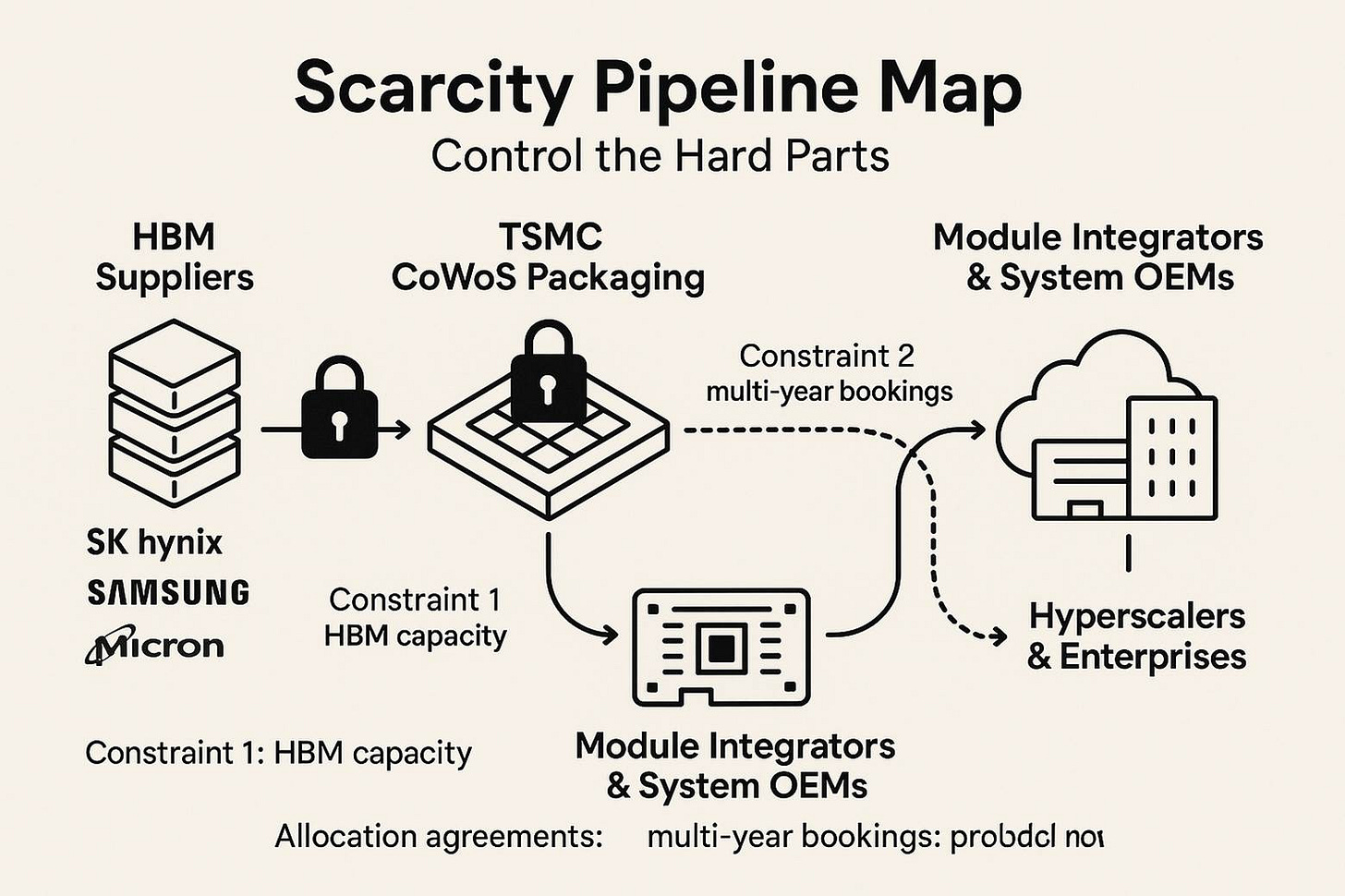

The most important lesson was also the most subtle. In every generation, Nvidia identified the scarcest, most difficult-to-replicate input and secured preferential access to it.

In the early 2000s, this meant controlling board supply and retail relationships. While competitors designed chips and hoped partners would build competitive cards, Nvidia cultivated deep relationships with add-in-board manufacturers like ASUS, EVGA, and MSI. When new GPU launches required exotic cooling solutions or high-speed memory, Nvidia's partners got priority access to components.

Later, control shifted to more sophisticated bottlenecks. The introduction of G-Sync in 2013 exemplified this strategy. Variable refresh rate displays promised to eliminate screen tearing and stuttering, but implementation required precise synchronization between GPU output and monitor refresh cycles.

AMD responded with FreeSync, an open standard built on VESA's Adaptive-Sync specification. FreeSync monitors were cheaper because they didn't require proprietary hardware modules. G-Sync, by contrast, relied on a dedicated module that Nvidia designed and supplied to monitor makers, giving the company direct control over quality and capabilities.

G-Sync monitors commanded premium pricing, but they delivered superior performance with wider operating ranges and better motion clarity. More importantly, the G-Sync module created a direct revenue stream from display sales while ensuring that the best variable refresh experience required Nvidia GPUs.

The pattern was consistent across generations: find the bottleneck that determines system performance, then make sure you control it better than anyone else.

When high-bandwidth memory became critical for compute applications, Nvidia secured preferential HBM allocation from SK Hynix and Samsung.

When advanced packaging enabled larger chip designs, Nvidia booked TSMC's most sophisticated CoWoS capacity years in advance.

The company's real product became the experience contract around silicon—a guarantee that buying Nvidia meant getting not just hardware, but an integrated solution that worked better than anything you could assemble yourself.

The Great Transition: From Gaming to AI Infrastructure

By 2016, Nvidia faced a strategic inflection point. Gaming remained profitable, but growth was slowing as the PC market matured. Console wins had largely gone to AMD, which supplied chips for PlayStation 4 and Xbox One and later their successors. Mobile gaming ran on smartphone SoCs from Qualcomm, Apple, and others, and Nvidia's own ARM-based Tegra chips had failed to gain traction there.

But machine learning researchers had discovered something unexpected: the same parallel processing capabilities that accelerated graphics could dramatically speed up neural network training. ImageNet competitions were being won by convolutional neural networks running on consumer gaming GPUs. Research labs were buying GeForce cards in bulk to experiment with deep learning.

The AI transition revealed how prescient the CUDA investment had been. While competitors scrambled to build machine learning frameworks for their hardware, Nvidia already had a mature ecosystem. CUDA provided the low-level parallel programming interface. cuDNN offered optimized primitives for deep neural networks. TensorRT accelerated inference for trained models.

Most importantly, the entire academic community had learned parallel computing using CUDA. Universities taught GPU programming with Nvidia tools. Research papers implemented reference code in CUDA. When startups and enterprises wanted to deploy machine learning at scale, they naturally gravitated toward platforms their engineers already understood.

But the AI boom also created unprecedented challenges. Demand exploded beyond gaming into scientific computing, cryptocurrency mining, and enterprise AI. Supply chains strained under sudden volume requirements. Competitors with deep pockets—Google, Amazon, Microsoft, Apple—began developing custom silicon to reduce dependence on a single supplier.

Nvidia's response demonstrated how thoroughly the company had internalized its platform lessons. Rather than just building faster chips, Nvidia applied the complete gaming playbook to AI infrastructure: define new categories, build comprehensive software stacks, and control scarce inputs.

Completing the Arc: Platform Strategy in the Age of AI

The AI boom changed everything about compute demand, but nothing about Nvidia's underlying strategy. GPUs became general-purpose; demand exploded across industries; new competitors emerged with unlimited budgets. What remained constant was the winning formula: platform first, with silicon, interconnect, software, and services delivered faster than anyone else could absorb.

Silicon & Packaging: The New Kingmakers

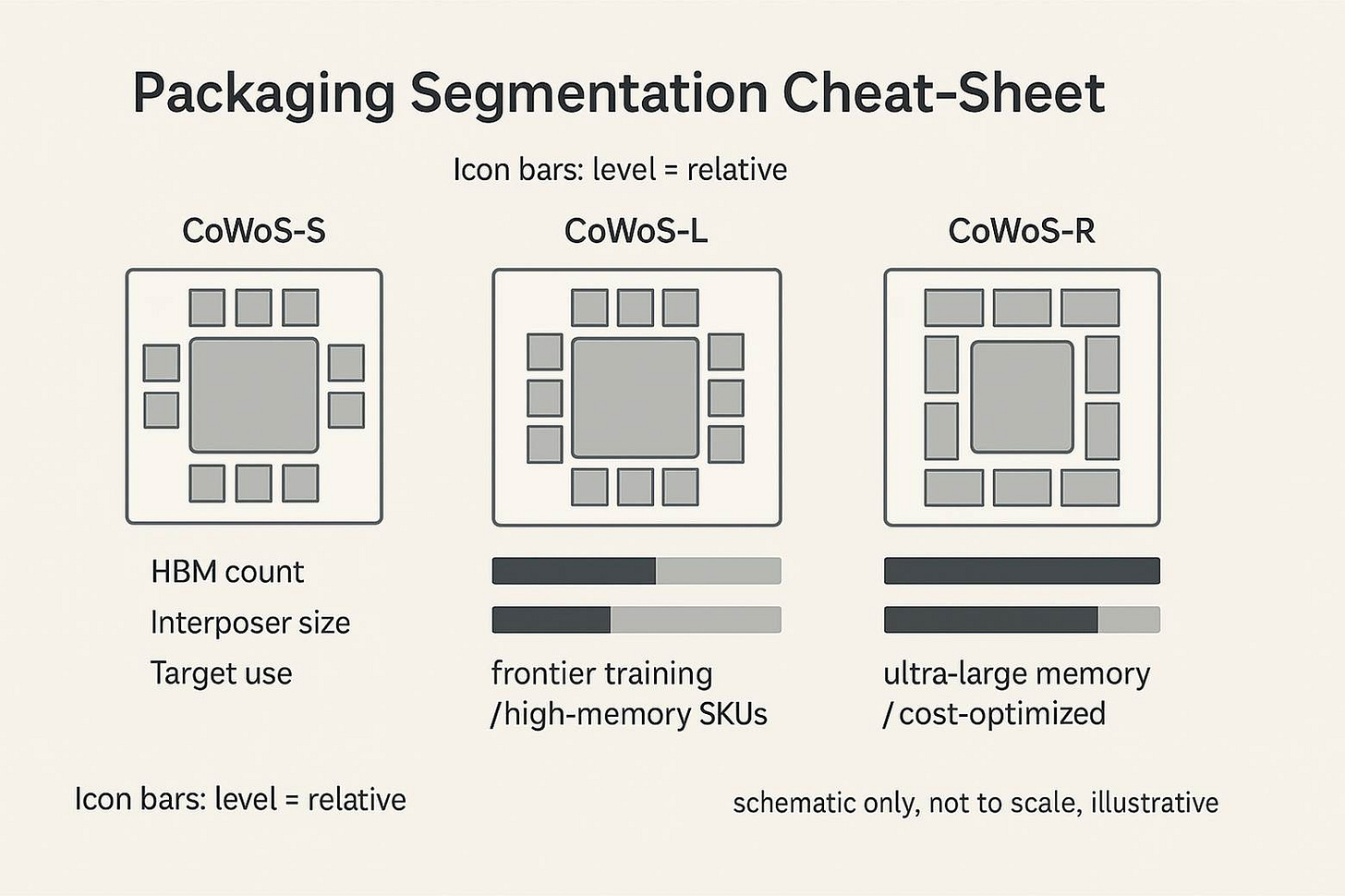

Today's scarce input isn't board supply or display modules—it's memory bandwidth and advanced packaging technology. The evolution from HBM2e to HBM3 to HBM3E to HBM4 memory, combined with TSMC's increasingly sophisticated CoWoS packaging variants, determines who can build competitive AI chips more than any architectural innovation.

Understanding this requires diving into the technical details that define modern AI accelerators. Traditional GPU designs used GDDR memory connected through relatively narrow interfaces. High Bandwidth Memory (HBM) stacks multiple memory dies vertically and connects them to processors through wide, high-speed interfaces on silicon interposers.

TSMC's Chip-on-Wafer-on-Substrate (CoWoS) technology enables this integration. CoWoS-S, the silicon-interposer variant, supports interposer areas up to roughly 2,700 square millimeters, enough for large compute dies alongside four to eight HBM stacks. This configuration delivers the 80-192 GB memory capacities that power current AI training workloads.

CoWoS-L represents the next step: larger interposers using local silicon interconnect and redistribution layers. This technology enables eight or more HBM stacks while supporting multi-chiplet processor designs. The difference between CoWoS-S and CoWoS-L isn't just technical—it's strategic positioning between volume inference and premium training markets.

Nvidia's H100 GPU uses CoWoS-S with six HBM3 stack sites, five of them active, delivering 80 GB of memory with 3.35 TB/s of bandwidth. The H200 upgrade moved to HBM3E with all six stacks active, increasing capacity to 141 GB and bandwidth to 4.8 TB/s in the same package configuration. Blackwell moves to CoWoS-L to accommodate eight HBM stacks, pushing per-GPU capacity toward 200 GB and, in later variants, beyond.
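These figures translate into a simple bound on how fast a GPU can generate text. The sketch below is a back-of-envelope model, not a benchmark: it assumes a memory-bound, batch-size-1 decode step that streams every weight from HBM once per generated token, and it ignores KV-cache traffic, compute limits, and kernel overhead, so its output is an upper bound. It also assumes the H100 SXM wires up five of its six HBM stack sites (hence 80 GB) and the H200 all six; the 70 GB model is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    capacity_gb: float      # usable HBM capacity
    bandwidth_tb_s: float   # aggregate HBM bandwidth
    active_stacks: int      # HBM stacks wired to the GPU (assumed)

    def bandwidth_per_stack_tb_s(self) -> float:
        return self.bandwidth_tb_s / self.active_stacks

    def fits(self, weight_gb: float) -> bool:
        return weight_gb <= self.capacity_gb

    def max_decode_tokens_per_s(self, weight_gb: float) -> float:
        """Upper bound on batch-1 tokens/s if every token re-reads all weights."""
        if not self.fits(weight_gb):
            raise ValueError(f"{weight_gb} GB of weights does not fit on {self.name}")
        return (self.bandwidth_tb_s * 1e12) / (weight_gb * 1e9)


H100 = Accelerator("H100 SXM", capacity_gb=80, bandwidth_tb_s=3.35, active_stacks=5)
H200 = Accelerator("H200", capacity_gb=141, bandwidth_tb_s=4.8, active_stacks=6)

# Hypothetical model: 70B parameters at 1 byte each (FP8) = 70 GB of weights.
WEIGHTS_GB = 70.0

for gpu in (H100, H200):
    print(f"{gpu.name}: {gpu.bandwidth_per_stack_tb_s():.2f} TB/s per stack, "
          f"at most {gpu.max_decode_tokens_per_s(WEIGHTS_GB):.1f} tokens/s")
```

Under the same assumptions, a 70B-parameter model held at 16-bit precision (140 GB) fits on an H200 but not on an H100. Memory decides both whether a model fits and how fast it can run.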

CoWoS-R, currently in development, uses polymer redistribution layers to enable even larger designs at lower cost. This technology could support twelve or more HBM stacks while reducing manufacturing complexity for ultra-large AI modules.

The packaging roadmap reveals Nvidia's segmentation strategy. CoWoS-L packages target premium training applications where memory capacity and bandwidth matter more than cost. CoWoS-S configurations serve volume markets where price-performance optimization is critical. CoWoS-R could enable new categories of ultra-large memory systems for specific applications.

Nvidia's advantage isn't just designing chips that use these technologies first—it's securing the manufacturing allocation to actually ship them at scale while competitors wait in queue. TSMC's advanced packaging capacity is limited, and allocation is typically booked years in advance. Control over CoWoS and HBM supply determines what reaches market and when.
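To see why packaging allocation gates shipments, count how many large interposers fit on a 300 mm wafer. The sketch below uses the common gross-die-per-wafer approximation: wafer area over die area, minus an edge-loss term. The quarterly wafer allocation and the packaging yield are hypothetical placeholders, not TSMC or Nvidia figures.

```python
import math


def gross_dies_per_wafer(die_area_mm2: float, wafer_diameter_mm: float = 300.0) -> int:
    """Common approximation: wafer area / die area minus an edge-loss term
    for partial dies around the circumference."""
    radius = wafer_diameter_mm / 2
    whole_wafer = math.pi * radius ** 2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return max(0, math.floor(whole_wafer - edge_loss))


def packages_per_quarter(wafers: int, interposer_mm2: float, yield_pct: int) -> int:
    """Good packages from a (hypothetical) quarterly CoWoS wafer allocation."""
    return wafers * gross_dies_per_wafer(interposer_mm2) * yield_pct // 100


# Hypothetical inputs: 10,000 CoWoS wafers per quarter, 90% packaging yield.
for area in (1_700, 2_700):
    print(f"{area} mm^2 interposer: {gross_dies_per_wafer(area)} per wafer, "
          f"{packages_per_quarter(10_000, area, 90):,} good packages per quarter")
```

With these placeholder inputs, a 2,700 mm² interposer yields about 13 candidates per wafer versus about 25 for a 1,700 mm² one. Larger, memory-rich packages therefore consume scarce packaging capacity roughly twice as fast per unit shipped.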

Software Gravity: The Default for New Workloads

CUDA evolved from Jensen Huang's 2006 experiment to an ecosystem that defines how the industry approaches parallel computing. Today's AI researchers don't choose CUDA because it's technically superior to alternatives—they choose it because it's the path of least resistance for getting new models running quickly.

This gravity operates at multiple levels. PyTorch and TensorFlow optimize for CUDA first and other backends second; even JAX, built with Google's TPUs in mind, treats Nvidia GPUs as a first-class target. Cloud providers offer CUDA-compatible instances as the default option, with alternative accelerators positioned as specialized choices. Universities teach parallel computing using CUDA examples and Nvidia-donated hardware.

The switching costs aren't just technical—they're educational, operational, and temporal. Porting a research codebase from CUDA to AMD's ROCm or Intel's oneAPI requires significant engineering effort. More importantly, it means accepting slower iteration cycles while alternative toolchains mature and performance optimizations catch up.

This gravity is strongest for new, rapidly-evolving workloads where established best practices don't exist yet. Large language model architectures change frequently as researchers experiment with attention mechanisms, mixture-of-experts designs, and novel training techniques. Custom inference chips optimized for today's transformer models might struggle with next year's architectures.

Standardized inference workloads can migrate to purpose-built chips, but experimental training techniques gravitate toward platforms with the most mature tooling and fastest iteration cycles. Google is the instructive exception: it trains and serves its own models on TPUs, but only after years of co-designing chips, the XLA compiler, and its frameworks, and it still buys Nvidia GPUs in volume for Google Cloud customers whose code targets CUDA.

CUDA's evolution also demonstrates how platforms can adapt to maintain relevance. The introduction of Tensor Cores for AI workloads, CUTLASS templates for high-performance kernel development, and specialized libraries such as TensorRT-LLM for large language models shows how incumbent platforms can absorb new categories rather than being displaced by them.

System Design: Beyond Silicon

Nvidia's current product isn't really the H100 GPU—it's the complete stack that surrounds it. This represents the culmination of lessons learned from two decades of gaming platform development, applied to enterprise AI infrastructure.

Grace-Hopper superchips combine ARM CPUs with GPUs through coherent shared memory, eliminating the complexity and performance overhead of traditional CPU-GPU architectures. Rather than assembling heterogeneous systems and optimizing data movement between components, customers get integrated modules where CPU and GPU can share data structures directly.

NVLink and NVSwitch interconnects create GPU clusters that behave like single, massive processors. Traditional multi-GPU systems rely on PCIe connections that become bottlenecks for communication-heavy workloads. On H100, NVLink provides 900 GB/s of total bidirectional bandwidth per GPU, while NVSwitch provides all-to-all connectivity among every GPU in an NVLink domain.
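The interconnect gap can be made concrete with the idealized bandwidth term of a ring all-reduce, a collective commonly used to average gradients across GPUs. This sketch assumes each GPU sends 2(N-1)/N of the payload over its link. It treats NVLink's 900 GB/s as 450 GB/s per direction and a PCIe Gen5 x16 link as roughly 63 GB/s per direction. It ignores latency, switch topology, and overlap with compute. The 10 GB gradient payload is hypothetical.

```python
def ring_allreduce_seconds(payload_gb: float, n_gpus: int, gb_per_s_each_way: float) -> float:
    """Bandwidth term of a ring all-reduce: each GPU sends (and receives)
    2*(N-1)/N of the payload. Latency and compute overlap are ignored."""
    if n_gpus < 2:
        return 0.0
    traffic_gb = 2 * (n_gpus - 1) / n_gpus * payload_gb
    return traffic_gb / gb_per_s_each_way


NVLINK_H100 = 900 / 2   # 900 GB/s total, assumed split evenly per direction
PCIE_GEN5_X16 = 63.0    # approximate usable GB/s per direction (assumption)
PAYLOAD_GB = 10.0       # hypothetical gradient volume per step

nv = ring_allreduce_seconds(PAYLOAD_GB, 8, NVLINK_H100)
pcie = ring_allreduce_seconds(PAYLOAD_GB, 8, PCIE_GEN5_X16)
print(f"8-GPU all-reduce of {PAYLOAD_GB} GB: NVLink {nv * 1000:.0f} ms, "
      f"PCIe {pcie * 1000:.0f} ms ({pcie / nv:.1f}x slower)")
```

Because this communication happens every training step, a roughly 7x gap in link bandwidth compounds across a run unless it can be hidden behind computation.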

DGX systems arrive pre-configured with networking, storage, and software optimized for AI workloads. Rather than forcing customers to become system integrators, Nvidia delivers turnkey solutions that can begin training models immediately. This approach eliminates the weeks or months typically required to optimize multi-GPU systems for specific applications.

The value proposition mirrors what GeForce Experience offered gamers: complexity abstracted away, optimal performance guaranteed, everything working together seamlessly. Enterprise customers pay premium pricing for integrated solutions that reduce time-to-productivity and eliminate integration risk.

This system-level approach also creates switching costs that extend beyond software compatibility. Organizations that standardize on DGX infrastructure invest in operational knowledge, integration with existing workflows, and support relationships that make migration to alternative platforms expensive even when compatible hardware exists.

"Virtual" Vertical: Control Without Ownership

Perhaps the most sophisticated evolution is Nvidia's approach to cloud services. Traditional vertical integration would mean building hyperscale data centers to compete directly with Amazon, Microsoft, and Google. Instead, Nvidia created a "virtual vertical" that provides platform control without the capital requirements or channel conflict of direct competition.

DGX Cloud represents this strategy in practice. Rather than building its own data centers, Nvidia partners with cloud providers like Oracle, Microsoft Azure, and others to offer managed AI infrastructure. Customers access Nvidia's software stack and pre-configured systems through a browser interface, without the complexity of deploying on-premises hardware.

The neo-cloud strategy extends this approach through partnerships with specialized providers like CoreWeave, Lambda Labs, and others. Nvidia provides financing, technical support, and preferential access to hardware in exchange for aligned go-to-market strategies and customer acquisition.

These partnerships borrow from gaming's distribution model: maintain control over the user experience while leveraging partners for reach and scale. Rather than competing directly with hyperscale clouds, Nvidia creates alternative channels that can offer differentiated experiences for AI workloads.

The virtual vertical also provides leverage in negotiations with large cloud providers. When Amazon or Google considers restricting Nvidia GPU access to promote their own chips, the existence of alternative channels constrains their ability to limit customer choice.

This approach demonstrates how platform strategies can adapt to different market structures. In gaming, Nvidia could build direct relationships with consumers through software and reference designs. In enterprise AI, customers prefer cloud services and managed infrastructure, so platform control requires different mechanisms.

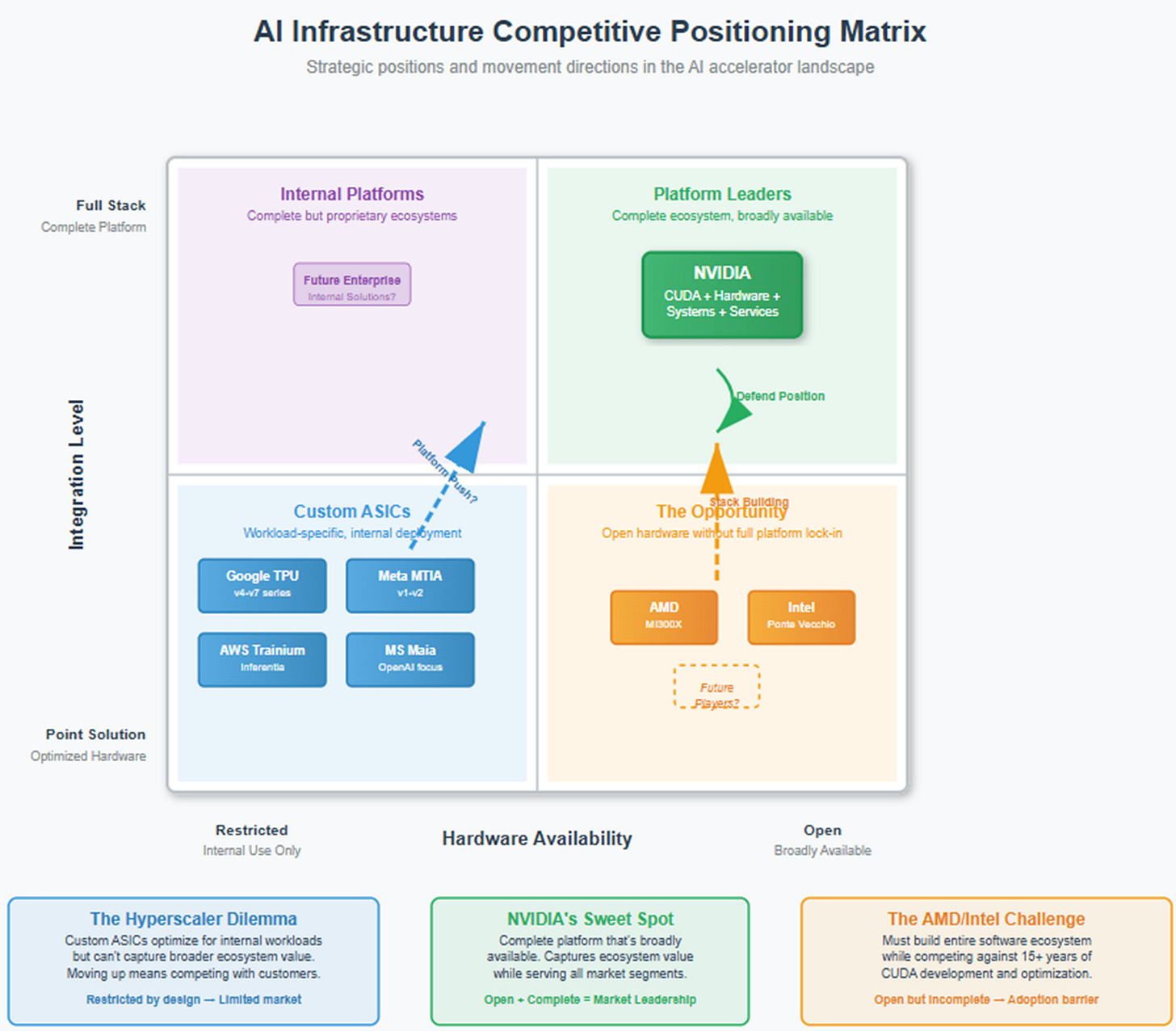

The Counter-Move: The Hyperscaler Imperative

The AI boom that made Nvidia dominant also created the conditions for its biggest challenge. When your customers are Google, Amazon, Microsoft, and Meta—companies with unlimited engineering resources and annual AI compute budgets measured in billions—dependency on a single supplier becomes an existential strategic vulnerability.

The Motive: Reclaiming Design Margin and Control

Hyperscalers aren't building custom AI chips because they enjoy hardware design. They're responding to a fundamental misalignment between their needs and Nvidia's business model. When Google trains models like PaLM or Gemini, the workloads are highly predictable and run at massive scale for months. General-purpose GPUs optimized for diverse workloads carry significant overhead for these applications.

More importantly, Nvidia's 60%+ gross margins represent value that hyperscalers believe they can capture internally. When your annual AI compute budget reaches billions of dollars, even modest improvements in price-performance justify substantial chip development investments. Amazon's CFO noted that every 10% reduction in AI compute costs could fund hundreds of millions in additional R&D spending.
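The margin argument reduces to a breakeven calculation. A custom chip pays off once its per-unit saving against merchant pricing has covered the fixed design cost (NRE). Every number in the sketch below is a hypothetical placeholder chosen only to show the shape of the math: a $1B program cost, a $30,000 merchant price, and a $15,000 all-in internal cost that includes any design partner's margin.

```python
import math


def breakeven_units(nre_usd: float, merchant_price_usd: float,
                    internal_unit_cost_usd: float) -> float:
    """Units a custom chip must replace before per-unit savings repay its
    fixed design cost (NRE). Returns math.inf if there is no per-unit saving."""
    saving = merchant_price_usd - internal_unit_cost_usd
    if saving <= 0:
        return math.inf
    return float(math.ceil(nre_usd / saving))


# Hypothetical placeholders, not reported figures.
NRE = 1_000_000_000        # multi-generation chip program
MERCHANT_PRICE = 30_000    # price paid for a comparable merchant GPU
INTERNAL_COST = 15_000     # silicon, HBM, packaging, design-partner margin

units = breakeven_units(NRE, MERCHANT_PRICE, INTERNAL_COST)
print(f"Breakeven after about {units:,.0f} accelerators")
```

Under these assumptions the program breaks even after about 67,000 units. That volume is trivial for a hyperscaler and out of reach for most other buyers, which is why the same math works only at hyperscale.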

Control matters as much as cost. Dependence on Nvidia means accepting their product roadmap, supply allocation decisions, and pricing strategies. When ChatGPT's success created unprecedented demand for H100 GPUs, OpenAI and Microsoft faced supply constraints that limited their ability to scale services, despite willingness to pay premium pricing.

Custom silicon offers control over optimization priorities. Google's TPUs are co-designed with the XLA compiler that TensorFlow and JAX target, and with Google's serving infrastructure, achieving better efficiency for these workloads than general-purpose alternatives. Amazon's Trainium chips target the cost-sensitive training jobs that AWS customers actually run, rather than the flagship benchmarks that dominate hardware marketing.

The hyperscaler roadmap reveals the scope of this investment. Google's TPU program spans seven generations, from the original inference-only TPU to the seventh-generation Ironwood. Amazon develops separate Trainium (training) and Inferentia (inference) product lines with regular cadence updates. Microsoft's Maia chips target Azure's AI services, including workloads from its OpenAI partnership. Meta's MTIA focuses on the recommendation and content filtering workloads that comprise the majority of its inference compute.

The Means: Silicon Plus Abstraction

The hyperscaler strategy operates on two levels: custom accelerators for specific workloads, and software abstraction that makes hardware choice invisible to applications.

TPUs, Trainium, MTIA, and Maia represent the silicon component—chips optimized for each company's dominant use cases. But the more strategically important development is compiler and serving abstraction that enables the same software to run efficiently across different hardware backends.

Google's XLA (Accelerated Linear Algebra) compiler can generate optimized code for TPUs, GPUs, and CPUs from the same high-level computation graphs. Amazon's Neuron SDK provides similar capabilities for Trainium and Inferentia chips. Meta's compiler work focuses on automatically mapping PyTorch models to MTIA hardware.

The most significant development is PyTorch's evolution toward backend-agnostic execution. Triton, originally developed by OpenAI, lets developers write high-performance kernels in Python that its compiler lowers to different GPU architectures. torch.compile can optimize model execution for the available accelerator with a one-line change to existing code.

This abstraction layer represents the most serious long-term threat to CUDA's dominance. If PyTorch can deliver 90%+ of CUDA performance on alternative hardware through automatic compilation, the switching costs that maintain platform loyalty disappear for many applications.
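"90%+ parity" only matters if it holds across the workloads people actually run, not just on average. The sketch below compares a candidate backend's throughput to a CUDA baseline workload by workload. It reports the geometric mean, the share of workloads that clear the threshold, and the worst case. The workload names and throughput numbers are hypothetical.

```python
from statistics import geometric_mean
from typing import Dict


def parity_report(baseline: Dict[str, float], candidate: Dict[str, float],
                  threshold: float = 0.9) -> dict:
    """Compare candidate-backend throughput to a baseline, workload by workload."""
    ratios = {w: candidate[w] / baseline[w] for w in baseline}
    passing = sum(1 for r in ratios.values() if r >= threshold)
    return {
        "ratios": ratios,
        "geomean": geometric_mean(list(ratios.values())),
        "share_passing": passing / len(ratios),
        "worst": min(ratios, key=ratios.get),
    }


# Hypothetical throughputs (higher is better); baseline = CUDA on GPUs.
cuda = {"llm_decode": 100.0, "llm_prefill": 100.0,
        "moe_training": 100.0, "new_attention_variant": 100.0}
alt = {"llm_decode": 95.0, "llm_prefill": 92.0,
       "moe_training": 70.0, "new_attention_variant": 55.0}

report = parity_report(cuda, alt)
print(f"geomean {report['geomean']:.2f}, "
      f"{report['share_passing']:.0%} of workloads at >= 90%, "
      f"worst: {report['worst']}")
```

In this made-up example the inference workloads clear 90% and the training-style workloads do not. A respectable-looking geometric mean (about 0.76) hides exactly the gap that matters for switching decisions.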

Progress varies by workload type. Inference applications with stable computational patterns are easier to optimize automatically. Training workloads with dynamic computation graphs and frequent architecture changes remain more challenging for backend-agnostic systems.
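The asymmetry shows up in how tracing compilers behave. Compilers such as XLA specialize compiled code to the shapes and structure they observe and compile again when those change. The toy below models only that caching behavior, with hypothetical architecture names and shape buckets. It does not call any real compiler API.

```python
from typing import Dict, Tuple

Shape = Tuple[int, ...]


class ShapeSpecializingCompiler:
    """Toy model of a tracing compiler's cache: one compiled artifact per
    (architecture, input shape) key, and a fresh compile on every miss."""

    def __init__(self) -> None:
        self.cache: Dict[Tuple[str, Shape], str] = {}
        self.compilations = 0

    def run(self, arch: str, shape: Shape) -> str:
        key = (arch, shape)
        if key not in self.cache:
            self.compilations += 1  # stands in for an expensive lowering pass
            self.cache[key] = f"{arch}@{'x'.join(map(str, shape))}"
        return self.cache[key]


# Serving: one fixed architecture, requests padded into a few shape buckets.
serving = ShapeSpecializingCompiler()
for i in range(1_000):
    batch = (1, 8, 32)[i % 3]
    seq_len = (512, 2048)[i % 2]
    serving.run("prod-model", (batch, seq_len))

# Research: 20 hypothetical architecture variants, each tried at 3 shapes.
research = ShapeSpecializingCompiler()
for variant in range(20):
    for seq_len in (1024, 4096, 8192):
        research.run(f"variant-{variant}", (8, seq_len))

print(f"serving: {serving.compilations} compilations for 1,000 requests")
print(f"research: {research.compilations} compilations for 60 runs")
```

A stable serving workload with bucketed shapes pays for six compilations across 1,000 requests. Twenty architecture experiments pay for sixty, and each one is a fresh chance to hit an unsupported operation or a slow path on a young backend.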

The Catch: Execution Risk and Supply Constraints

Building competitive AI chips requires more than engineering talent and financial resources. Success depends on executing across the same supply chain constraints that limit Nvidia's competitors.

First-generation hyperscaler chips often underperform expectations. Meta's initial MTIA chip reportedly delivered insufficient speedup over GPUs and was redesigned significantly for the second generation. ByteDance's partnership with Broadcom for custom AI silicon was reportedly canceled after spending billions on development that failed to meet performance targets.

Supply chain execution presents another challenge. Custom chips require access to advanced process nodes, HBM memory allocation, and sophisticated packaging technologies—the same scarce inputs that Nvidia depends on. TSMC's 3nm capacity is limited, and allocation is typically negotiated years in advance. HBM supply from SK Hynix and Samsung is constrained, with production capacity booked by GPU manufacturers.

Even perfect chip design means nothing without access to manufacturing capacity when needed. Intel's Ponte Vecchio GPU faced significant delays partly due to advanced packaging constraints. AMD's MI300X launch was reportedly limited by CoWoS capacity availability.

The hyperscaler advantage lies in patient capital and vertical integration. These companies can absorb multiple development cycles and supply chain challenges because they're optimizing for long-term strategic control rather than quarterly revenue targets. They can also guarantee volume commitments that justify preferential treatment from suppliers.

But the timeline for achieving competitive performance and cost structure is measured in multiple chip generations, not single products. Google's TPU program required five generations before achieving clear advantages over GPUs for their specific workloads.

Workload Migration Patterns: Inference First, Training Later

The evidence suggests that inference workloads standardize and migrate to custom silicon faster than training applications. Serving established models like GPT-3.5 or Llama involves predictable computation patterns that can be optimized effectively. Training new architectures requires maximum flexibility and fastest iteration cycles.

Amazon's success with Inferentia chips for cost-sensitive inference workloads demonstrates this pattern. AWS can offer instances powered by custom chips at lower prices than GPU alternatives, attracting customers for whom cost matters more than raw performance.
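The price logic behind such instances is easiest to state as cost per million tokens served. The hourly prices and throughputs below are hypothetical placeholders, not AWS list prices or measured results. The point is that a slower chip can still win on cost if its instance price falls faster than its throughput.

```python
def cost_per_million_tokens(hourly_price_usd: float, tokens_per_s: float,
                            utilization: float = 1.0) -> float:
    """Serving cost per 1M tokens for an instance at a given sustained throughput."""
    tokens_per_hour = tokens_per_s * 3600 * utilization
    return hourly_price_usd / tokens_per_hour * 1_000_000


# Hypothetical instance prices and throughputs, not published figures.
gpu = cost_per_million_tokens(hourly_price_usd=10.0, tokens_per_s=2_000)
custom = cost_per_million_tokens(hourly_price_usd=6.0, tokens_per_s=1_500)
print(f"GPU instance:    ${gpu:.2f} per 1M tokens")
print(f"custom instance: ${custom:.2f} per 1M tokens")
```

Utilization matters as much as list price. At 50% utilization the same instance costs twice as much per token, which is one reason steady, predictable inference traffic suits dedicated chips.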

Google's use of TPUs shows how the same organization can assign different hardware to different purposes. Its custom chips train and serve Google's own models, where the company controls the entire stack, while the Nvidia GPUs it buys largely serve Google Cloud customers whose workloads are built around CUDA.

Meta's MTIA focus on recommendation and content filtering reflects similar logic. These workloads represent the majority of Meta's inference compute and follow predictable patterns that custom optimization can address effectively.

Training workloads remain harder to move onto custom silicon. Because model architectures keep shifting, a chip tuned for today's transformers risks a poor fit with next year's designs.

This explains why even companies with successful custom inference chips continue purchasing GPUs for training applications. The value of faster iteration and broader compatibility often exceeds the cost savings from optimized hardware.

The Moat, Reframed

The conventional wisdom about Nvidia's competitive position centers on CUDA's software lock-in, treating it as the primary barrier to competition. This analysis isn't wrong, but it misses the full picture of how platform advantages actually work in practice.

Nvidia's real moat combines software ecosystem, supply chain orchestration, and system integration capabilities. CUDA provides the software foundation, but success requires controlling scarce manufacturing inputs and delivering complete solutions that work reliably from day one.

Software Plus Supply Orchestration

Consider the challenges facing AMD's CDNA-based Instinct data center accelerators. AMD has invested heavily in the ROCm software stack to provide CUDA compatibility and native development tools. Recent versions achieve reasonable performance parity for many workloads, and some benchmarks show competitive results.

But software compatibility doesn't guarantee market success when hardware availability becomes the constraint. AMD's MI300X GPUs use the same HBM3 memory and CoWoS packaging as Nvidia's H100, competing for the same limited manufacturing capacity. TSMC allocates advanced packaging based on long-term commitments and volume guarantees that favor established customers.

When supply is constrained, customers default to vendors who can guarantee delivery timelines. Nvidia's relationships with memory suppliers and packaging foundries, built over years of consistent volume, provide allocation advantages that new entrants struggle to replicate.
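A toy model shows why allocation rules favor incumbents. In this model a packaging supplier honors long-term commitments first, scaling them down pro rata only if it is short. It then spreads any leftover capacity across spot requests. This rule is an assumption for illustration, not TSMC's actual allocation policy, and all quantities are hypothetical.

```python
from __future__ import annotations


def allocate(capacity: float,
             commitments: dict[str, float],
             spot_requests: dict[str, float]) -> dict[str, float]:
    """Toy allocation rule (hypothetical): commitments first, then spot demand.

    Each tier is served pro rata when capacity cannot cover the whole tier.
    """
    alloc = {name: 0.0 for name in {**commitments, **spot_requests}}

    # Tier 1: long-term commitments, scaled down only if capacity is short.
    committed = sum(commitments.values())
    if committed > 0:
        fill = min(1.0, capacity / committed)
        for name, qty in commitments.items():
            alloc[name] += qty * fill

    # Tier 2: whatever is left goes to spot requests, again pro rata.
    remaining = capacity - sum(alloc.values())
    spot = sum(spot_requests.values())
    if remaining > 0 and spot > 0:
        fill = min(1.0, remaining / spot)
        for name, qty in spot_requests.items():
            alloc[name] += qty * fill

    return alloc


# Hypothetical units of packaging capacity for one quarter.
result = allocate(
    capacity=100,
    commitments={"incumbent": 70, "challenger": 10},
    spot_requests={"challenger": 40, "newcomer": 30},
)
for name, units in sorted(result.items()):
    print(f"{name:>10}: {units:6.2f}")
```

Under this rule the incumbent receives its full 70 units. The challenger committed only 10, so it ends up with about 21 of the 50 units it wanted, and the newcomer gets under 9 of its 30. Equal chip designs would not change that outcome.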

The same dynamic affects hyperscaler custom chips. Google's TPU v5 and Amazon's Trainium2 require HBM and packaging technologies similar to those in Nvidia's offerings. Success depends not just on chip design quality, but on securing manufacturing capacity to produce them at scale.

System Integration as Competitive Advantage

The most successful CUDA alternatives come from companies that control entire computing stacks rather than just chip designs. Google's TPU success stems from optimization across hardware, the TensorFlow framework, and serving infrastructure. Amazon's Trainium adoption benefits from tight integration with SageMaker and other AWS services.

Apple's approach to custom silicon demonstrates this principle. The M-series processors achieve excellent performance not just through chip design, but through optimization across hardware, operating system, and application software. This vertical integration creates user experiences that competing products struggle to match with general-purpose components.

Traditional hardware vendors face more difficult challenges because they must rely on third-party software ecosystems. Intel's GPU efforts with Arc and Ponte Vecchio have struggled partly because Intel lacks control over the software stacks that determine user experience.

This explains why AMD's most promising opportunities exist in markets where system-level integration matters less. High-performance computing applications that optimize performance manually can take advantage of AMD's hardware capabilities without depending on automatic optimization tools.

Platform Durability and Evolution

Platform advantages prove remarkably durable because they can adapt to new challenges rather than being displaced by them. Nvidia's response to custom chip competition follows patterns established in gaming markets: embrace emerging standards where beneficial, while extending platform capabilities to maintain differentiation.

The introduction of the Transformer Engine and FP8 precision for large language models shows how incumbents can absorb new application categories. Rather than waiting for industry standards to emerge, Nvidia ships hardware optimizations for emerging workloads and provides software tools to exploit them.

Triton, the open-source kernel language originally developed at OpenAI, illustrates another adaptation path. By making high-performance kernel development more accessible on GPUs, tools like Triton reduce the advantage that custom chip vendors gain from workload-specific optimization. If developers can easily write optimized code for GPUs, the performance benefits from purpose-built hardware diminish.

This dynamic creates an interesting paradox: the same abstraction layers that threaten CUDA lock-in can strengthen incumbent platforms when those layers mature first on incumbent hardware. Triton kernels can in principle target multiple backends, but they're optimized and tested primarily on Nvidia hardware.

Scenarios: Mapping the Range of Outcomes

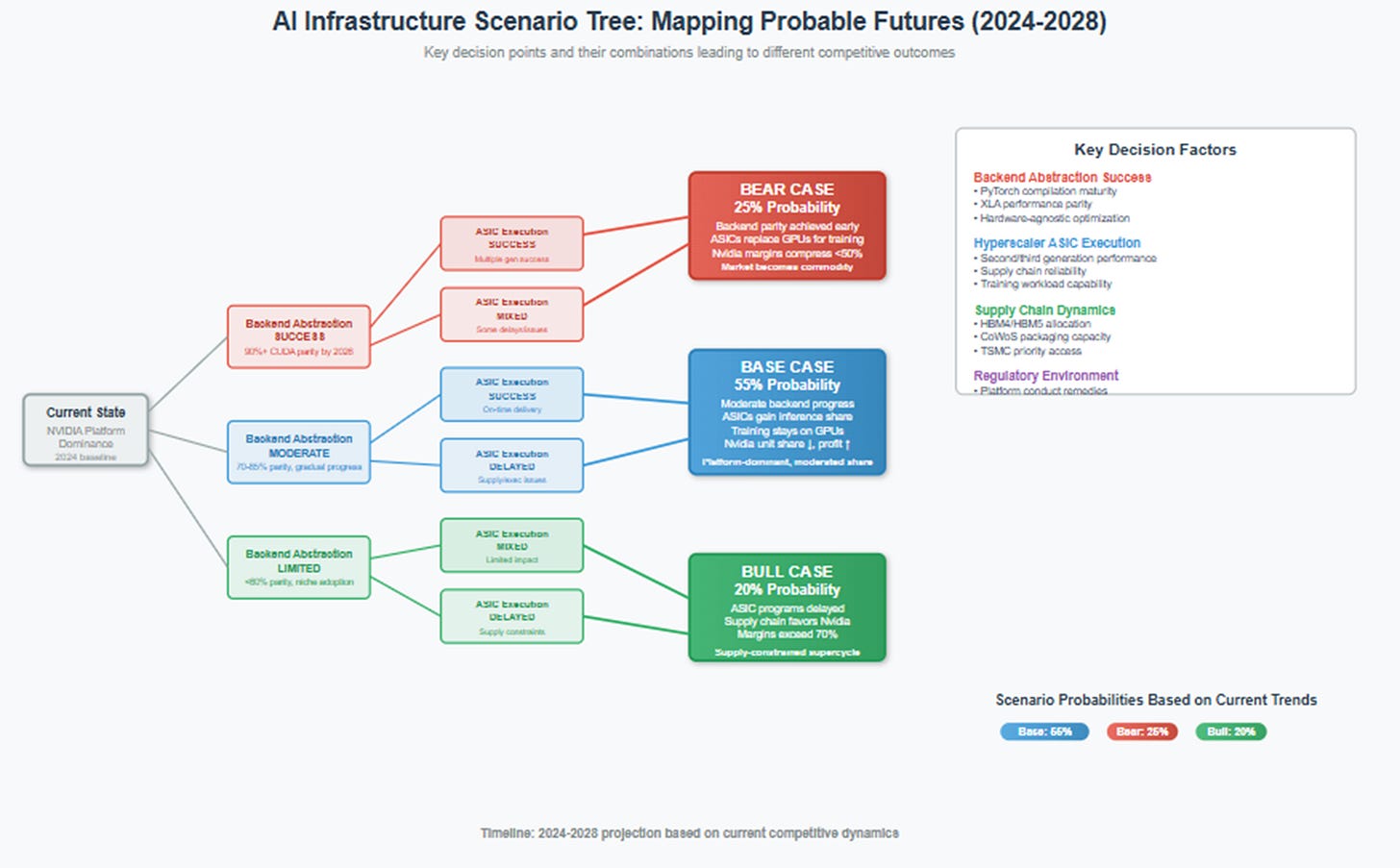

Three scenarios capture the range of plausible outcomes as these competitive dynamics evolve through 2028. Each represents a different balance between platform gravity and hyperscaler imperative, with distinct implications for market structure and profit distribution.

Base Case: Platform-Dominant, Moderated Share (55% probability)

This scenario assumes that CUDA ecosystem advantages persist for new and rapidly-evolving workloads, while custom ASICs gain meaningful share in standardized inference and some training applications. Cross-backend abstraction improves for serving established models but continues to lag for cutting-edge research and development.

Nvidia's unit market share drifts down from current levels as hyperscaler ASICs handle increasing portions of internal workloads. Google deploys TPU v6 and v7 for large-scale inference and standard training tasks. Amazon's Trainium3 captures cost-sensitive training jobs on AWS. Microsoft's Maia chips reduce OpenAI's GPU requirements for established model serving.

But profit concentration persists through product mix evolution. Nvidia maintains pricing power in high-memory configurations using CoWoS-L packaging and HBM4 memory that enable frontier model training. Software attach rates increase as platform monetization expands beyond hardware sales to include runtimes, development tools, and managed services.

Total AI compute demand grows faster than custom chip deployment, allowing absolute revenue and margin growth despite market share erosion. Nvidia remains the default choice for frontier training, heterogeneous third-party workloads, and applications where time-to-SOTA matters more than cost optimization.
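The base case claims revenue can grow while share erodes. That follows from the identity revenue = share × market size. The sketch uses hypothetical starting values: a market of 100 units growing 30% a year, and a share that starts at 80% and loses 5 points a year. These are illustrations, not forecasts.

```python
from __future__ import annotations


def revenue_path(start_market: float, market_growth: float,
                 start_share: float, share_change_per_year: float,
                 years: int) -> list[float]:
    """Revenue (share x market) for year 0..years under linear share drift."""
    path = []
    for year in range(years + 1):
        market = start_market * (1 + market_growth) ** year
        share = max(0.0, start_share + share_change_per_year * year)
        path.append(market * share)
    return path


# Hypothetical inputs, not forecasts.
path = revenue_path(start_market=100, market_growth=0.30,
                    start_share=0.80, share_change_per_year=-0.05, years=3)
print([round(r, 1) for r in path])
```

Revenue keeps rising as long as (1 + market growth) × (next year's share / this year's share) stays above 1. With these inputs, revenue climbs every year despite a 15-point share loss.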

AMD consolidates as the credible second source for customers seeking alternatives to Nvidia dominance. Intel finds success as an enabler through foundry services, advanced packaging, and photonics rather than head-to-head accelerator competition.

Key indicators: Hyperscaler ASICs reach 20-30% of inference workloads by 2027 but remain under 15% of training. Nvidia's average selling prices increase through mix shift toward premium configurations. Software and services become 10-15% of data center revenue.

Bear Case: Backend Parity Arrives Early (25% probability)

This scenario assumes that compiler and serving abstraction achieves 90%+ performance parity with CUDA across mainstream training and inference workloads by 2026-2027. PyTorch's native compilation, improved XLA, and hardware-agnostic optimization tools eliminate the performance penalties that maintain platform lock-in.

Hyperscaler second and third-generation ASICs become "good enough" for most applications, including new model development that previously required cutting-edge GPUs. Google's TPU v7 handles transformer architecture variants effectively. Amazon's Trainium3 achieves competitive training performance for models up to moderate size. Meta's MTIA v3 expands from inference to training applications.

Nvidia's pricing umbrella collapses first in inference markets, then extends to training as custom chips demonstrate comparable capabilities. Cloud providers offer instances powered by internal chips at significantly lower prices than GPU alternatives, forcing Nvidia to reduce pricing to maintain competitiveness.

Regulatory pressure accelerates this transition. European authorities impose conduct remedies limiting software bundling practices. Requirements for hardware-agnostic neutrality in cloud services prevent platform optimization that favors specific vendors.

Market dynamics shift toward cost-performance optimization rather than absolute performance leadership. Customers prioritize predictable pricing and vendor independence over access to cutting-edge capabilities. The AI infrastructure market begins resembling traditional server markets with multiple competitive suppliers.

Key indicators: Major frameworks achieve <10% performance delta vs CUDA by 2026. Hyperscaler training ASICs replace GPU capacity for new model development. Nvidia's data center gross margins compress below 50%. Regulatory investigations target platform practices.

Bull Case: Supply-Constrained Supercycle (20% probability)

This scenario assumes that HBM memory and advanced packaging constraints affect Nvidia's competitors more severely than the incumbent. Manufacturing capacity limitations delay hyperscaler chip programs while frontier model complexity outpaces custom ASIC development capabilities.

One or two major hyperscaler training chip projects experience significant delays due to supply chain constraints or execution challenges. Google's TPU v7 launch slips by 12-18 months due to CoWoS-L packaging availability. Amazon's Trainium3 faces HBM4 allocation issues that limit production volumes. Intel's Jaguar Shores program encounters foundry capacity constraints.

Meanwhile, frontier AI models continue growing in complexity faster than custom chips can adapt. GPT-5 and successor architectures require capabilities that fixed-function accelerators struggle to support efficiently. Research into multimodal models, reasoning systems, and novel training techniques favors platforms with maximum flexibility.

Nvidia leverages its supply chain relationships to secure preferential access to cutting-edge memory and packaging technologies. H200 and Blackwell systems command premium pricing due to scarcity and superior capabilities. DGX Cloud expansion accelerates as enterprise customers prefer managed services over internal infrastructure.

Software platform monetization accelerates through expansion into development tools, runtime services, and managed AI infrastructure. Customers pay premium rates for integrated solutions that guarantee performance and availability.

The result is a temporary return to near-monopoly conditions as supply constraints prevent effective competition. Nvidia's financial performance reaches new highs through a combination of volume growth and pricing power.

Key indicators: Major ASIC programs slip by 6+ months due to supply issues. HBM4 allocation favors established GPU vendors. Nvidia's data center gross margins exceed 70%. DGX Cloud captures significant enterprise market share.
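The scenario probabilities and the numeric key indicators above can be tracked as a simple scorecard. The sketch encodes only the quantitative indicators and leaves out qualitative ones such as regulatory investigations or DGX Cloud share. It also ignores the dates attached to each indicator. Each threshold is applied exactly as stated in the text, and each scenario is scored by the fraction of its indicators that hold. The observation values in the example are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Observations:
    asic_inference_share: float  # fraction of inference on hyperscaler ASICs
    asic_training_share: float   # fraction of training on hyperscaler ASICs
    software_share: float        # software and services share of DC revenue
    framework_perf_delta: float  # fractional gap vs CUDA (0.08 = 8% slower)
    dc_gross_margin: float       # Nvidia data center gross margin
    asic_slip_months: float      # worst slip among major ASIC programs


# Scenario -> (probability, [(indicator, predicate), ...]) as stated in the text.
SCENARIOS = {
    "base": (0.55, [
        ("ASICs run 20-30% of inference", lambda o: 0.20 <= o.asic_inference_share <= 0.30),
        ("ASICs run under 15% of training", lambda o: o.asic_training_share < 0.15),
        ("software is 10-15% of DC revenue", lambda o: 0.10 <= o.software_share <= 0.15),
    ]),
    "bear": (0.25, [
        ("framework gap under 10% vs CUDA", lambda o: o.framework_perf_delta < 0.10),
        ("DC gross margin below 50%", lambda o: o.dc_gross_margin < 0.50),
    ]),
    "bull": (0.20, [
        ("a major ASIC program slips 6+ months", lambda o: o.asic_slip_months >= 6),
        ("DC gross margin above 70%", lambda o: o.dc_gross_margin > 0.70),
    ]),
}

# The three scenario probabilities should cover the outcome space.
assert abs(sum(p for p, _ in SCENARIOS.values()) - 1.0) < 1e-9


def scorecard(obs: Observations) -> dict[str, float]:
    """Fraction of each scenario's indicators currently satisfied."""
    return {
        name: sum(check(obs) for _, check in checks) / len(checks)
        for name, (_, checks) in SCENARIOS.items()
    }


# Hypothetical readings, not actual data.
obs = Observations(asic_inference_share=0.25, asic_training_share=0.10,
                   software_share=0.12, framework_perf_delta=0.20,
                   dc_gross_margin=0.72, asic_slip_months=0)
print(scorecard(obs))
```

This example reading satisfies all the base-case indicators plus the bull case's margin threshold. A mixed reading like that is informative in its own right: it suggests the outcome is drifting between scenarios rather than settling into one.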

Implications by Player: Strategic Responses to Platform Competition

The unfolding competition between platform integration and vertical customization requires different strategies depending on market position and capabilities. Success will depend on understanding which forces prove stronger and positioning accordingly.

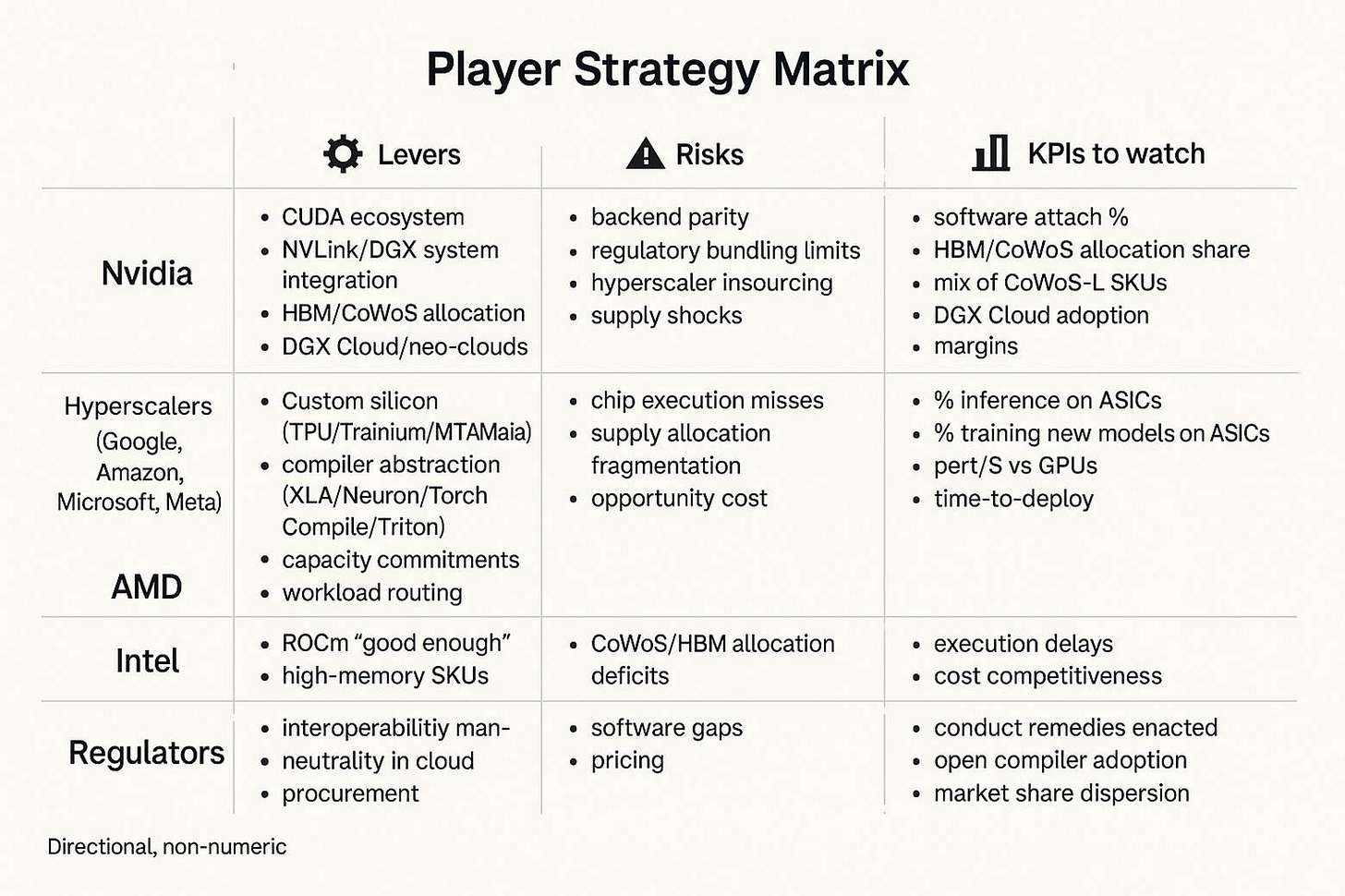

Nvidia: Defending Platform Advantages Through Evolution

Nvidia should tier its product line explicitly by packaging technology and memory configuration to maximize value capture across different market segments. CoWoS-L "ultra" variants targeting premium training applications can command higher margins through superior memory capacity and bandwidth. CoWoS-S configurations serve volume markets where price-performance optimization matters more than absolute capabilities.

Software platform expansion becomes critical for maintaining differentiation as hardware performance gaps narrow. Investment in development tools, runtime optimization, and managed services creates switching costs beyond pure API compatibility. The company should deepen its support for Triton and similar tools that make GPU optimization more accessible while maintaining platform advantages.

The "virtual vertical" strategy through DGX Cloud and neo-cloud partnerships provides demand stability without direct cloud competition that would alienate hyperscaler customers. This approach should expand into additional regions and specialized markets while maintaining the partnership model that avoids channel conflict.

Supply chain orchestration requires continued investment in long-term relationships with memory suppliers and packaging foundries. Early allocation of HBM4, HBM5, and advanced CoWoS capacity will determine competitive positioning as much as chip design quality.

Most importantly, Nvidia must resist the temptation to compete primarily on cost. Platform value lies in capabilities, integration, and time-to-market rather than pure price-performance metrics. Margin preservation through premium positioning enables the R&D investment required to maintain technological leadership.

Hyperscalers: Coordinated Competition Through Standards and Scale

Google, Amazon, Microsoft, and Meta should coordinate on compiler and runtime standards that reduce switching costs across vendors while maintaining competitive advantages in specific applications. Investment in PyTorch compilation, XLA improvements, and hardware-agnostic optimization tools benefits all participants by weakening platform lock-in.

Custom chip deployment should follow a barbell strategy: use internal ASICs where workloads are stable and predictable, while maintaining GPU capacity for new models, partner ecosystems, and rapidly-evolving applications. This approach maximizes cost savings without sacrificing flexibility for unknown future requirements.
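The barbell rule can be written as a placement policy. The workload attributes and the rule itself are assumptions that paraphrase the text. Any one of three conditions sends a workload to GPUs: new-model development, a partner (third-party) ecosystem, or a still-changing architecture. Everything else is a candidate for internal ASICs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    new_model_development: bool  # training or iterating on a new architecture
    partner_ecosystem: bool      # serves third-party or partner models and code
    architecture_stable: bool    # unlikely to change before the ASIC pays back


def place(w: Workload) -> str:
    """Barbell placement: ASICs for stable first-party work, GPUs for the rest."""
    if w.new_model_development or w.partner_ecosystem or not w.architecture_stable:
        return "gpu"
    return "asic"


# Hypothetical workload mix.
fleet = [
    Workload("recsys-ranking-serving", False, False, True),
    Workload("frontier-pretraining", True, False, False),
    Workload("partner-model-hosting", False, True, True),
    Workload("llm-serving-stable", False, False, True),
]
for w in fleet:
    print(f"{w.name:<24} -> {place(w)}")
```

The rule is deliberately blunt. It leans toward GPUs whenever any flexibility requirement appears, consistent with the argument that the value of faster iteration often exceeds the savings from optimized hardware.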

Supply chain coordination could provide leverage against incumbent advantages. Joint commitments for HBM memory or CoWoS capacity allocation would enable more predictable custom chip production while reducing dependence on favorable allocation from suppliers.

The most important strategic decision is timing. Early-generation custom chips should target applications where performance advantages are clear and sustainable. Premature deployment in competitive markets risks reputation damage that affects adoption of future generations.

AMD: Second Source Positioning in Underserved Markets

AMD's highest-leverage opportunity lies in sovereign cloud and enterprise customers seeking credible alternatives to single-vendor dependence. Government requirements for supply chain diversity create demand that prioritizes vendor competition over pure performance optimization.

High-memory configurations using HBM3E and future HBM4 technology can differentiate AMD offerings for training applications that require large model capacity. These workloads often benefit more from memory size than from raw computational performance, playing to AMD's strengths in package design.

ROCm software development should focus on "good enough" compatibility for mainstream workloads rather than theoretical performance parity across all applications. Achieving 85-90% of CUDA performance for popular frameworks may be sufficient to win price-sensitive customers.
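Whether 85-90% of CUDA performance is "good enough" comes down to performance per dollar. Suppose an alternative delivers a fraction p of the incumbent's throughput. It matches the incumbent's value at a relative price of p, and it beats that value by a margin m only at a price of p / (1 + m) or below. The 20% margin in this sketch is a hypothetical allowance for switching costs, not a figure from the text.

```python
def max_relative_price(relative_performance: float, required_advantage: float = 0.0) -> float:
    """Highest price, as a fraction of the incumbent's, at which the alternative
    still offers `required_advantage` better performance per dollar."""
    if relative_performance <= 0:
        raise ValueError("relative performance must be positive")
    return relative_performance / (1.0 + required_advantage)


for perf in (0.85, 0.90):
    parity = max_relative_price(perf)
    with_margin = max_relative_price(perf, required_advantage=0.20)
    print(f"{perf:.0%} of CUDA perf: price <= {parity:.1%} to match, "
          f"<= {with_margin:.1%} to be 20% better per dollar")
```

At 85% performance, an alternative would need roughly a 29% discount to offer a 20% value edge. At 90% performance, a 25% discount suffices.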

Delivery reliability becomes a key differentiator. If AMD can guarantee supply availability while Nvidia faces allocation constraints, enterprise customers may accept modest performance trade-offs for procurement predictability.

Partnership with hyperscalers on standards development provides industry influence without requiring successful internal chip programs. Contributing to open-source alternatives to CUDA benefits AMD's competitive position regardless of custom chip adoption rates.

Intel: Enablement Over Direct Competition

Intel's maximum upside lies in foundry services, advanced packaging, and photonics rather than head-to-head accelerator competition. Success as an arms merchant for AI infrastructure offers better risk-adjusted returns than attempting to displace incumbent GPU vendors.

IFS (Intel Foundry Services) should target hyperscaler custom chip programs with competitive process technology and integrated packaging capabilities. Winning Amazon, Microsoft, or Meta as foundry customers provides diversified exposure to AI growth without platform competition risk.

Advanced packaging development through joint ventures or partnerships could position Intel as the alternative to TSMC's CoWoS monopoly. Multiple suppliers for critical packaging technologies would benefit all non-TSMC customers and create new revenue opportunities.

Photonics integration represents a potential leapfrog opportunity if optical interconnects become critical for AI cluster scaling. Intel's silicon photonics capabilities could enable new architectures that sidestep traditional electrical bandwidth limitations.

Jaguar Shores and other direct GPU competition should be viewed as technology demonstration and customer requirement fulfillment rather than primary growth strategies. Success in niche applications validates broader technology capabilities while generating customer relationships that benefit foundry and enabling businesses.

Regulators and Sovereign Entities: Balancing Innovation and Competition

Regulatory authorities should expect conduct remedies targeting software bundling and market access practices rather than structural breakups that could undermine innovation incentives. European authorities will likely lead with investigations into platform practices and requirements for hardware-agnostic neutrality in cloud services.

Sovereignty budgets will create non-hyperscaler demand for second sources regardless of pure economic optimization. Government requirements for supply chain diversity benefit all non-incumbent vendors and should be factored into procurement decisions.

International coordination on AI infrastructure standards could prevent fragmentation while promoting competition. Common approaches to hardware abstraction and workload portability benefit smaller vendors and reduce platform lock-in effects.

The most effective interventions will focus on interoperability and access rather than performance requirements. Mandating API compatibility or requiring fair licensing of development tools can promote competition without prescribing specific technical solutions.

What Would Change My Mind: Falsifiable Predictions

Five specific developments would indicate fundamental shifts in competitive dynamics requiring strategy revision:

Backend Performance Parity: Reproducible demonstrations of 90%+ CUDA performance for mainstream training workloads, not just inference, using vendor-independent compilation tools integrated into popular frameworks like PyTorch. This must include dynamic models with frequent architecture changes, not just static deployment scenarios.

ASIC Training Success: Hyperscaler second or third-generation custom chips successfully replacing GPU-based training for new model development at meaningful scale. Success means handling novel architectures and experimental techniques, not just reproducing established training runs.

Supply Chain Disruption: Non-incumbent vendors securing HBM4 and CoWoS-L allocation with volume shipments meeting announced timelines. This requires demonstrating ability to compete for scarce manufacturing inputs, not just chip design capabilities.

Regulatory Conduct Changes: Formal regulatory remedies that meaningfully limit platform software attach rates or require hardware-agnostic neutrality in managed AI services. Investigation announcements matter less than implemented changes that affect business practices.

Channel Strategy Shifts: Platform incumbent materially ramping owned cloud capacity beyond current partnership model, indicating breakdown in virtual vertical strategy or defensive response to pricing pressure. This would signal transition from partnership to direct competition with customers.
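A "90%+ of CUDA performance" claim needs an aggregation rule. One reasonable choice, assumed here rather than taken from the text, is the geometric mean of per-workload throughput ratios (alternative / CUDA baseline). The score is computed separately for training and inference, so strong inference results cannot mask weak training ones. Workload names and ratios below are hypothetical.

```python
from __future__ import annotations

import math


def parity_score(ratios: dict[str, float]) -> float:
    """Geometric mean of per-workload throughput ratios (alternative / CUDA)."""
    if not ratios or any(r <= 0 for r in ratios.values()):
        raise ValueError("need at least one positive ratio")
    return math.exp(sum(math.log(r) for r in ratios.values()) / len(ratios))


def meets_parity(training: dict[str, float], inference: dict[str, float],
                 threshold: float = 0.90) -> bool:
    """Parity requires both training and inference to clear the bar."""
    return parity_score(training) >= threshold and parity_score(inference) >= threshold


# Hypothetical benchmark ratios, not measured results.
training = {"dense-llm": 0.92, "moe-llm": 0.78, "vision": 0.95}
inference = {"llm-serving": 0.97, "recsys": 1.02}

print(f"training parity:  {parity_score(training):.2f}")
print(f"inference parity: {parity_score(inference):.2f}")
print("meets 90% bar:", meets_parity(training, inference))
```

In this example inference clears 90% comfortably, but the mixture-of-experts result drags training below the bar. That is the pattern the first development on the list is meant to catch.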

The Business of Scarcity: Why Margins Persist in Platform Competition

The dynamics of AI infrastructure competition demonstrate how "units diversify, profit concentrates" remains viable even as market share fragments across multiple players. Understanding this requires examining how scarcity operates at different levels of the stack.

Note: Chart is for illustration purposes; actual numbers may vary from estimates.

Memory-Rich Packages and Time-to-SOTA Pricing

As AI models grow larger and more complex, memory capacity becomes a hard constraint that determines what's possible rather than just what's efficient. GPT-4 class models require hundreds of gigabytes of fast memory for training. Future models may require terabytes.

HBM4 packages with 8-12 memory stacks enable capabilities that cheaper alternatives simply cannot match. A customer training a frontier language model cannot substitute multiple smaller systems for a single high-memory configuration without fundamental changes to model architecture and training procedures.
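A commonly cited rule of thumb shows why memory becomes a hard constraint. Mixed-precision training with the Adam optimizer keeps about 16 bytes of model state per parameter. That total comes from fp16 weights and gradients at 2 bytes each, plus fp32 master weights and two Adam moments at 4 bytes each. The sketch ignores activations, communication buffers, and framework overhead, so real footprints are larger. The 70B-parameter model and the per-device capacities are illustrative assumptions.

```python
import math

# fp16 weights + fp16 grads + fp32 master weights + Adam first and second moments
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4  # = 16


def training_state_gb(num_params: float) -> float:
    """Model + optimizer state in GB (1 GB = 1e9 bytes), excluding activations."""
    return num_params * BYTES_PER_PARAM / 1e9


def min_devices(num_params: float, hbm_gb_per_device: float) -> int:
    """Fewest devices that can hold the sharded training state, ignoring overheads."""
    return math.ceil(training_state_gb(num_params) / hbm_gb_per_device)


params = 70e9  # hypothetical 70B-parameter model
print(f"training state: {training_state_gb(params):,.0f} GB")
for hbm in (80, 192):
    print(f"{hbm} GB per device -> at least {min_devices(params, hbm)} devices")
```

For the same model, fewer and larger-memory packages mean less sharding and less inter-device traffic. That is one concrete reason high-memory configurations command a premium.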

This creates natural price segmentation where premium capabilities command premium pricing regardless of competitive dynamics in volume markets. Customers pay for access to unique capabilities, not just better price-performance ratios.

Time-to-SOTA (State of the Art) has similar economic characteristics. Research teams racing to publish breakthrough results will pay significant premiums for hardware that enables faster iteration cycles. The value of being first to market with a new AI capability often exceeds the cost differential between platforms.
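The time-to-SOTA argument is a break-even comparison. A faster platform is worth its price premium when the weeks it saves, multiplied by the value a team places on each week of lead time, exceed the extra cost. All dollar figures and durations in this sketch are hypothetical. In practice, the value per week is the hard input to estimate.

```python
def prefer_faster(cost_fast: float, cost_slow: float,
                  weeks_fast: float, weeks_slow: float,
                  value_per_week: float) -> bool:
    """True if the value of time saved exceeds the faster platform's premium."""
    premium = cost_fast - cost_slow
    value_of_time = (weeks_slow - weeks_fast) * value_per_week
    return value_of_time > premium


# Hypothetical run: $30M over 6 weeks vs $20M over 10 weeks.
for value in (2e6, 3e6):
    choice = "faster" if prefer_faster(30e6, 20e6, 6, 10, value) else "cheaper"
    print(f"${value / 1e6:.0f}M per week of lead -> choose the {choice} platform")
```

The faster platform's premium is $10M for 4 weeks saved. It pays off once a week of lead time is worth more than $2.5M, which is why teams racing to publish tolerate large price differentials.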

Platform Monetization Beyond Hardware

Software and services represent expanding revenue opportunities that scale with usage rather than just hardware sales. Development tools, runtime optimization, and managed infrastructure create recurring relationships that compound over time.

Nvidia's software revenue remains small as a percentage of total data center sales, but growth rates significantly exceed hardware expansion. This suggests that platform monetization is in early stages with substantial upside potential.
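When one revenue line grows faster than another, its share rises along a predictable curve. The sketch estimates how long software and services would take to reach 10% of revenue, the lower bound of the base case's 10-15% indicator. The starting share and both growth rates are hypothetical placeholders, not Nvidia disclosures.

```python
from __future__ import annotations


def software_share(s0: float, g_sw: float, g_hw: float, years: int) -> float:
    """Software share of total revenue after `years` of compounding growth."""
    sw = s0 * (1 + g_sw) ** years
    hw = (1 - s0) * (1 + g_hw) ** years
    return sw / (sw + hw)


def years_to_reach(s0: float, g_sw: float, g_hw: float,
                   target: float, max_years: int = 50) -> int | None:
    """First whole year in which the share reaches `target`, or None."""
    for year in range(max_years + 1):
        if software_share(s0, g_sw, g_hw, year) >= target:
            return year
    return None


# Hypothetical: 3% starting share, software growing 60%/yr, hardware 20%/yr.
for year in range(7):
    print(year, f"{software_share(0.03, 0.60, 0.20, year):.1%}")
print("years to 10%:", years_to_reach(0.03, 0.60, 0.20, 0.10))
```

Even a large growth gap moves the share slowly from a small base. The software line has to stay ahead for several years before it shows up as a meaningful fraction of revenue.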

The key insight is that software value scales with problem complexity. Simple inference applications may commoditize toward cost optimization, but frontier research and development will pay premiums for integrated solutions that reduce time and complexity.

Scarce Input Control as Moat Evolution

Traditional competitive advantages in chip design become less durable as more players achieve technical competence. But control over manufacturing inputs—HBM allocation, advanced packaging capacity, foundry relationships—proves more defensible because it cannot be replicated through engineering effort alone.

TSMC's advanced packaging capacity grows slowly due to technical complexity and capital requirements. HBM production expands gradually because of manufacturing challenges and supplier concentration. These constraints create natural bottlenecks that favor established players with strong supplier relationships.

The result is that platform competition occurs within supply-constrained environments where allocation matters as much as technical capabilities. Success requires not just building competitive products, but securing the inputs necessary to manufacture them at scale.

This dynamic explains how incumbent platforms can maintain pricing power even when facing technically competitive alternatives. If competitors cannot secure equivalent manufacturing allocation, their products remain niche regardless of technical merits.
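The allocation point can be made concrete. An accelerator needs every scarce input, so whichever input is in shortest supply relative to its per-unit requirement caps volume, no matter how good the design is. The bill of materials and supply figures below are hypothetical.

```python
from __future__ import annotations

import math


def max_units(supply: dict[str, float], per_unit: dict[str, float]) -> tuple[int, str]:
    """Units buildable from allocated inputs, and the input that binds."""
    limits = {part: supply.get(part, 0.0) / need for part, need in per_unit.items()}
    binding = min(limits, key=limits.get)
    return math.floor(limits[binding]), binding


# Hypothetical per-accelerator bill of materials and quarterly allocations.
per_unit = {"logic_die": 1, "hbm_stack": 8, "cowos_interposer": 1}
supply = {"logic_die": 100_000, "hbm_stack": 480_000, "cowos_interposer": 70_000}

units, binding = max_units(supply, per_unit)
print(f"{units:,} units, limited by {binding}")
```

In this example, doubling logic-die supply changes nothing. Only more HBM raises output, and then only until interposers bind at 70,000 units. That is how allocation can matter as much as design quality.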

The Past Was Prologue: Platform Lessons for the AI Economy

Jensen Huang's 2006 bet on CUDA represented more than just product diversification—it demonstrated a philosophy about how technology platforms capture value. The decision to invest a billion dollars in parallel computing infrastructure before any clear market existed reflected deep understanding of how winner-take-most dynamics emerge in technology industries.

The pattern was consistent across Nvidia's history: ship capabilities before they're convenient, build ecosystems around those capabilities, then control the scarce inputs that determine who can compete effectively. GeForce 256's hardware T&L enabled new categories of games. RTX ray tracing created new standards for visual fidelity. CUDA enabled new approaches to scientific computing and machine learning.

In each case, the initial response was skepticism followed by gradual adoption, then ecosystem lock-in as software developers optimized for the new capabilities. Competitors could eventually build technically similar hardware, but recreating the software ecosystem and developer relationships required years of investment with uncertain payoffs.

Today's AI infrastructure battle follows the same logic, but with higher stakes and more sophisticated competition. The companies building custom chips aren't resource-constrained startups; they're Google, Amazon, Microsoft, and Meta, with enormous budgets and internal expertise that rivals any hardware vendor.

But the fundamental challenge remains unchanged: platforms win by controlling scarce inputs and software ecosystems, not just by building faster chips. The question is whether hyperscaler engineering resources and financial capabilities can overcome the structural advantages that platform incumbents have accumulated over decades.

An Economy of Compute

The future that emerges from this competition isn't just faster hardware or cheaper inference—it's an economy where computational capabilities become infrastructure that enables new categories of applications and business models.

The company that controls this infrastructure writes the rules for everything built on top of it. In the PC era, Microsoft captured value through operating system control while hardware became commoditized. In mobile, Apple demonstrated how vertical integration could maintain premium positioning. In cloud computing, Amazon showed how infrastructure services could generate sustainable competitive advantages.

AI infrastructure will likely follow a hybrid model where platform incumbents maintain control over cutting-edge capabilities while standardized workloads migrate to optimized alternatives. The key strategic question is which applications remain platform-centric and which commoditize toward cost optimization.

History suggests that the most valuable applications—those requiring maximum performance, fastest iteration, or novel capabilities—will continue gravitating toward platforms that offer complete solutions rather than optimized components. The platform that makes new things possible captures more value than the hardware that makes existing things cheaper.

The Inflection Point

The next 18-24 months will determine whether platform gravity or hyperscaler imperative proves stronger in shaping AI infrastructure. Several key developments will provide early indicators:

PyTorch compilation and backend abstraction progress will signal whether software barriers to competition are eroding faster than anticipated. Successful deployment of second-generation hyperscaler ASICs for training workloads would indicate that custom silicon can achieve competitive capabilities for mainstream applications.

Supply chain allocation for HBM4 and advanced packaging will reveal whether incumbent advantages in scarce input control remain durable. Regulatory investigations into platform practices could accelerate adoption of alternatives regardless of technical readiness.

Most importantly, the emergence of new AI applications that require capabilities beyond current hardware will determine whether platform advantages extend into new categories or whether existing functionalities become sufficient for most use cases.

The companies that understand both the technical dynamics and the economic forces shaping this transition will be best positioned for the economy of compute that emerges. Whether that economy looks more like the PC industry (with separated layers and commodity hardware) or the smartphone industry (with integrated platforms and premium experiences) remains to be determined.

Either way, the principles that drove Nvidia's rise from graphics startup to AI infrastructure leader remain relevant: the fastest way to win hardware is to win the software and the system around it—then control the scarce inputs that everyone needs. The question is whether those principles can withstand the most sophisticated and well-funded competitive challenge in technology industry history.

Disclaimer:

The content does not constitute any kind of investment or financial advice. Kindly reach out to your advisor for any investment-related advice. Please refer to the tab “Legal | Disclaimer” to read the complete disclaimer.