Nvidia 3QFY26 Earnings: Industrialization of Reasoning

How reasoning workloads and full-stack lock-in turned a GPU vendor into infrastructure

TL;DR:

3QFY26 shattered expectations: $57B revenue, 66% DC growth, and a $65B guide—all during the supposed Hopper→Blackwell “air pocket.”

Exponential reasoning inference + sovereign AI + accelerated computing needs create durable, non-correlated demand streams that don’t behave like a typical hardware cycle.

Nvidia’s full-stack strategy—from racks and fabric to CUDA and financing—has transformed it from a chip vendor into the central bank and operating system of AI infrastructure.

CEO Jensen Huang opened the earnings call with a direct rebuttal: “There’s been a lot of talk about an AI bubble. From our vantage point, we see something very different.”

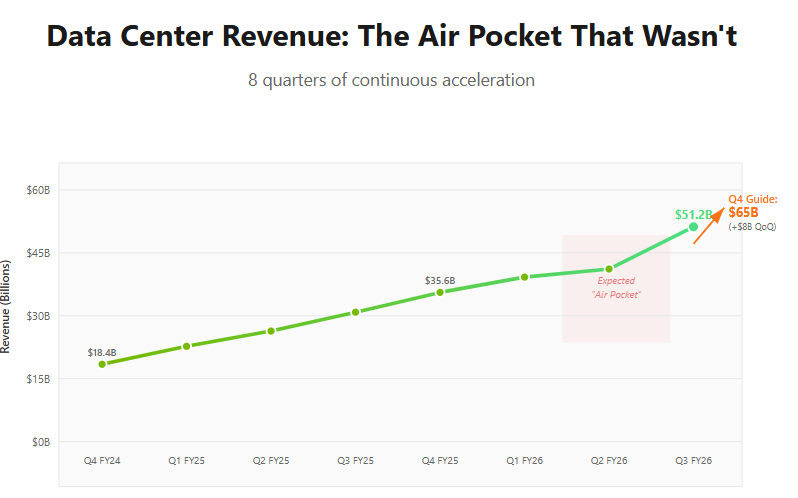

The Air Pocket That Wasn’t

The most dangerous moment for any technology platform is the transition between S-curves. For the last three months, the semiconductor market has been obsessed with a specific version of this danger: the “air pocket.” The theory was rational. As Nvidia transitions from Hopper to Blackwell, customers would naturally pause orders to wait for the better chip. Bears predicted flat quarters, inventory digestion, and margin compression as supply finally caught up with demand.

The November setup made this narrative especially potent. Hyperscalers had just reported earnings where they collectively guided to over $600 billion in capital expenditures for 2026, yet their AI-driven revenue gains remained frustratingly abstract. Media coverage was saturated with questions about ROI. If anyone was going to show a crack in the AI infrastructure thesis, it would be Nvidia, the company at the epicenter and the one everyone watches as the proxy for whether this boom is sustainable or a bubble.

Nvidia reported third quarter fiscal 2026 results. Revenue came in at $57.0 billion, up 62% YoY and 22% sequentially. Data Center revenue hit $51.2 billion, representing 90% of total sales and growing 66% YoY. The company guided fourth quarter revenue to $65.0 billion—an $8 billion sequential increase that represents 14% QoQ growth even from an already massive base.
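For readers who want to check the headline ratios, here is a minimal sketch that recomputes them from the figures quoted above. The variable names are mine, and the inputs are the rounded numbers in this article rather than the exact reported values, so the results are approximate.

```python
# All figures in billions of USD, as quoted in the paragraph above (rounded).
revenue_q3 = 57.0       # total revenue, 3QFY26
data_center_q3 = 51.2   # Data Center revenue, 3QFY26
guide_q4 = 65.0         # guided revenue, 4QFY26

# Share of total sales coming from Data Center.
dc_share = data_center_q3 / revenue_q3

# Sequential step implied by the guide, in dollars and as a growth rate.
guide_step = guide_q4 - revenue_q3
guide_growth = guide_step / revenue_q3

print(f"Data Center share of revenue: {dc_share:.1%}")
print(f"Guided sequential increase: ${guide_step:.1f}B ({guide_growth:.1%})")
```

On these rounded inputs, Data Center comes out at just under 90% of sales, and the guide implies roughly 14% sequential growth, consistent with the numbers cited.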

The interesting question isn’t whether Nvidia beat expectations—it clearly did. The interesting question is why the company can keep growing at this rate in the face of very public doubts about AI demand sustainability, and what that reveals about the nature of the business Nvidia has actually built.

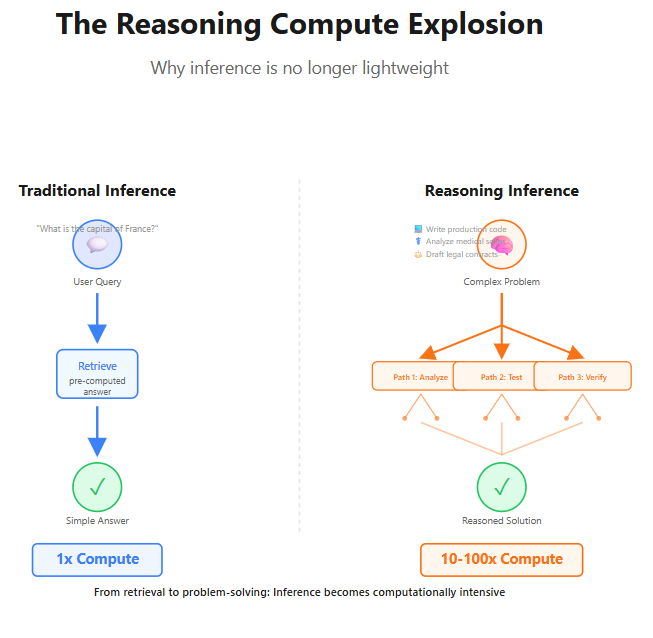

The Reasoning Demand Shift

To understand why Nvidia’s growth isn’t hitting the predicted wall, you need to understand what changed about inference workloads in the last six months. For two years, the existential threat to Nvidia’s dominance has been the “training versus inference” dichotomy. The argument went like this: Nvidia owns training—the computationally intensive process of building AI models—but the much larger inference market will inevitably shift to cheaper, custom silicon from AWS, Google, and others. After all, if inference is just serving up pre-computed responses, why pay Nvidia’s premium?

On the earnings call, Jensen Huang explained why that mental model no longer applies. “There are three scaling laws,” he said, “pre-training, post-training, and inference—each growing exponentially.” The critical revelation is what’s happening to inference. Models like OpenAI’s o1 don’t just retrieve answers; they reason. They engage in chain-of-thought processing, breaking down complex problems, checking their work, and exploring multiple solution paths before delivering a response. Huang emphasized that “AI systems capable of reasoning, planning and using tools mark the next frontier of computing.”

The computational implications are profound. A reasoning query might require ten to one hundred times more compute than a simple retrieval task. When a model spends thirty seconds “thinking” to write production code or analyze medical imaging, that’s not serving—that’s computation-heavy problem-solving. Inference is no longer the lightweight backend process it once was. It’s becoming what I’d call “Training 2.0”: stateful, memory-intensive, and schedule-sensitive workloads that demand the full parallelism and memory bandwidth of Nvidia’s architecture.

This is where Jevons Paradox enters the picture. In 1865, economist William Stanley Jevons observed that as steam engines became more efficient, coal consumption increased rather than decreased, because efficiency made steam power economically viable for many more applications. The same dynamic is now playing out with artificial intelligence. As the cost per token drops and model quality improves, consumption doesn’t moderate—it explodes. Reasoning AI gets embedded everywhere: in coding workflows, legal document analysis, medical diagnostics, supply chain optimization, digital twins for manufacturing. What was once a nice-to-have becomes infrastructure.
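The Jevons argument depends on how strongly usage responds to falling prices. A rough way to see this is a constant-elasticity demand curve, sketched below. The functional form and the elasticity values are illustrative assumptions of mine, not figures from Nvidia or from the earnings call.

```python
def spend_multiplier(price_drop: float, elasticity: float) -> tuple[float, float]:
    """Return (quantity multiplier, total-spend multiplier) when the unit
    price falls by a factor of `price_drop`, assuming constant-elasticity
    demand Q = k * P**(-elasticity). Purely illustrative."""
    quantity_mult = price_drop ** elasticity   # how much more gets consumed
    spend_mult = quantity_mult / price_drop    # new price * new quantity, relative to before
    return quantity_mult, spend_mult


# Hypothetical scenario: cost per token falls 10x.
for elasticity in (0.5, 1.0, 1.5):
    quantity, spend = spend_multiplier(10.0, elasticity)
    print(f"elasticity={elasticity}: usage x{quantity:.1f}, total spend x{spend:.2f}")
```

Total spend only rises when elasticity is above 1. The bull case for Nvidia is effectively a claim that demand for reasoning tokens sits in that regime; the bear case is that it does not.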

This reframes the entire demand picture. CFO Colette Kress noted that analyst expectations for top hyperscalers’ 2026 capital expenditures “now sit roughly at $600 billion, up more than $200 billion relative to the start of the year.”

The cynical view treats this as a bubble number—unsustainable CapEx that must eventually collapse. But if reasoning inference transforms AI from a one-time training CapEx into an ongoing operational compute sink, then $600 billion isn’t overbuild. It’s the early phase of re-platforming global computing infrastructure.

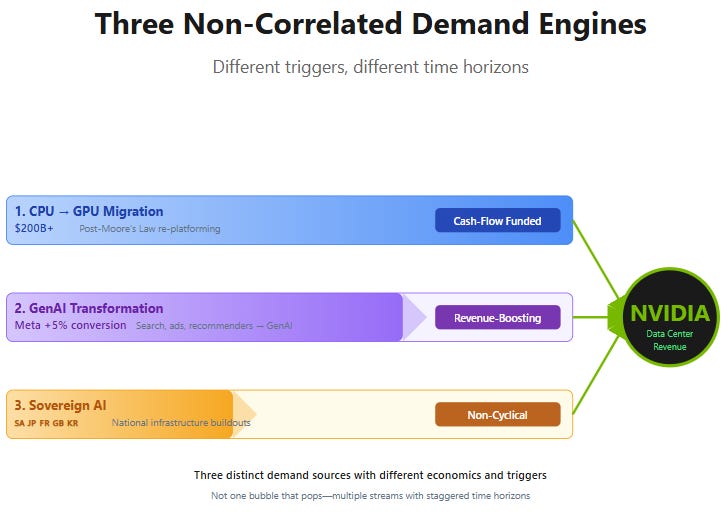

Nvidia’s demand isn’t just one phenomenon; it’s three distinct, non-correlated engines.

The first is the CPU-to-GPU transition that Huang emphasized repeatedly: as Moore’s Law slows, accelerated computing becomes necessary just to maintain the deflationary cost curve that hyperscalers need for their existing workloads. This is hundreds of billions in spending that’s cash-flow funded and defensive, not speculative.

The second is the generative AI transformation of classical machine learning. Meta’s earnings revealed that generative AI-based recommendation systems drove an improvement of more than 5% in ad conversions. Search, ranking, content moderation, and ad targeting, the core profit engines of hyperscale platforms, are all migrating from traditional ML to generative models. This isn’t spending for future optionality; it’s revenue-boosting today.

The third pillar is where things get strategically interesting: Sovereign AI. Countries like Saudi Arabia, Japan, France, and South Korea are building AI infrastructure not as corporate IT projects but as national security and industrial policy. Kress announced that Nvidia secured commitments for 400,000 to 600,000 GPUs from the Kingdom of Saudi Arabia over three years. These aren’t purchases evaluated on quarterly ROI. They’re strategic infrastructure decisions that behave more like defense spending—persistent, geopolitically driven, and largely insensitive to short-term economic cycles.

The Full-Stack Lock-In: Racks, Software, and Finance

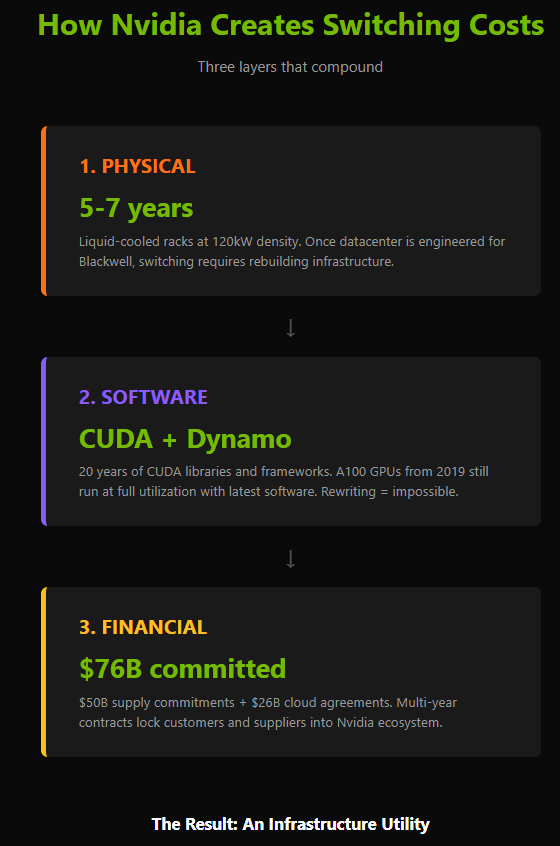

Understanding the demand side explains why growth continues. Understanding the supply side reveals why Nvidia believes it can sustain mid-70s gross margins even as competitors proliferate. The company is no longer just selling chips; it’s building something closer to an infrastructure platform with three layers of lock-in that compound on each other.

Start with the physical layer. Nvidia’s NVL72 rack architecture draws roughly 120 kilowatts per rack, a power density that requires liquid cooling. Once a datacenter is engineered, plumbed, and electrically provisioned for these specifications, switching to a competitor isn’t a procurement decision; it’s a multi-year facilities renovation project. The rack has become the unit of competition, not the chip. Kress noted that “Rubin is our third generation rack-scale system” and that “our ecosystem will be ready for a fast Rubin ramp” because the physical infrastructure remains compatible. This is architectural lock-in at the building level.

The software layer reinforces this. Huang made a point that often gets lost in GPU architecture discussions: “Thanks to CUDA, the A100 GPUs we shipped six years ago are still running at full utilization today, powered by a vastly improved software stack.” This is the opposite of planned obsolescence. Nvidia’s value proposition isn’t that you need to replace your hardware every year; it’s that their software keeps improving the hardware you already own. CUDA compatibility across generations means that applications and frameworks developed for one architecture run on the next, preserving customer investments while simultaneously making it harder to justify ripping out Nvidia for an alternative that would require rewriting the entire stack.

The company’s Dynamo inference framework adds another dimension. Adopted by every major cloud provider, Dynamo enables disaggregated inference—splitting prefill and decode phases across different hardware to optimize utilization. This isn’t just software; it’s orchestration that determines how efficiently a datacenter translates electricity into intelligence. The better Nvidia gets at this layer, the more their economic value shifts from selling silicon to capturing utilization improvements. You’re not just buying GPUs; you’re buying the operating system for AI factories.

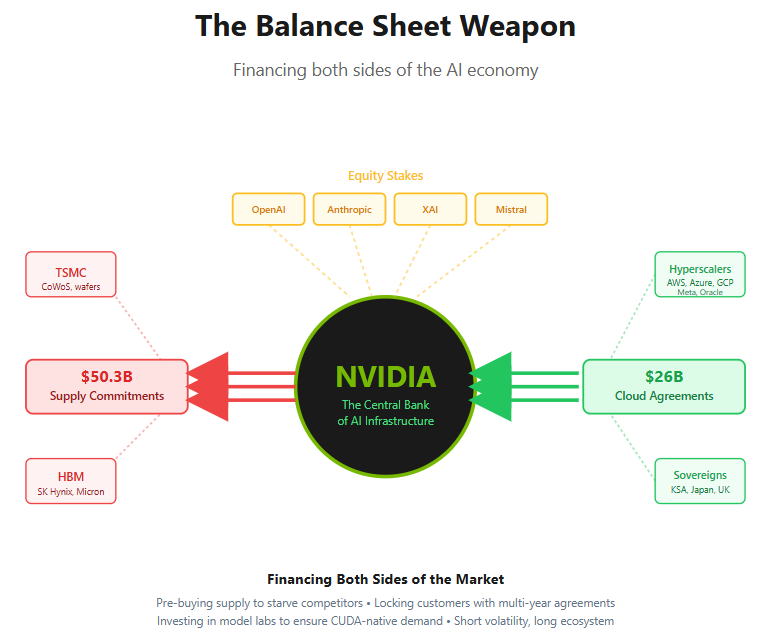

But perhaps the most underappreciated form of lock-in is financial. This quarter, Nvidia reported $50.3 billion in supply commitments, up 63% sequentially. Inventory grew to $19.8 billion, up 32% QoQ. Multi-year cloud service agreements more than doubled from $12.6 billion to $26.0 billion. The company has taken equity stakes in OpenAI, Anthropic, Mistral, and numerous other model developers. These aren’t signs of a vendor waiting for orders. They’re the moves of a company actively engineering its own demand.
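To put those growth rates in dollar terms, the sketch below backs out the implied prior-quarter levels. It uses only the rounded figures quoted above, so the outputs are approximations; the helper name `prior_value` is mine.

```python
def prior_value(current: float, growth: float) -> float:
    """Back out the previous-period level from the current level and a growth rate."""
    return current / (1.0 + growth)


# Figures in billions of USD, as quoted in the paragraph above (rounded).
supply_prior = prior_value(50.3, 0.63)     # supply commitments one quarter earlier
inventory_prior = prior_value(19.8, 0.32)  # inventory one quarter earlier
cloud_ratio = 26.0 / 12.6                  # growth multiple for cloud service agreements

print(f"Implied prior supply commitments: ${supply_prior:.1f}B")
print(f"Implied prior inventory: ${inventory_prior:.1f}B")
print(f"Cloud agreements multiple: {cloud_ratio:.2f}x")
```

On these inputs, supply commitments rose by close to $20 billion in a single quarter and inventory by nearly $5 billion. That is the scale of balance sheet commitment behind the “Bank of Nvidia” framing.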

Think of it as the Bank of Nvidia.

By pre-buying scarce manufacturing capacity—HBM memory, CoWoS advanced packaging—Nvidia guarantees itself supply while starving potential competitors. By locking hyperscalers and sovereign buyers into multi-year agreements, the company secures offtake visibility. By investing in the model labs that drive GPU utilization, Nvidia ensures the software layer that justifies buying more hardware remains well-funded and CUDA-native.

Jensen put it bluntly when discussing these investments: “Rather than giving up a share of our company, we get a share of theirs,” in what he expects will be “once in a generation companies.”

This is a high-wire act. The same commitments that deepen the moat are also genuine balance sheet risk if utilization stumbles or if sovereign projects face financing delays. Nvidia is effectively short volatility—betting that AI infrastructure demand not only persists but accelerates. If that bet pays off, the company has locked in the supply chain and customer base for years. If it doesn’t, the inventory write-downs and working capital consumption will be brutal.

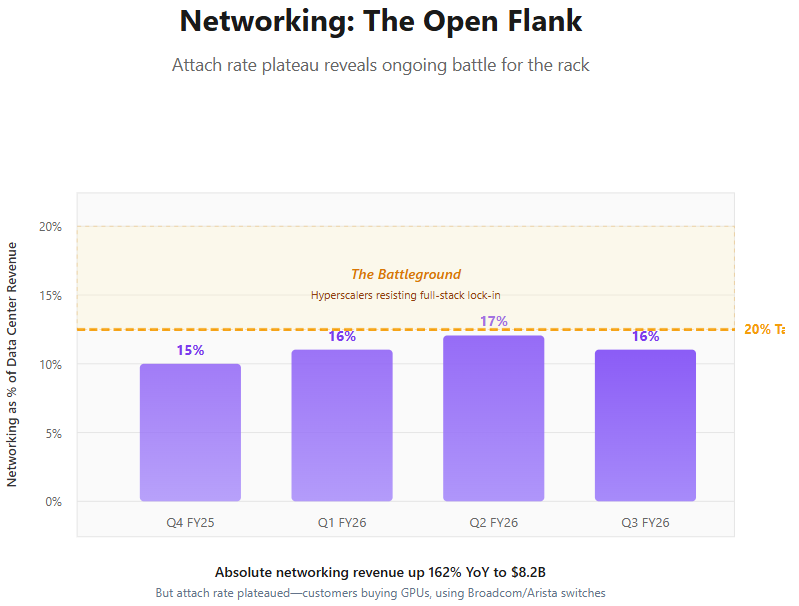

There’s one place where this lock-in strategy shows visible friction: networking. Revenue from Nvidia’s networking division hit $8.2 billion, up an extraordinary 162% YoY. This validates the Mellanox acquisition thesis—customers are buying systems, not just chips. But networking represents only 16% of Data Center revenue, below the 20-25% range that would signal full-stack dominance. The bottleneck is hyperscaler reluctance. Companies like Microsoft and Amazon would prefer to use merchant networking from Broadcom or Arista, preserving flexibility and avoiding total dependence on Nvidia.
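A quick sketch of what that gap means in dollars follows. It assumes, as a simplification of mine, that networking is counted inside Data Center revenue and that the non-networking portion stays fixed at this quarter’s level. The inputs are the rounded figures quoted in this article.

```python
# Figures in billions of USD (rounded, from the text above).
networking = 8.2
data_center = 51.2
yoy_growth = 1.62  # "up 162% YoY"

share = networking / data_center
implied_year_ago = networking / (1.0 + yoy_growth)

# Assumption: networking is part of Data Center revenue, so raising it also
# raises the denominator. Hold the non-networking portion constant and solve
# n / (other + n) = target for n.
other_dc = data_center - networking


def networking_needed(target_share: float) -> float:
    return target_share * other_dc / (1.0 - target_share)


needed_low = networking_needed(0.20)
needed_high = networking_needed(0.25)

print(f"Networking share of Data Center: {share:.1%}")
print(f"Implied year-ago networking revenue: ${implied_year_ago:.1f}B")
print(f"Networking needed for a 20-25% share: ${needed_low:.2f}B to ${needed_high:.2f}B")
```

Under these assumptions, quarterly networking revenue would need to rise by somewhere between a third and three quarters from today’s level to reach that range.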

The company’s response has been Spectrum-X, an Ethernet-based AI networking solution. By aggressively pushing into Ethernet rather than defending proprietary InfiniBand, Nvidia is acknowledging that Ethernet won the protocol war—but they intend to win the Ethernet chip war. It’s a pragmatic concession in service of a larger goal: making it economically and operationally irrational to build AI infrastructure without Nvidia at every layer of the stack.

What the Market Misses

The consensus view on Nvidia remains something like this: “Exceptional business with incredible margins, but ultimately a hardware cycle. Hyperscalers will eventually rationalize capital spending, margins will revert toward semiconductor norms, and custom ASICs will chip away at inference workloads.”

It’s a reasonable skepticism, and it explains why the stock trades at roughly 30-32 times forward earnings rather than the 40-50 times you might expect for a company growing revenue 60% YoY.

My view differs on several dimensions.

First, I don’t think this is a hardware cycle in the traditional sense. Hardware cycles are characterized by capacity buildouts that overshoot demand, followed by painful digestion periods. But if reasoning inference is genuinely shifting compute from a capital expense to an operational expense—from building the model once to running problem-solving queries continuously—then what looks like a buildout is actually the early phase of a new utility. The proper comparison isn’t Cisco in the dot-com boom; it’s AWS in 2010, when skeptics questioned whether cloud computing could sustain its growth trajectory.

Second, the multi-layered lock-in is underappreciated. Most analysis focuses on CUDA and software moats, which are real but also the most visible and therefore the most competed against. The physical lock-in of rack-scale liquid cooling systems and the financial lock-in of supply commitments and equity stakes are less discussed but potentially more durable. A hyperscaler can theoretically rewrite software stacks for a new architecture. They can’t easily rebuild datacenters or create alternative suppliers when one company has locked up manufacturing capacity years in advance.

Third, the China risk that dominated investor conversations for two years has essentially evaporated. Nvidia generated approximately $50 million from its China-specific H20 chip in the third quarter—Kress called it “insignificant.” The company now assumes zero Data Center compute revenue from China in its Q4 guidance. What was once a 25% revenue dependency has been completely replaced by U.S. hyperscaler and sovereign demand. The geopolitical overhang is gone.

But that doesn’t mean there are no risks. The most material near-term risk is simply execution: delivering Rubin on schedule in the second half of 2026 with the promised performance improvements while managing the complexity of a seven-chip system architecture. Any stumble in the cadence would give AMD or custom silicon providers an opening.

The medium-term risk is utilization and token economics. If reasoning AI proves too expensive relative to the value it creates, deployment will slow even if the technology works as advertised. We’re already seeing this tension in the AI startup world, where companies like OpenAI and Anthropic are growing revenue rapidly but remain unprofitable at the token level. For Nvidia’s thesis to fully play out, the economics need to close—not perfectly, but enough to justify continuous expansion rather than a build-pause-evaluate cycle.

The longer-term risk is regulatory. When one company’s architecture runs every major AI model on every major cloud, that starts to look like the kind of market structure that attracts antitrust attention. Nvidia is clearly aware of this, which explains the push to open NVLink IP to ARM and Intel partners and the emphasis on supporting both InfiniBand and Ethernet. But as the company’s dominance deepens, pressure around interoperability standards, CUDA bundling, and networking lock-in will almost certainly increase.

Finally, there’s the balance sheet risk I mentioned earlier. Nvidia is managing its supply chain less like a traditional semiconductor vendor and more like a project financier. If the macro environment shifts—whether from interest rates, policy changes, or a loss of confidence in AI ROI—the company’s aggressive commitments could turn from strategic advantage to liability quickly.

From Chips to Utility

Last time, I framed Nvidia’s moat as “Silicon, Software, and Scarcity” (Link: https://lens.kristal.ai/p/silicon-software-scarcity-nvidias): superior chip architecture, the CUDA software ecosystem, and constrained supply of HBM memory and advanced packaging that kept competitors at bay. That analysis wasn’t wrong, but the third quarter of fiscal 2026 reveals how much the thesis has evolved.

Today, Nvidia is building systems, not just selling silicon. The NVL72 rack architecture with integrated liquid cooling creates physical lock-in that lasts five to seven years. The company is optimizing for reasoning workloads specifically—the most computationally demanding form of AI and the one where programmable GPUs have the clearest advantage over fixed-function ASICs. And perhaps most importantly, Nvidia is using its balance sheet to control access to scarce supply while simultaneously financing the demand side through equity investments in model developers and multi-year agreements with hyperscalers and sovereigns.

This isn’t just vertical integration; it’s ecosystem orchestration. Nvidia has positioned itself as both the supplier and the central bank of AI infrastructure. They design the factories, optimize the software runtime, and ensure the capital flows to keep the whole system expanding.

If last year was about whether Nvidia could keep selling ever-larger GPUs, this quarter reveals something stranger and more durable: the company is quietly becoming the utility layer for an economy where the scarce input isn’t oil, bandwidth, or even electricity—it’s reasoning itself. And unlike cyclical infrastructure buildouts that boom and bust, utilities that successfully embed themselves into the operating fabric of an economy tend to compound for decades.

The air pocket was a myth. The question now is whether Nvidia can maintain this position as the platform matures, regulators take notice, and customers push back on total dependence. But after Wednesday’s results, the burden of proof has shifted. The skeptics need to explain not just why growth will slow, but how customers will extricate themselves from physical, software, and financial lock-in that Nvidia has spent years building—and billions cementing into place.

Disclaimer:

The content does not constitute any kind of investment or financial advice. Kindly reach out to your advisor for any investment-related advice. Please refer to the tab “Legal | Disclaimer” to read the complete disclaimer.